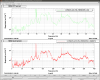

When DTT gets data from NDS2, it apparently gets the wrong sample rate if the sample rate has changed. The plot shows the result. Notice that the 60 Hz magnetic peak appears at 30 Hz in the NDS2 data displayed with DTT. This is because the sample rate was changed from 4 to 8k last February. Keita pointed out discrepancies between his periscope data and Peter F's. The plot shows that the periscope signal, whose rate was also changed, has the same problem, which may explain the discrepancy if one person was looking at NDS and the other at NDS2. The plot shows data from the CIT NDS2. Anamaria tried this comparison for the LLO data and the LLO NDS2 and found the same type of problem. But the LHO NDS2 just crashes with a Test timed-out message.

Robert, Anamaria, Dave, Jonathan

It can be a factor of 8 (or 2 or 4 or 16) using DTT with NDS2 (Robert, Keita)

In the attached, the top panel shows the LLO PEM channel pulled off of CIT NDS2 server, and at the bottom is the same channel from LLO NDS2 server, both from the exact same time. LLO server result happens to be correct, but the frequency axis of CIT result is a factor of 8 too small while Y axis of the CIT result is a factor of sqrt(8) too large.

Jonathan explained this to me:

keita.kawabe@opsws7:~ 0$ nds_query -l -n nds.ligo.caltech.edu L1:PEM-CS_ACC_PSL_PERISCOPE_X_DQ

Number of channels received = 2

Channel Rate chan_type

L1:PEM-CS_ACC_PSL_PERISCOPE_X_DQ 2048 raw real_4

L1:PEM-CS_ACC_PSL_PERISCOPE_X_DQ 16384 raw real_4

keita.kawabe@opsws7:~ 0$ nds_query -l -n nds.ligo-la.caltech.edu L1:PEM-CS_ACC_PSL_PERISCOPE_X_DQ

Number of channels received = 3

Channel Rate chan_type

L1:PEM-CS_ACC_PSL_PERISCOPE_X_DQ 16384 online real_4

L1:PEM-CS_ACC_PSL_PERISCOPE_X_DQ 2048 raw real_4

L1:PEM-CS_ACC_PSL_PERISCOPE_X_DQ 16384 raw real_4

As you can see, both at CIT and LLO the raw channel sampling rate was changed from 2048Hz to 16384Hz, and raw is the only thing available at CIT. However, at LLO, there's also "online" channel type available at 16k, which is listed prior to "raw".

Jonathan told me that DTT probably takes the sampling rate number in the first one in the channel list regardless of the actual epoch each sampling rate was used. In this case dtt takes 2048Hz from CIT but 16384Hz from LLO, but obtains the 16kHz data. If that's true there is a frequency scaling of 1/8 as well as the amplitude scaling of sqrt(8) for the CIT result.

FYI, for the corresponding H1 channel in CIT and LHO NDS2 server, you'll get this:

keita.kawabe@opsws7:~ 0$ nds_query -l -n nds.ligo.caltech.edu H1:PEM-CS_ACC_PSL_PERISCOPE_X_DQ

Number of channels received = 2

Channel Rate chan_type

H1:PEM-CS_ACC_PSL_PERISCOPE_X_DQ 8192 raw real_4

H1:PEM-CS_ACC_PSL_PERISCOPE_X_DQ 16384 raw real_4

keita.kawabe@opsws7:~ 0$ nds_query -l -n nds.ligo-wa.caltech.edu H1:PEM-CS_ACC_PSL_PERISCOPE_X_DQ

Number of channels received = 3

Channel Rate chan_type

H1:PEM-CS_ACC_PSL_PERISCOPE_X_DQ 16384 online real_4

H1:PEM-CS_ACC_PSL_PERISCOPE_X_DQ 8192 raw real_4

H1:PEM-CS_ACC_PSL_PERISCOPE_X_DQ 16384 raw real_4

In this case, the data from LHO happens to be good, but CIT frequency is a factor of 2 too small and magnitude a factor of sqrt(2) too large.

Part of this that DTT does not handle the case of a channel changing sample rate over time.

DTT retreives a channel list from NDS2 that includes all the channels with sample rates, it takes the first entry for each channel name and ignores any following entries in the list with different sample rates. It uses the first sample rate it receives ans the sample rate for the channel at all possible times. So when it retreives data it may be 8k data, but it looks at it as 4k data and interprets the data wrong.

I worked up a band-aid that inserts a layer between DTT and NDS2 and essentially makes it ignore specified channel/sample rate combinations. This has let Robert do some work. We are not sure how this scales and are investigating a fix to DTT.

As followup we have gone through two approaches to fix this:

- We created a proxy we put between DTT & NDS2 for Robert that was able to strip out the versions of the channels that we are not interested in. This was done yesterday and allowed Robert to work. This has allowed Robert to work but is not a scalable solution.

- Jim and I investigated what DTT was doing and have a test build of DTT that allows it to present a list with multiple sample rates per channel. We have a test build of this at LHO. There are rough edges to this, but we have filed a ECR to see about rolling out a solution in this vein in production (which would include LLO).