craig.cahillane@LIGO.ORG - posted 02:23, Friday 05 October 2018 - last comment - 17:48, Friday 05 October 2018 (44351)

DARM Shot Noise Calibrated

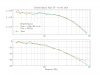

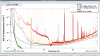

Our DARM shot noise calibration has been off by a factor of 4. While locked at 10 W, I took a PCAL-to-DARM sweep and a DARM open-loop gain (OLG) measurement, which together give a rough measurement of the DARM optical plant. Templates are saved in /ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/O3/H1/Measurements/FullIFOSensingTFs/.

I then fit a simple single-pole model to the measurement:

- Gain: 3.642e6 cts/m (cts are DARM error cts)
- Pole: 381.83 Hz

Our expected DARM pole with 32.3% SRM transmission is 433 Hz, so the measured pole is about 12% low. However, our SRC is poorly aligned, and the SRC ASC loops were off during this measurement. According to Hang's SRC misalignment simulation, if SRM misalignment alone were responsible for a DARM pole this low, the SRM would have to be misaligned by far more than 10 microradians, which is probably not the case. Another culprit could be ITM differential lensing, which is currently high for us. Together, SRM misalignment and ITM differential lensing could explain the measured DARM pole.

I added new filters to the CAL-CS_DARM_ERR filter module to put our latest inverse sensing calibration into the front end:

- O3_D2N: `zpk([382.0;6.7496;-7.0660],[0.1;0.1;7000],1,"n")gain(4768.4)`
- O3Gain: `gain(2.7473e-07)`

We have a dip at 180 Hz because we haven't updated the control signal calibration yet.

Edit: Interestingly, the jitter at 300-350 Hz also appears to be reduced.
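As a rough illustration of the single-pole model, the sketch below evaluates C(f) = H / (1 + i f/f_p) with the quoted fit values, along with its inverse, whose zero at f_p cancels the DARM pole. It also reproduces the roughly 12% pole deficit. This is a simplified sketch, not the front-end filter. The function names are hypothetical, and the sketch ignores the extra low-frequency roots in O3_D2N (the 6.7496 Hz and -7.0660 Hz zeros and the 0.1 Hz poles), the 7000 Hz roll-off pole, and Foton's "n" normalization.

```python
import numpy as np

# Rough single-pole fit values quoted above (10 W lock).
OPTICAL_GAIN_CTS_PER_M = 3.642e6   # H: DARM error cts per meter
DARM_POLE_HZ = 381.83              # f_p: measured DARM pole
EXPECTED_POLE_HZ = 433.0           # expected pole for 32.3% SRM transmission


def sensing(f_hz, gain=OPTICAL_GAIN_CTS_PER_M, pole_hz=DARM_POLE_HZ):
    """Hypothetical single-pole optical response C(f) = H / (1 + i f/f_p), in cts/m."""
    f_hz = np.asarray(f_hz, dtype=float)
    return gain / (1.0 + 1j * f_hz / pole_hz)


def inverse_sensing(f_hz, gain=OPTICAL_GAIN_CTS_PER_M, pole_hz=DARM_POLE_HZ):
    """Inverse response 1/C(f) = (1 + i f/f_p) / H, in m/ct.

    The zero at f_p cancels the pole; the overall scale is 1/H.
    """
    f_hz = np.asarray(f_hz, dtype=float)
    return (1.0 + 1j * f_hz / pole_hz) / gain


# How far below expectation the measured pole sits.
pole_deficit = 1.0 - DARM_POLE_HZ / EXPECTED_POLE_HZ
print(f"pole is {100 * pole_deficit:.1f}% low")  # 11.8% low

# Overall inverse scale, for comparison with the installed O3Gain value.
print(f"1/H = {1.0 / OPTICAL_GAIN_CTS_PER_M:.4e} m/ct")

# Sanity check: C(f) * C^-1(f) should equal 1 at every frequency.
f = np.logspace(1, 4, 5)
print(np.allclose(sensing(f) * inverse_sensing(f), 1.0))
```

With these values, 1/H comes out near 2.7457e-07 m/ct, close to (though not identical to) the installed O3Gain of 2.7473e-07.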


Comments related to this report

Robert, Craig: We looked at the coherence between DARM and the IMC WFS jitter witnesses at the time of last night's 10 W spectrum. The broad humps at 300-350 Hz are notably absent, and some jitter peaks (483.1 Hz and 553.5 Hz) are still present but reduced. We will learn more when we go to higher power.


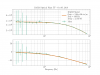
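A DARM-to-witness coherence check of this kind can be sketched with Welch-averaged magnitude-squared coherence. The minimal example below uses synthetic data, not the actual DARM or IMC WFS channels. A hypothetical 483.1 Hz jitter line seen by a witness couples into a DARM stand-in, and each channel gets its own independent white noise. The sample rate, duration, and coupling factor are assumptions chosen for illustration.

```python
import numpy as np
from scipy import signal

FS = 4096          # sample rate in Hz (assumed)
DURATION_S = 64    # length of the synthetic data stretch in seconds
JITTER_HZ = 483.1  # one of the jitter peaks noted above

rng = np.random.default_rng(0)
t = np.arange(FS * DURATION_S) / FS

# Synthetic stand-ins: a jitter line seen by a witness that also couples into
# DARM, with independent broadband noise in each channel.
jitter = np.sin(2 * np.pi * JITTER_HZ * t)
witness = jitter + rng.standard_normal(t.size)
darm = 0.5 * jitter + rng.standard_normal(t.size)

# Welch-averaged magnitude-squared coherence with 1 Hz resolution
# (default Hann window, 50% overlap).
f, coh = signal.coherence(darm, witness, fs=FS, nperseg=FS)

peak_bin = np.argmin(np.abs(f - JITTER_HZ))
print(f"coherence at {f[peak_bin]:.0f} Hz: {coh[peak_bin]:.2f}")
print(f"median coherence across all bins: {np.median(coh):.3f}")
```

At the jitter line the coherence should be close to 1. Elsewhere it should be down at roughly the 1/(number of averages) floor expected for independent noise. This contrast is what distinguishes witnessed jitter from unrelated DARM features.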

Improved the single-pole DARM fit and added a delay parameter. The DARM pole is actually 403 Hz, the delay is 28 microseconds, and the gain is 3.64e6 cts/m. The calibration group will post a full model fit shortly.
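A single pole plus a pure time delay, C(f) = H e^(-2πifτ) / (1 + i f/f_p), can be fit to a complex transfer function with a nonlinear least-squares solver. The sketch below is not the calibration group's fitting code and does not use the real measurement. It generates synthetic data from the values quoted above (3.64e6 cts/m, 403 Hz, 28 µs) with 1% complex noise, then fits those data with scipy.optimize.least_squares, starting from the earlier pole-only result. Minimizing the relative residual C_model/C_meas - 1 is an assumption made here so that all frequencies are weighted comparably. The function names are hypothetical.

```python
import numpy as np
from scipy.optimize import least_squares


def single_pole_with_delay(f_hz, gain_e6, pole_hz, delay_us):
    """C(f) = H / (1 + i f/f_p) * exp(-2 pi i f tau), with H in units of 1e6 cts/m."""
    response = gain_e6 * 1e6 / (1.0 + 1j * f_hz / pole_hz)
    return response * np.exp(-2j * np.pi * f_hz * delay_us * 1e-6)


def residuals(params, f_hz, measured):
    """Relative complex misfit, split into real and imaginary parts."""
    ratio = single_pole_with_delay(f_hz, *params) / measured - 1.0
    return np.concatenate([ratio.real, ratio.imag])


# Synthetic "measurement": the values quoted above plus 1% complex noise.
rng = np.random.default_rng(1)
f = np.logspace(1, 3.5, 60)  # 10 Hz to ~3.2 kHz
truth = single_pole_with_delay(f, 3.64, 403.0, 28.0)
noise = 0.01 * (rng.standard_normal(f.size) + 1j * rng.standard_normal(f.size))
measured = truth * (1.0 + noise)

# Start from the earlier pole-only fit (3.642e6 cts/m, 381.83 Hz, no delay).
x0 = [3.642, 381.83, 0.0]
fit = least_squares(residuals, x0, args=(f, measured),
                    bounds=([0.1, 10.0, -200.0], [100.0, 1e4, 200.0]))
gain_e6, pole_hz, delay_us = fit.x
print(f"gain {gain_e6:.3f}e6 cts/m, pole {pole_hz:.1f} Hz, delay {delay_us:.1f} us")
```

A pure delay adds phase lag without changing the magnitude. In a pole-only model, that lag can be traded off against the pole frequency, so fitting the delay explicitly keeps the two effects separate.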
