Checking in on our DARM lockloss status after the second month of running with our higher bias. At the end of August I posted some comparison plots showing that doubling the bias had significantly reduced the total number of locklosses, as well as the fraction of those locklosses caused by the DARM glitch (86678). I've remade those plots now that we've gone through September.

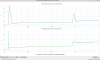

In my usual lockloss vs DARM lockloss plot, we see that we had no locklosses due to the DARM glitch between September 3rd and September 30th, which is the longest we've gone in all of O4 without that lockloss cause!

The average lock length has gone down a bit compared to August (10.8 hours in September vs 13.3 hours in August), but that's still double the average lock length of February, March, June, and July (table, plot). The number of locklosses also remains much lower than in the months before the ESD bias was doubled.