I used Oli's SRM OSEM calibration measurements from [LHO: 87112] to get the absolute calibration of the SRM OSEMs. I only bothered with the ALIGNED position because that is the official set of measurements for calibration.

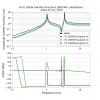

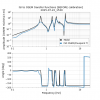

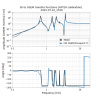

I also used a new, quasi-automated version of the calibration script used for the PR3 and SR3 suspensions. The script fits the 6-15 Hz data directly to 1 [OSEM m]/[GS13 m]; no additional modelling is involved in the calibration.
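As a rough illustration of what "fitting to unity" means, here is a minimal sketch that assumes a single real gain per OSEM chosen by least squares over the 6-15 Hz band. The helper name `fit_unity_gain` and the least-squares estimator are assumptions for illustration only; the actual script may fit magnitude only, weight by coherence, or use a different estimator altogether.

```python
import numpy as np


def fit_unity_gain(freqs, tf, f_lo=6.0, f_hi=15.0):
    """Return the real scalar g that best maps tf onto unity within [f_lo, f_hi].

    Hypothetical helper, not taken from OSEM_calibration_master.py.
    `tf` is assumed to be the complex transfer function OSEMINF/SUSPOINT
    (OSEM m per GS13 m) sampled at `freqs`. Minimising sum |g*tf - 1|^2
    over the band gives the closed form g = sum(Re(tf)) / sum(|tf|^2).
    """
    band = (freqs >= f_lo) & (freqs <= f_hi)
    if not np.any(band):
        raise ValueError("no frequency points inside the fit band")
    h = tf[band]
    return np.sum(h.real) / np.sum(np.abs(h) ** 2)


# Synthetic example: an OSEM that under-reads the GS13 by a factor of 1.756.
freqs = np.linspace(1.0, 30.0, 300)
tf = np.full(freqs.shape, 1 / 1.756, dtype=complex)
g = fit_unity_gain(freqs, tf)
print(f"suggested OSEMINF gain factor: {g:.3f}")  # -> 1.756
```

The fitted factor then multiplies the existing OSEMINF gain, which is the form of the "(new) = factor * (old)" lines in the script output.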

The script's information, coordinates, and instructions will be the subject of a later logpost.

The results of the calibration are a mouthful because we are now calibrating M1, M2, and M3 simultaneously.

The TL;DR is that the M1 OSEM calibrations seemed off by factors of roughly 1.5 to 1.9 (about 1.7 on average), larger than the SR3/PR3 averages, but not unexpected.

The M2 and M3 absolute calibrations were surprisingly good. All eight OSEMs read high relative to the GS13 measurements by roughly 10% (suggested gain corrections of 0.84 to 0.94), which leads me to believe this is likely not a coincidence and that someone may have previously done an absolute calibration of the SRM M2/M3 OSEMs. I would love to confirm these suspicions, but such work may be lost to time.
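The summary numbers above can be checked directly from the per-OSEM correction factors in the script output. This small sketch only copies those factors; the "reading error" uses the mean factor per stage as a rough single number, which is a simplification.

```python
from statistics import mean

# Per-OSEM OSEMINF gain correction factors, copied from the script output in this post.
factors = {
    "M1": {"T1": 1.756, "T2": 1.806, "T3": 1.761, "LF": 1.945, "RT": 1.593, "SD": 1.525},
    "M2": {"UL": 0.900, "LL": 0.838, "UR": 0.921, "LR": 0.939},
    "M3": {"UL": 0.939, "LL": 0.875, "UR": 0.903, "LR": 0.939},
}

for stage, per_osem in factors.items():
    vals = list(per_osem.values())
    # new gain = f * old gain, so the uncalibrated OSEM read 1/f times the GS13-referenced motion.
    misread_pct = 100 * (1 / mean(vals) - 1)
    print(f"{stage}: factors {min(vals):.3f} to {max(vals):.3f}, "
          f"mean {mean(vals):.3f}, mean reading error {misread_pct:+.0f}%")
```

M1 comes out with a mean factor of about 1.73 (the OSEMs under-read), while M2 and M3 sit near 0.90 and 0.91 (the OSEMs over-read by roughly 10%).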

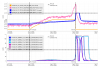

See the attached PDFs for the before and after calibration of all three stages.

Here is the full calibration script output:

___________________________________________

OSEM calibration of H1:SUS-SRM

Frequency range for calibration: 6 to 15 Hz

Stages to be calibrated: ['M1' 'M2' 'M3']

Measurement date: 2025-09-23_1830 (UTC).

%%%%%%%%%%%%

Stage: M1

%%%%%%%%%%%%

The suggested (calibrated) M1 OSEMINF gains are

(new T1) = 1.756 * (old T1) = 1.958

(new T2) = 1.806 * (old T2) = 2.127

(new T3) = 1.761 * (old T3) = 1.842

(new LF) = 1.945 * (old LF) = 2.102

(new RT) = 1.593 * (old RT) = 1.819

(new SD) = 1.525 * (old SD) = 1.794
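Each suggested gain is just the fitted factor times the OSEMINF gain in place at the time of measurement (listed under EXTRA INFORMATION). A quick arithmetic check for M1, using only numbers printed in this post:

```python
# M1 OSEMINF gains at the time of measurement (EXTRA INFORMATION section),
# the fitted correction factors, and the suggested new gains as printed.
old_gains = {"T1": 1.115, "T2": 1.178, "T3": 1.046, "LF": 1.081, "RT": 1.142, "SD": 1.176}
factors = {"T1": 1.756, "T2": 1.806, "T3": 1.761, "LF": 1.945, "RT": 1.593, "SD": 1.525}
printed_new = {"T1": 1.958, "T2": 2.127, "T3": 1.842, "LF": 2.102, "RT": 1.819, "SD": 1.794}

# new gain = (correction factor) * (old gain)
new_gains = {osem: factors[osem] * old_gains[osem] for osem in old_gains}

for osem, g in new_gains.items():
    # Inputs are rounded to 3 decimals, so the last digit can differ from the printed value.
    print(f"{osem}: {factors[osem]:.3f} * {old_gains[osem]:.3f} = {g:.4f} "
          f"(printed {printed_new[osem]:.3f})")
```

Because the printed factors and old gains are themselves rounded, products such as LF (1.945 * 1.081 = 2.1025) can land one count away from the printed 2.102.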

To compensate for the OSEM gain changes, we estimate that the H1:SUS-SRM_M1_DAMP loops must be changed by factors of:

L gain = 0.571 * (old L gain)

T gain = 0.656 * (old T gain)

V gain = 0.565 * (old V gain)

R gain = 0.565 * (old R gain)

P gain = 0.561 * (old P gain)

Y gain = 0.571 * (old Y gain)
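These damping-gain factors are not simply the inverse of each diagonal element of the M1 Euler conversion matrix (1/1.769 = 0.565, not 0.571, for L and Y). They do agree, to the printed three decimals, with the diagonal of the inverse of that matrix (printed under EXTRA INFORMATION), which accounts for the L-Y cross-coupling. The sketch below assumes that is how the factors are obtained; it is a consistency check, not the script's code.

```python
import numpy as np

# SRM M1 Euler conversion matrix from the EXTRA INFORMATION section.
# Rows are new dofs, columns are old dofs, ordering (L, T, V, R, P, Y).
# Entries printed as "-0.0"/"+0.0" are taken as exactly zero.
M1 = np.array([
    [ 1.769, 0.0,    0.0,    0.0,   0.0,   -0.014],
    [ 0.0,   1.525,  0.0,    0.0,   0.0,    0.0],
    [ 0.0,   0.0,    1.77,  -0.001, 0.0,    0.0],
    [ 0.0,   0.0,   -0.224,  1.77,  0.006,  0.0],
    [ 0.0,   0.0,   -0.761,  0.046, 1.783,  0.0],
    [-2.203, 0.0,    0.0,    0.0,   0.0,    1.769],
])

# Assumption: each dof's damping-gain factor is the matching diagonal entry of inv(M),
# i.e. the factor that undoes the calibration's effect on that dof's own loop.
comp = np.diag(np.linalg.inv(M1))
for dof, c in zip("LTVRPY", comp):
    print(f"{dof} gain = {c:.3f} * (old {dof} gain)")
```

With the rounded matrix this reproduces all six printed factors (0.571, 0.656, 0.565, 0.565, 0.561, 0.571).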

The calibration will change the apparent alignment of the suspension as seen by the M1 OSEMs.

NOTE: The actual alignment of the suspension will NOT change as a result of the calibration process.

The changes are computed as (osem2eul) * gain * pinv(osem2eul).
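To see why unequal OSEM gains produce cross-coupled Euler terms, here is a minimal sketch of (osem2eul) * gain * pinv(osem2eul) for just the LF and RT OSEMs sensing L and Y. The two-OSEM geometry and the `LEVER_ARM` value are hypothetical, chosen only for illustration; they are not the real SRM M1 sensing matrix.

```python
import numpy as np

# Toy geometry (assumption, not the real SRM M1 layout): LF and RT both sense L,
# and sense Y with opposite signs through a lever arm of LEVER_ARM metres per radian.
LEVER_ARM = 0.1  # m/rad, hypothetical value for illustration only

eul2osem = np.array([
    [1.0,  LEVER_ARM],   # LF = L + a*Y
    [1.0, -LEVER_ARM],   # RT = L - a*Y
])
osem2eul = np.linalg.pinv(eul2osem)  # (L, Y) from (LF, RT)

# LF and RT correction factors from the M1 results.
gain = np.diag([1.945, 1.593])

# (osem2eul) * gain * pinv(osem2eul): maps old (L, Y) readbacks to new ones.
M_LY = osem2eul @ gain @ np.linalg.pinv(osem2eul)
print(np.round(M_LY, 4))
```

Analytically the diagonal entries are (g_LF + g_RT)/2 = 1.769 for any lever arm, matching the L and Y diagonal of the printed M1 matrix. The off-diagonal entries depend on the lever arm and sign convention, but their product, ((g_LF - g_RT)/2)^2 = 0.031, does not; the printed entries give (-0.014)(-2.203) = 0.031. That L-to-Y term is why most of the apparent M1 yaw change comes from the existing longitudinal offset.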

Using the alignments from 2025-09-23_1830 (UTC) as a reference, the new M1 apparent alignments are:

| DOF | Previous value | New value | Apparent change |
|---|---|---|---|
| L | -37.1 um | -65.7 um | -28.6 um |
| T | 45.4 um | 69.2 um | +23.8 um |
| V | 35.5 um | 62.4 um | +27.0 um |
| R | -62.2 urad | -112.0 urad | -49.7 urad |
| P | 1082.8 urad | 1901.1 urad | +818.3 urad |
| Y | 3.9 urad | 88.6 urad | +84.8 urad |

%%%%%%%%%%%%

Stage: M2

%%%%%%%%%%%%

The suggested (calibrated) M2 OSEMINF gains are

(new UL) = 0.900 * (old UL) = 1.109

(new LL) = 0.838 * (old LL) = 1.082

(new UR) = 0.921 * (old UR) = 1.134

(new LR) = 0.939 * (old LR) = 1.293

The calibration will change the apparent alignment of the suspension as seen by the M2 OSEMs.

NOTE: The actual alignment of the suspension will NOT change as a result of the calibration process.

The changes are computed as (osem2eul) * gain * pinv(osem2eul).

Using the alignments from 2025-09-23_1830 (UTC) as a reference, the new M2 apparent alignments are:

| DOF | Previous value | New value | Apparent change |
|---|---|---|---|
| L | -58.3 um | -52.7 um | +5.6 um |
| P | 861.7 urad | 772.3 urad | -89.4 urad |
| Y | -518.0 urad | -520.3 urad | -2.2 urad |

%%%%%%%%%%%%

Stage: M3

%%%%%%%%%%%%

The suggested (calibrated) M3 OSEMINF gains are

(new UL) = 0.939 * (old UL) = 1.267

(new LL) = 0.875 * (old LL) = 1.138

(new UR) = 0.903 * (old UR) = 1.170

(new LR) = 0.939 * (old LR) = 1.351

The calibration will change the apparent alignment of the suspension as seen by the M3 OSEMs.

NOTE: The actual alignment of the suspension will NOT change as a result of the calibration process.

The changes are computed as (osem2eul) * gain * pinv(osem2eul).

Using the alignments from 2025-09-23_1830 (UTC) as a reference, the new M3 apparent alignments are:

| DOF | Previous value | New value | Apparent change |
|---|---|---|---|
| L | -72.1 um | -65.8 um | +6.4 um |
| P | 923.6 urad | 845.0 urad | -78.6 urad |
| Y | -459.5 urad | -453.6 urad | +5.9 urad |

We have calculated a GS13 to OSEM calibration of H1 SRM ['M1' 'M2' 'M3'] using HAM5 ST1 drives from 2025-09-23_1830 (UTC).

We fit the response SRM_OSEMINF/HAM5_SUSPOINT to unity between 6 and 15 Hz to get a calibration such that 1 [OSEM m] = 1 [GS13 m].

This message was generated automatically by OSEM_calibration_master.py on 2025-09-27 00:50:36.037056+00:00 UTC

%%%%%%%%%%%%%%%%%%%%%%%%%%%%

EXTRA INFORMATION

%%%%%%%%%%%%%%%%%%%%%%%%%%%%

%%%%%%%%%%%%

Stage: M1

%%%%%%%%%%%%

The H1:SUS-SRM_M1_OSEMINF gains at the time of measurement were:

(old) T1: 1.115

(old) T2: 1.178

(old) T3: 1.046

(old) LF: 1.081

(old) RT: 1.142

(old) SD: 1.176

The matrix to convert from the old SRM M1 Euler dofs to the (calibrated) new Euler dofs is:

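| new \ old | L | T | V | R | P | Y |
|---|---|---|---|---|---|---|
| L | +1.769 | -0.0 | +0.0 | +0.0 | -0.0 | -0.014 |
| T | +0.0 | +1.525 | -0.0 | +0.0 | +0.0 | +0.0 |
| V | +0.0 | +0.0 | +1.77 | -0.001 | -0.0 | +0.0 |
| R | -0.0 | -0.0 | -0.224 | +1.77 | +0.006 | -0.0 |
| P | -0.0 | -0.0 | -0.761 | +0.046 | +1.783 | -0.0 |
| Y | -2.203 | +0.0 | -0.0 | -0.0 | +0.0 | +1.769 |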

The matrix is used as (M) * (old EUL dof) = (new EUL dof)

The dof ordering is ('L', 'T', 'V', 'R', 'P', 'Y')

%%%%%%%%%%%%

Stage: M2

%%%%%%%%%%%%

The H1:SUS-SRM_M2_OSEMINF gains at the time of measurement were:

(old) UL: 1.232

(old) LL: 1.291

(old) UR: 1.232

(old) LR: 1.377

To compensate for the M2 OSEM gain changes, any controllers using the M2 OSEMs as inputs must be compensated with gains of:

L gain = 1.113 * (old L gain)

P gain = 1.112 * (old P gain)

Y gain = 1.114 * (old Y gain)

The matrix to convert from the old SRM M2 Euler dofs to the (calibrated) new Euler dofs is:

| new \ old | L | P | Y |
|---|---|---|---|
| L | +0.9 | +0.001 | +0.001 |
| P | +0.227 | +0.9 | -0.02 |
| Y | +0.635 | -0.02 | +0.9 |

The matrix is used as (M) * (old EUL dof) = (new EUL dof)

The dof ordering is ('L', 'P', 'Y')

%%%%%%%%%%%%

Stage: M3

%%%%%%%%%%%%

The H1:SUS-SRM_M3_OSEMINF gains at the time of measurement were:

(old) UL: 1.349

(old) LL: 1.300

(old) UR: 1.295

(old) LR: 1.439

To compensate for the M3 OSEM gain changes, any controllers using the M3 OSEMs as inputs must be compensated with gains of:

L gain = 1.094 * (old L gain)

P gain = 1.095 * (old P gain)

Y gain = 1.095 * (old Y gain)

The matrix to convert from the old SRM M3 Euler dofs to the (calibrated) new Euler dofs is:

| new \ old | L | P | Y |
|---|---|---|---|
| L | +0.914 | +0.0 | +0.0 |
| P | +0.147 | +0.914 | -0.025 |
| Y | +0.147 | -0.025 | +0.914 |

The matrix is used as (M) * (old EUL dof) = (new EUL dof)

The dof ordering is ('L', 'P', 'Y')
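As a consistency check on the "(M) * (old EUL dof) = (new EUL dof)" rule, applying the printed M3 matrix to the old M3 apparent alignment should reproduce the M3 apparent-alignment table above. This sketch uses only numbers printed in this post.

```python
import numpy as np

# SRM M3 Euler conversion matrix from the EXTRA INFORMATION section.
# Rows are new dofs, columns are old dofs, ordering (L, P, Y).
M3 = np.array([
    [0.914,  0.0,    0.0],
    [0.147,  0.914, -0.025],
    [0.147, -0.025,  0.914],
])

# Old M3 apparent alignment at 2025-09-23_1830 UTC: L in um, P and Y in urad.
old = np.array([-72.1, 923.6, -459.5])

new = M3 @ old      # (M) * (old EUL dof) = (new EUL dof)
change = new - old  # apparent change only; the physical alignment is unchanged

for dof, o, n, c in zip(("L", "P", "Y"), old, new, change):
    print(f"{dof}: {o:8.1f} -> {n:8.1f} (change {c:+.1f})")
```

With the three-decimal matrix this lands within about 0.2 um or urad of each printed M3 value; the residual comes from rounding of the printed matrix entries.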