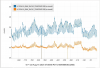

Recently, I looked into the narrow peaks in the 20-40 Hz region, noting that coherence with beam jitter sensors suggested that these peaks could be cleaned (86160). While the sharp jitter peaks may be cleanable (though we are having problems with this), many of them rest on pedestals that are not coherent with jitter or vibration sensors. See, for example, the peak just above 20 Hz in the figure of the referenced log (here): there is a sharp coherent peak and a broad pedestal that is not coherent with the jitter sensors. During past periods when the sharp peak was absent, the pedestal was absent too. We subsequently found that this peak was produced by the Liebert AC in the warehouse building (86257), and, as Shivaraj noted, the broad incoherent pedestal came and went along with the coherent jitter peak as we turned the Liebert on and off.
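The distinction between the sharp coherent peak and the incoherent pedestal can be illustrated with a toy model. This is an illustrative assumption, not a model of the actual coupling mechanism: a line seen by a witness sensor couples to DARM both linearly and through a product with a slow, unwitnessed modulation `n`. The product term spreads the line into a pedestal that is not coherent with the witness and that disappears whenever the line does. All signal names, amplitudes, and the helper `welch` are hypothetical; the sketch uses only NumPy.

```python
import numpy as np

def welch(x, y, fs, nperseg):
    """Welch-averaged one-sided PSDs of x and y and their magnitude-squared coherence.

    Hann window, 50% overlap, mean removed per segment (DC/Nyquist factor of 2 not corrected).
    """
    win = np.hanning(nperseg)
    scale = 2.0 / (fs * np.sum(win ** 2))  # one-sided PSD normalisation
    step = nperseg // 2
    pxx = np.zeros(nperseg // 2 + 1)
    pyy = np.zeros(nperseg // 2 + 1)
    pxy = np.zeros(nperseg // 2 + 1, dtype=complex)
    nseg = 0
    for start in range(0, len(x) - nperseg + 1, step):
        xs = x[start:start + nperseg]
        ys = y[start:start + nperseg]
        X = np.fft.rfft(win * (xs - xs.mean()))
        Y = np.fft.rfft(win * (ys - ys.mean()))
        pxx += np.abs(X) ** 2
        pyy += np.abs(Y) ** 2
        pxy += np.conj(X) * Y
        nseg += 1
    coh = np.abs(pxy) ** 2 / (pxx * pyy)
    freqs = np.fft.rfftfreq(nperseg, d=1.0 / fs)
    return freqs, scale * pxx / nseg, scale * pyy / nseg, coh


fs = 256.0       # sample rate [Hz] (hypothetical)
T = 256.0        # duration [s]
f_line = 20.5    # stand-in for the sharp jitter line "just above 20 Hz"
rng = np.random.default_rng(0)
t = np.arange(int(fs * T)) / fs

line = np.sin(2 * np.pi * f_line * t)
witness = line + 0.01 * rng.standard_normal(t.size)  # jitter sensor with its own noise

# Slow, unwitnessed modulation: white noise low-passed by a 0.25 s boxcar (~2 Hz wide)
n = np.convolve(rng.standard_normal(t.size), np.ones(64) / 64, mode="same")
n = (n - n.mean()) / n.std()

background = 0.01 * rng.standard_normal(t.size)
darm_linear = line + background                        # linear coupling only
darm_nonlinear = line + 0.3 * line * n + background    # plus a product (non-linear) term

f, _, p_lin, _ = welch(witness, darm_linear, fs, nperseg=1024)
_, _, p_nl, coh_nl = welch(witness, darm_nonlinear, fs, nperseg=1024)

i_line = np.argmin(np.abs(f - f_line))         # 20.5 Hz bin
i_ped = np.argmin(np.abs(f - (f_line + 1.0)))  # 21.5 Hz, on the pedestal
print(f"pedestal excess at 21.5 Hz: {p_nl[i_ped] / p_lin[i_ped]:.0f}x")
print(f"coherence at line: {coh_nl[i_line]:.3f}, on pedestal: {coh_nl[i_ped]:.3f}")
```

In this toy model, coherence with the witness stays near 1 at the line but is near zero on the pedestal, even though the pedestal is far above the background. Setting `line` to zero removes both the line and the pedestal from `darm_nonlinear`, since the product term vanishes, which mirrors the pedestal coming and going with the Liebert. A linear, witness-based subtraction would remove the line here but leave the pedestal.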

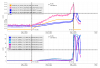

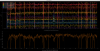

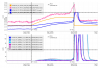

To study the non-linear coupling that produces these broad pedestals under the jitter peaks, I injected a slow frequency sweep with a global speaker injection so that I could vibrate all regions of the LVEA. The sweep reproduced both types of coupling (the sharp, jitter-coherent peak and the broad incoherent pedestal) and showed that the coupling extends over a broad band, from at least 20 to 45 Hz.
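The log does not state the sweep parameters. As a sketch with hypothetical values, a slow linear sweep over the 20 to 45 Hz band can be generated as below. The `min_duration` helper encodes one assumed rule of thumb for "slow": the sweep should dwell in each spectrogram bin for at least one FFT length, which limits the sweep rate to the square of the frequency resolution.

```python
import numpy as np

def linear_sweep(f_start, f_stop, duration, fs):
    """Unit-amplitude linear chirp whose frequency rises from f_start to f_stop."""
    t = np.arange(int(duration * fs)) / fs
    rate = (f_stop - f_start) / duration  # sweep rate [Hz/s]
    phase = 2 * np.pi * (f_start * t + 0.5 * rate * t ** 2)
    return t, np.sin(phase)

def min_duration(f_start, f_stop, resolution):
    """Shortest sweep that spends at least one FFT length (1/resolution s) in each bin.

    Dwell per bin = resolution / rate; requiring dwell >= 1/resolution gives
    rate <= resolution**2, so duration >= span / resolution**2.
    """
    return (f_stop - f_start) / resolution ** 2


# Hypothetical choice: 0.25 Hz spectrogram resolution over 20-45 Hz
T_sweep = min_duration(20.0, 45.0, 0.25)
t, drive = linear_sweep(20.0, 45.0, T_sweep, fs=1024.0)
print(T_sweep)  # 400.0
```

A linear sweep spends equal time at every frequency in the band; a logarithmic sweep would instead dwell longer at the low end.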

To begin narrowing down the site of the broad non-linear coupling, I first injected with a shaker mounted on the PSL table. This produced a jitter peak without the pedestal, so the non-linear part of the coupling is not at the PSL table.

I then shook the input beam tube because it was a dominant scatter site whose coupling we reduced in 2024 with baffle work and by adjusting the CP-Y compensation plate (76969). No coupling was seen at MC tube vibration levels that matched or exceeded those induced in the MC tube by the speaker injection that produced the non-linear coupling. Therefore, the non-linear coupling site is also not at the input arm MC tube.
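The exclusion logic used for the PSL table and MC tube can be written as an explicit check. The helper below is hypothetical, and the spectra in the usage lines are made-up placeholders rather than measured data: a site is ruled out over a band only if the local shaker drove it at least as hard as the global speaker injection at every frequency in the band and no DARM excess appeared. Matching the level matters because a non-linear coupling may not appear at all at lower drive.

```python
import numpy as np

def site_excluded(freqs, shaker_vib, speaker_vib, darm_excess, band):
    """Hypothetical exclusion test for one candidate coupling site.

    freqs:       frequency axis [Hz]
    shaker_vib:  vibration ASD at the site during the local shaker injection
    speaker_vib: vibration ASD at the same sensor during the global speaker injection
    darm_excess: boolean array, True where DARM rose above background during the shaker injection
    band:        (f_lo, f_hi) band in which the speaker injection produced coupling
    """
    sel = (freqs >= band[0]) & (freqs <= band[1])
    # A non-linear coupling may only switch on above some drive level, so the
    # local drive must at least match the global one everywhere in the band.
    drove_hard_enough = np.all(shaker_vib[sel] >= speaker_vib[sel])
    no_coupling_seen = not np.any(darm_excess[sel])
    return bool(drove_hard_enough and no_coupling_seen)


# Placeholder spectra (not measured data): shaker drives 2x harder than the speaker.
freqs = np.arange(15.0, 50.0, 0.5)
speaker = np.ones_like(freqs)
shaker = 2.0 * np.ones_like(freqs)
excess = np.zeros(freqs.size, dtype=bool)
print(site_excluded(freqs, shaker, speaker, excess, band=(20.0, 45.0)))  # True
```

If the shaker had driven the site more weakly than the speaker anywhere in the band, the test would return `False`: a null result at lower drive would not rule out a non-linear coupling.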

The global acoustic, PSL table, and MC tube swept injections and the coupling to DARM are shown in the figure.

The next step is to continue this process in other regions of the LVEA to narrow down the coupling site.