I created an updated blend for the SR3 pitch estimator. The new files are in the SUS SVN next to the first version (see LHO log 84452).

The design script is blend_SR3_pitchv2.m

The Foton update script is make_SR3_Pitch_blend_v2.m

Both are in {SUS_SVN}/HLTS/Common/FilterDesign/Estimator/ (revision 12608).

The update script installs the new blends into FM2 of SR3_M1_EST_P_FUSION_{MEAS/MODL}_BP under the name pit_v2; pit_v1 should still be in FM1. To use the new blend, turn FM2 on and FM1 off in both banks.

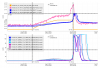

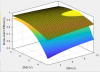

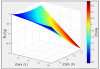

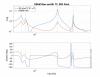

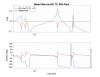

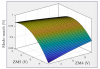

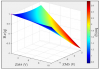

Jeff and Oli tried the first version and found that the first 2 modes (about 0.65 and 0.75 Hz) show more motion with the estimator damping than with the normal damping. To correct this, _v2 adds the OSEM signal to the estimator for those modes. See the plots above: figures 1 and 2 are the _v2 blend and a zoom of the _v2 blend. Figure 3 shows the measured pitch plant vs. the OSEM path; the modes line up pretty well. There is a small shift because the peaks are close together, which I hope will not matter. Figure 4 shows the plant vs. the model path. Now all 4 modes are driven by the measured OSEM signal instead of the model.
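The idea behind the _v2 change can be illustrated with a complementary pair of filters: the OSEM (measured) path gets a bandpass around the first two modes, the model path gets the matching notch, and the two weights always sum to one. This is a minimal sketch under assumed parameters (a single second-order bandpass centered at 0.7 Hz with Q = 2, and hypothetical helper names `bandpass` and `blend_pair`); it is not the actual filter from blend_SR3_pitchv2.m.

```python
import math


def bandpass(f_hz, f0_hz, q):
    """Second-order resonant bandpass evaluated at f_hz (unity gain at f0_hz).

    Hypothetical illustration only: H(s) = (w0/q) s / (s^2 + (w0/q) s + w0^2).
    """
    s = 2j * math.pi * f_hz
    w0 = 2 * math.pi * f0_hz
    return (w0 / q) * s / (s * s + (w0 / q) * s + w0 * w0)


def blend_pair(f_hz, f0_hz=0.7, q=2.0):
    """Return (H_meas, H_model) for an assumed complementary blend.

    H_meas weights the OSEM path; H_model = 1 - H_meas is a notch at f0_hz,
    so H_meas + H_model = 1 at every frequency.
    """
    h_meas = bandpass(f_hz, f0_hz, q)
    h_model = 1.0 - h_meas
    return h_meas, h_model


# Compare the weights well below, at, and well above the first two modes.
for f in (0.1, 0.65, 0.75, 5.0):
    h_meas, h_model = blend_pair(f)
    print(f"{f:5.2f} Hz  |H_meas| = {abs(h_meas):.3f}  |H_model| = {abs(h_model):.3f}")
```

Near 0.65 and 0.75 Hz the OSEM weight is close to one, while well below and above the modes the model path dominates. A real blend would likely use higher-order filters for steeper crossovers; a single second-order section just keeps the complementarity easy to check.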

It is interesting that the model was not doing a good job of predicting the motion at the first 2 peaks. My guess is that either (a) the model and the plant are different, or (b) there are unmodeled drives pushing on the plant (the suspension) that the model doesn't know about.
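A toy simulation makes case (b) concrete: even if the model matched the plant perfectly, motion driven by a force the model never sees cannot appear in the model prediction; it only shows up in the OSEM. The sketch assumes a hypothetical single-mode pitch resonance at 0.65 Hz with Q = 20, a known slow drive, and small white noise standing in for an unmodeled drive such as DAC noise. None of these numbers come from SR3.

```python
import math
import random


def simulate(drive, f0_hz=0.65, q=20.0, dt=1e-3):
    """Hypothetical single-mode oscillator x'' + (w0/q) x' + w0^2 x = w0^2 * drive.

    Integrated with semi-implicit Euler; returns the displacement samples.
    """
    w0 = 2 * math.pi * f0_hz
    x, v = 0.0, 0.0
    out = []
    for u in drive:
        a = w0 * w0 * (u - x) - (w0 / q) * v
        v += a * dt
        x += v * dt
        out.append(x)
    return out


def rms(values):
    return math.sqrt(sum(v * v for v in values) / len(values))


random.seed(0)
n = 60_000  # 60 s at dt = 1 ms
known = [math.sin(2 * math.pi * 0.1 * k * 1e-3) for k in range(n)]
# Assumed stand-in for an unmodeled drive (e.g. DAC noise): small white noise.
unmodeled = [0.05 * random.gauss(0.0, 1.0) for _ in range(n)]

plant = simulate([u + d for u, d in zip(known, unmodeled)])
model = simulate(known)  # identical dynamics, but it only sees the known drive
residual = [p - m for p, m in zip(plant, model)]

print(f"RMS plant    : {rms(plant):.4f}")
print(f"RMS residual : {rms(residual):.4f}")
```

Because the system is linear, the residual (plant minus model) is the response to the unmodeled drive alone, which is exactly the motion the model path cannot predict no matter how good the plant fit is.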

I'm guessing the answer is (b), unmodeled drives, most likely from DAC noise. I think this because:

1 - The plant fit is smooth and really good.

2 - In the yaw analysis that Edgard and Ivey are doing (not yet posted), the first mode of the yaw plant is visible in the OSEM signal, but the ISI motion is much too small to excite that level of motion. Since the OSEM does see the motion, something else must be exciting it.

3 - DAC noise is the only candidate I can think of.

A quick chat with Jeff indicates that the DAC noise models at those frequencies are not well trusted. We'll try anyway and see if the models come close. I don't see how to use the estimator to deal with that noise, since we would need an accurate real-time measurement of it.