Tony S, Francisco L [Miriam R]

[Editor's note: Added a scan of the procedure with notes included (there is no scanner at the ES), and the "What was done differently?" section. Also added links to some of the references.]

Measurement summary

By running es_meas.sh, we completed the measurement procedure T1500062. The measurement went well. Results are attached in LHO_EndX_PD_ReportV5; values are within 0.10% of previous measurements, which is good for an initial review. Beams on the Rx side look centered (see attached images of the power sensor, PS_BEFORE and PS_AFTER).

What was done differently for this procedure?

By design, the Pcal team follows procedure T1500062 to run an end station measurement. Recently, Miriam worked on a shell script that acquires and saves the GPS times requested in the procedure. The purpose is to save the user time and to reduce user error when (1) reading and copying ten-digit numbers from the machine onto paper, then (2) reading and copying those ten-digit numbers from paper back into the machine.

A week ago, Joe and Miriam successfully tested the shell script at LLO (L1:78065) when making the most recent Pcal ES measurement. Thus, we proceeded to test that the program is interferometer independent.
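The idea of logging GPS times by machine rather than by hand can be sketched as follows. This is a minimal illustration, not the contents of es_meas.sh: the function names, the log file format, and the step labels are all hypothetical, and the fixed 18 s GPS-UTC offset assumes a date after 2017-01-01 with no new leap second announced since.

```python
import time

GPS_EPOCH_UNIX = 315964800   # 1980-01-06 00:00:00 UTC expressed in Unix seconds
GPS_UTC_LEAP_OFFSET = 18     # GPS minus UTC in seconds (assumed; valid since 2017-01-01)


def unix_to_gps(unix_seconds):
    """Convert a Unix timestamp to integer GPS seconds."""
    return int(unix_seconds) - GPS_EPOCH_UNIX + GPS_UTC_LEAP_OFFSET


def record_gps(label, log_path, unix_seconds=None):
    """Append '<label> <gps>' to a log file and return the GPS time.

    `label` is a hypothetical name for the procedure step being timed.
    """
    if unix_seconds is None:
        unix_seconds = time.time()
    gps = unix_to_gps(unix_seconds)
    with open(log_path, "a") as handle:
        handle.write(f"{label} {gps}\n")
    return gps
```

Calling `record_gps("step_label", "gps_times.txt")` at each step of the procedure would leave a file that a later script can read directly, so no ten-digit number has to be copied by hand.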

Additionally, I decided to use ndscope instead of StripTool, since I feel more comfortable and familiar with the functions included in ndscope. The one thing we lose from StripTool is monitoring the actual value of the plotted channels -- ndscope cannot enable the cross-hair function during live plotting -- but we solved that by using the caget command in the terminal. The scope I used is saved at:

/ligo/home/francisco.llamas/PCAL/scopes/ex_maintenance.yml
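For reference, the caget workaround can also be scripted. The sketch below is an assumption about how one might do it, not what was run at the end station: it shells out to the EPICS `caget` command in terse mode (`-t`, which prints only the value), and the channel name in the usage comment is a placeholder rather than a real Pcal channel.

```python
import subprocess


def read_channels(channels, run=subprocess.run):
    """Return {channel: value} by calling `caget -t` once per channel.

    `run` is injectable so the function can be exercised without EPICS.
    """
    values = {}
    for channel in channels:
        result = run(
            ["caget", "-t", channel],
            capture_output=True,
            text=True,
            check=True,
        )
        # Terse mode prints only the value, so the output parses as a float
        values[channel] = float(result.stdout.strip())
    return values


# Usage on a workstation with EPICS tools installed (placeholder channel name):
# print(read_channels(["IFO:HYPOTHETICAL-CHANNEL_OUTMON"]))
```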

There were no issues while running the shell program. The results, as mentioned in the summary section, agree with previous measurements. The key takeaway is that the data can easily be processed at the end station, saving us hours of work. Today's outcome, however, does not mean that a printout is unnecessary. The printout can become a fail-safe option in case the ES computers become compromised, with the terminal method as the default. We will have to go through at least a couple more iterations of this new program before making a final decision.

Terminal output from generate_measurement_data.py

(pcal_env) francisco.llamas@cdsdell425:/ligo/gitcommon/Calibration/pcal/O4/ES/scripts/pcalEndstationPy$ python generate_measurement_data.py --WS PS4 --date 2025-07-21

/ligo/gitcommon/Calibration/pcal/O4/ES/scripts/pcalEndstationPy/generate_measurement_data.py:52: SyntaxWarning: invalid escape sequence '\R'

log_entry = f"{current_time} {command} \Results found here\: {results_path}\n"

Reading in config file from python file in scripts

../../../Common/O4PSparams.yaml

PS4 rho, kappa, u_rel on 2025-07-21 corrected to ES temperature 299.2 K :

-4.702207423037734 -0.0002694340454223 3.166921849830658e-05

Copying the scripts into tD directory...

Connected to nds.ligo-wa.caltech.edu

martel run

reading data at start_time: 1439656235

reading data at start_time: 1439656787

reading data at start_time: 1439657190

reading data at start_time: 1439657604

reading data at start_time: 1439658060

reading data at start_time: 1439658393

reading data at start_time: 1439658575

reading data at start_time: 1439659259

reading data at start_time: 1439659634

Ratios: -0.4616287378386522 -0.466497509893687

writing nds2 data to files

finishing writing

Background Values:

bg1 = 9.088424; Background of TX when WS is at TX

bg2 = 4.841436; Background of WS when WS is at TX

bg3 = 8.973841; Background of TX when WS is at RX

bg4 = 4.915986; Background of WS when WS is at RX

bg5 = 9.091218; Background of TX

bg6 = 0.432428; Background of RX

The uncertainty reported below are Relative Standard Deviation in percent

Intermediate Ratios

RatioWS_TX_it = -0.461629;

RatioWS_TX_ot = -0.466498;

RatioWS_TX_ir = -0.455998;

RatioWS_TX_or = -0.461669;

RatioWS_TX_it_unc = 0.077366;

RatioWS_TX_ot_unc = 0.075967;

RatioWS_TX_ir_unc = 0.068272;

RatioWS_TX_or_unc = 0.080853;

Optical Efficiency

OE_Inner_beam = 0.987787;

OE_Outer_beam = 0.989670;

Weighted_Optical_Efficiency = 0.988728;

OE_Inner_beam_unc = 0.048451;

OE_Outer_beam_unc = 0.051954;

Weighted_Optical_Efficiency_unc = 0.071040;

Martel Voltage fit:

Gradient = 1636.889155;

Intercept = 0.253680;

Power Imbalance = 0.989563;

Endstation Power sensors to WS ratios::

Ratio_WS_TX = -1.077440;

Ratio_WS_RX = -1.391188;

Ratio_WS_TX_unc = 0.046697;

Ratio_WS_RX_unc = 0.038795;

=============================================================

============= Values for Force Coefficients =================

=============================================================

Key Pcal Values :

GS = -5.135100; Gold Standard Value in (V/W)

WS = -4.702207; Working Standard Value

costheta = 0.988362; Angle of incidence

c = 299792458.000000; Speed of Light

End Station Values :

TXWS = -1.077440; Tx to WS Rel responsivity (V/V)

sigma_TXWS = 0.000503; Uncertainity of Tx to WS Rel responsivity (V/V)

RXWS = -1.391188; Rx to WS Rel responsivity (V/V)

sigma_RXWS = 0.000540; Uncertainity of Rx to WS Rel responsivity (V/V)

e = 0.988728; Optical Efficiency

sigma_e = 0.000702; Uncertainity in Optical Efficiency

Martel Voltage fit :

Martel_gradient = 1636.889155; Martel to output channel (C/V)

Martel_intercept = 0.253680; Intercept of fit of Martel to output (C/V)

Power Loss Apportion :

beta = 0.998895; Ratio between input and output (Beta)

E_T = 0.993799; TX Optical efficiency

sigma_E_T = 0.000353; Uncertainity in TX Optical efficiency

E_R = 0.994898; RX Optical Efficiency

sigma_E_R = 0.000353; Uncertainity in RX Optical efficiency

Force Coefficients :

FC_TxPD = 7.901498e-13; TxPD Force Coefficient

FC_RxPD = 6.189270e-13; RxPD Force Coefficient

sigma_FC_TxPD = 4.661048e-16; TxPD Force Coefficient

sigma_FC_RxPD = 3.277513e-16; RxPD Force Coefficient

data written to ../../measurements/LHO_EndX/tD20250819/
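As a cross-check on the output above, the absolute `sigma_*` values can be recovered from the relative standard deviations printed in percent. The sketch below does that arithmetic. The second half is an inference from the printed numbers only, not taken from the script: beta, E_T and E_R are consistent with E_T/E_R = beta and E_T * E_R = e. The helper name is hypothetical.

```python
import math


def rel_percent_to_abs(value, rel_unc_percent):
    """Convert a relative standard deviation in percent to an absolute one."""
    return abs(value) * rel_unc_percent / 100.0


# Values copied from the terminal output above
sigma_TXWS = rel_percent_to_abs(-1.077440, 0.046697)
sigma_RXWS = rel_percent_to_abs(-1.391188, 0.038795)
sigma_e = rel_percent_to_abs(0.988728, 0.071040)

# Assumed relations (inferred from the numbers): E_T / E_R = beta, E_T * E_R = e
e, beta = 0.988728, 0.998895
E_T = math.sqrt(e * beta)
E_R = math.sqrt(e / beta)

print(f"sigma_TXWS = {sigma_TXWS:.6f}")
print(f"sigma_RXWS = {sigma_RXWS:.6f}")
print(f"sigma_e    = {sigma_e:.6f}")
print(f"E_T = {E_T:.6f}, E_R = {E_R:.6f}")
```

The three sigma values reproduce the printed 0.000503, 0.000540 and 0.000702, and E_T and E_R agree with the printed values to about one part in a million.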

Note: The data for this measurement, and this alog [first edition], were all done at EX!
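The SyntaxWarning in the terminal output comes from the `\R` and `\:` sequences on line 52 of generate_measurement_data.py, which are not valid Python escape sequences. A possible fix, assuming the backslashes were not meant to appear in the log text, is to drop them. The wrapper function and its name are hypothetical, added only to make the sketch self-contained.

```python
def format_log_entry(current_time, command, results_path):
    """Build the log line without the invalid '\\R' and '\\:' escapes."""
    return f"{current_time} {command} Results found here: {results_path}\n"
```

If the literal backslashes are wanted in the log, doubling them (`\\R`, `\\:`) would silence the warning while keeping the current output.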

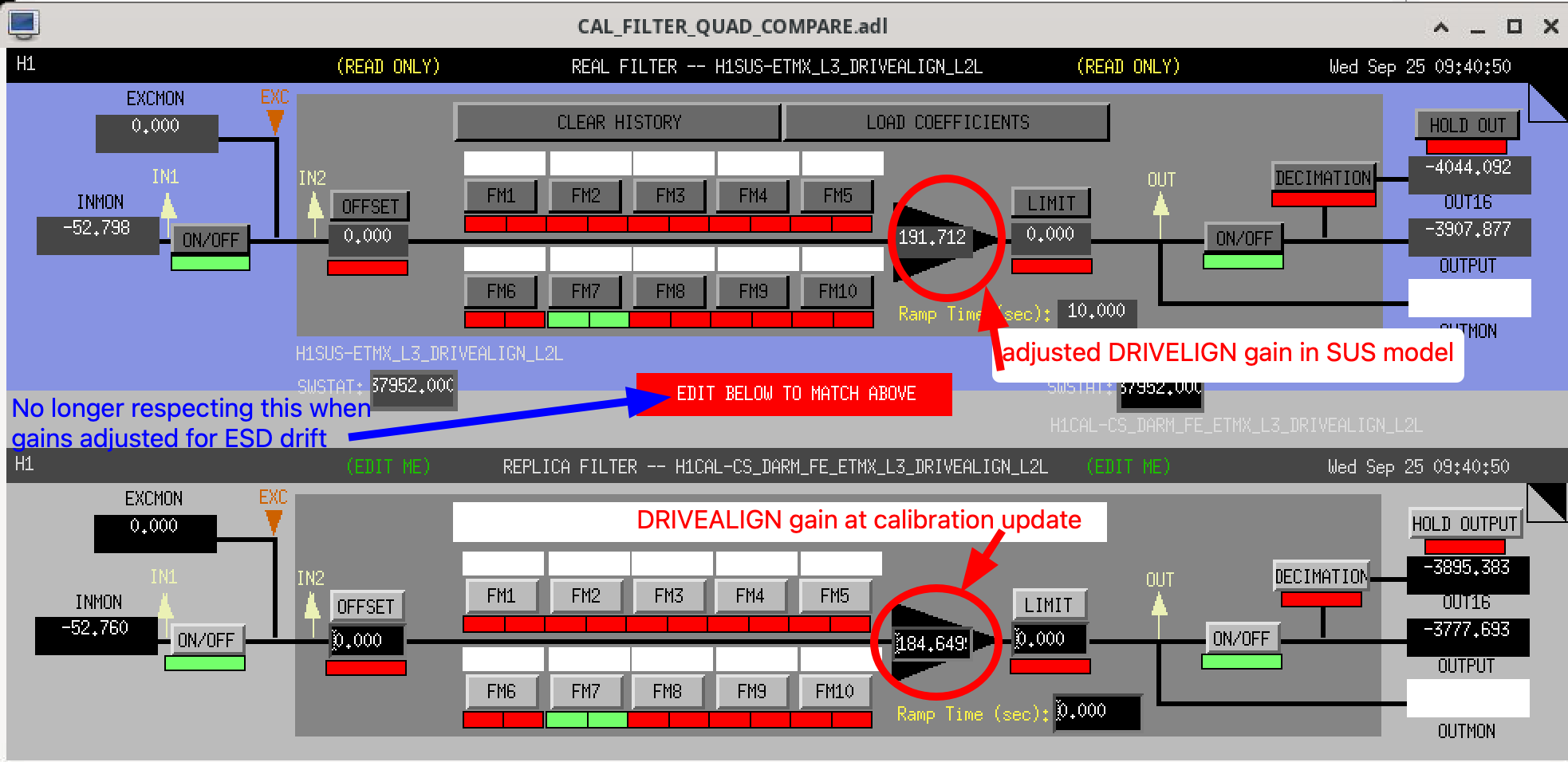

Somewhere along the way I updated the TST drivealign gain parameter in the pyDARM model even though I shouldn't have. At this point, I don't recall if I was confused because the two sites operate differently or if I was just running a test and left this parameter changed in the model template file by accident and subsequently forgot about it. In any case, the drivealign gain parameter change made its way through along with the actuation delay adjustments I made to compensate for both the new ETMX DACs and for residual phase delays that haven't been properly compensated for recently (
