Following the steps detailed in 86935, we were able to get to the engage ASC full IFO state.

I ran the code for engaging IFO ASC by hand, and there were no issues. I did move the alignment around by hand to make sure the buildups were good and the error signals were reasonable. Ryan reset the green references once all the loops, including the soft loops, were engaged.

We held for a bit at 2 W DC readout to confer on the plan. We decided to power up and monitor IMC REFL. We checked that the IMC REFL power made sense:

- we reduced the IMC REFL power by half on IOT2L on Friday

- at 2 W, we saw that IMC REFL was 1 mW of power

- At 25 W we saw that IMC REFL was 12.7 mW

- before the power outage IMC REFL was 8 mW

- Daniel reports that the IMC REFL power tripled after the power outage, so we would expect 24 mW

- but with the power down by half, we get about 12 mW; this is reasonable

- We checked that the power followed this trend as we powered up to full power (60 W from the PSL)
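The arithmetic behind this check can be written out as a short sketch. It uses only the numbers quoted in the list above; the function names are hypothetical, and it assumes that IMC REFL scales linearly with input power and that the 8 mW pre-outage figure is comparable to the 25 W measurement, as the comparison in the list implies.

```python
# Sanity check of the IMC REFL numbers quoted above.
# Assumptions (not from a real interface): linear scaling of IMC REFL with
# input power, and that the 8 mW pre-outage value corresponds to 25 W input.

def expected_refl_mw(pre_outage_mw, outage_factor, attenuation):
    """Pre-outage power, scaled by the post-outage increase and the IOT2L cut."""
    return pre_outage_mw * outage_factor * attenuation

def scale_linear(refl_mw, from_input_w, to_input_w):
    """Scale a measured IMC REFL power linearly to a different input power."""
    return refl_mw * to_input_w / from_input_w

# 8 mW before the outage, tripled after it, then halved on IOT2L.
expected_from_history = expected_refl_mw(8.0, 3.0, 0.5)

# 1 mW measured at 2 W input, extrapolated to 25 W input.
expected_from_2w = scale_linear(1.0, 2.0, 25.0)

measured_at_25w = 12.7

print(f"expected from history: {expected_from_history:.1f} mW")
print(f"extrapolated from 2 W: {expected_from_2w:.1f} mW")
print(f"measured at 25 W:      {measured_at_25w:.1f} mW")
```

Both estimates land within a few percent of the 12.7 mW measured at 25 W, which is why the reading was judged reasonable.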

I ran guardian code to engage the camera servos so we could see what the low frequency noise looked like. It looked much better than it did the last time we were here!

We then stopped just before laser noise suppression. With IMC REFL down by half, we adjusted many gains up by 6 dB. We determined that on line 5939, where the IMC REFL gain is checked to see if it is below 2, it should now be checked to see if it is below 8. I updated and loaded the guardian.
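As a rough illustration of why halving the IMC REFL power leads to a 6 dB gain change and a threshold of 8 instead of 2, here is a minimal sketch. It assumes the 20·log10 amplitude convention and that the gain threshold is expressed in dB; the variable names are hypothetical and this is not the guardian code itself.

```python
import math

def amplitude_ratio_to_db(ratio):
    """Convert a signal amplitude ratio to dB (20*log10 convention)."""
    return 20 * math.log10(ratio)

def db_to_amplitude_ratio(db):
    """Convert dB back to a signal amplitude ratio."""
    return 10 ** (db / 20)

# Half the light on the detector gives half the signal, so a factor of 2
# in gain is needed to compensate.
compensation_db = amplitude_ratio_to_db(2.0)

# Assumption: the threshold is a gain in dB, so it shifts by the same 6 dB.
old_threshold_db = 2
new_threshold_db = old_threshold_db + 6

print(f"compensation for a factor of 2: {compensation_db:.2f} dB")
print(f"threshold: {old_threshold_db} -> {new_threshold_db}")
```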

We ran laser noise suppression with no issues.

Then, I realized that we actually want to increase the power out of the PSL so that the power at IM4 trans matches the value before the power outage. Due to the IMC issues, that power has dropped from about 56 W to about 54 W.

I opened the ISS second loop with the guardian, and then stepped up PSL requested power from 60 W to 63 W. This seemed to get us the power out we wanted.

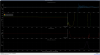
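For reference, the size of the step from 60 W to 63 W is close to a simple proportional estimate. The sketch below assumes IM4 trans scales linearly with the PSL requested power, which is an approximation, and uses the approximate 56 W and 54 W values quoted above; the function name is hypothetical.

```python
def required_request_w(current_request_w, current_trans_w, target_trans_w):
    """Requested PSL power needed to reach a target IM4 trans power,
    assuming IM4 trans is proportional to the requested power."""
    return current_request_w * target_trans_w / current_trans_w

# 60 W requested gave about 54 W at IM4 trans; the pre-outage value was about 56 W.
estimate_w = required_request_w(60.0, 54.0, 56.0)

print(f"proportional estimate: {estimate_w:.1f} W requested")
```

The estimate comes out slightly above 62 W, so a request of 63 W is consistent with the rounded numbers.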

Then, while we were sitting at this slightly higher power, we had a lockloss. The lockloss appears to be an IMC lockloss (as in the IMC lost lock before the IFO).

The IMC REFL power had been increasing, which we expected from the increase in input power. However, it looks like the IMC REFL power was increasing even more than it should have been. This doesn't make any sense.

Since we were down, we again took the IMC up to 60 W and then 63 W. We did not see the same IMC REFL power increase that we had just seen when locked.

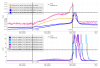

I am attaching an ndscope. I used the first time cursor to show when we stepped up to 63 W. You can see that between the first and second time cursors, the IMC REFL power increases and the IM4 trans power drops. However, the ISS second loop was NOT on. We also did NOT see this behavior when we stepped up to 60 W during the power up sequence. Finally, we could not replicate this behavior when we held in DOWN and increased the input power with the IMC locked.