[Sheila, Eric, Jim, Kar Meng, Daniel D.]

When we started working this morning, the particle counts were 20/10/10 per cubic foot.

It looked like the old PZT cable that needs to be replaced was routed back into the chamber to a cable bracket that was out of reach. Before we can remove it, we need to find the cable harness plan for HAM7.

In the meantime, we were able to re-route the TEC cable using photos from this alog.

### Broke for lunch ###

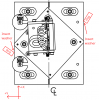

Over lunch, we determined that the correct connector for the PZT/translation stage is on CB5 in HAM7. It's labeled cable #4 in the wiring diagram. We also verified from CDS that the TEC controller and the oven operate correctly.

After we returned, the particle counts were 0/0/0 per cubic foot, and we uncovered the chamber to finish replacing the cables.

Unfortunately, cable #4 between CB5 (see image) and the OPO is terminated in a male molded connector, which is screwed into the connector bracket. This meant that we needed to remove the female cable (from the flange) from the rear of the connector and then remove the male connector (to the OPO) from the cable bracket. Jim came in to help us reach the connector. He decided to first remove the top and second-from-top connectors (3rd and 4th on CB5 in the wiring plan) from the bracket to make the connectors for cable #4 easier to reach. Jim was then able to remove the old cable and replace it with the new one in the bracket.

After Jim left, we continued installing the new cable. The longest DB-9 tentacle on the new cable is for the VOPO PZTs, the DB-15 is for the stage control, and the short DB-9 is a spare, which we coiled to the side. We then connected the piezo and stage control connectors to the OPO and roughly re-routed the cabling on the ISI table. Final routing and securing of these new cables, along with a check for mistakes, is still required.