Ivey,

I finished the yaw-to-yaw transfer functions for the OSEM estimator using the corrected measurements that Oli took for SR3 yesterday [see LHO: 86202].

The fits have been added to the Sus SVN and are in '~/SusSVN/sus/trunk/HLTS/Common/FilterDesign/Estimator/fits_H1SR3_2025-08-05.mat'. They are already calibrated for the estimator filter banks and can be installed with 'make_SR3_yaw_model.m', which is in the same folder.

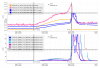

Attached below are two images of the fits for the estimator.

The first attachment shows the Suspoint Y to M1 DAMP Y fit. The zpk for this fit is

'zpk([0,0,-0.027+20.489i,-0.064+11.458i,-0.027-20.489i,-0.064-11.458i],[-0.072+6.395i,-0.072-6.395i,-0.096+14.454i,-0.096-14.454i,-0.062+21.267i,-0.062-21.267i],-0.001)'
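
To sanity-check a fit against its plot, the zpk can be evaluated along the jω axis. The following is a minimal Python sketch. It assumes the roots are continuous-time values in rad/s, which is MATLAB's default for zpk(), and it uses the three-decimal values exactly as quoted above. The helper name zpk_response is hypothetical.

```python
import cmath
import math

# Zeros, poles and gain of the first fit, copied from the zpk() string above.
# Assumption: continuous-time roots in rad/s (MATLAB's default for zpk()).
ZEROS_1 = [0, 0, complex(-0.027, 20.489), complex(-0.064, 11.458),
           complex(-0.027, -20.489), complex(-0.064, -11.458)]
POLES_1 = [complex(-0.072, 6.395), complex(-0.072, -6.395),
           complex(-0.096, 14.454), complex(-0.096, -14.454),
           complex(-0.062, 21.267), complex(-0.062, -21.267)]
GAIN_1 = -0.001


def zpk_response(zeros, poles, gain, freq_hz):
    """Evaluate H(s) = k * prod(s - z) / prod(s - p) at s = j*2*pi*f."""
    s = 1j * 2 * math.pi * freq_hz
    num = gain
    for z in zeros:
        num *= s - z
    den = 1
    for p in poles:
        den *= s - p
    return num / den


for f in (0.1, 1.0, 3.0):
    h = zpk_response(ZEROS_1, POLES_1, GAIN_1, f)
    print(f"{f:5.2f} Hz  |H| = {abs(h):.3e}  "
          f"phase = {math.degrees(cmath.phase(h)):7.1f} deg")
```

This fit has the same number of zeros and poles, so |H| should approach |k| = 0.001 at high frequency. The double zero at the origin forces the response to zero at DC.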

The second attachment shows the Suspoint Y to M1 DAMP Y fit. The zpk for this fit is

'zpk([-0.002+19.224i,-0.007+8.31i,-0.002-19.224i,-0.007-8.31i],[-0.06+6.398i,-0.06-6.398i,-0.091+14.439i,-0.091-14.439i,-0.074+21.29i,-0.074-21.29i],12.184)'
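
The two fits have nearly identical pole pairs. One quick way to compare them is to convert each upper-half-plane pole p to a natural frequency f0 = |p| / 2π and a quality factor Q = |p| / (2 |Re p|). The following is a minimal Python sketch that again assumes the roots are in rad/s. The helper pole_freq_q is hypothetical.

```python
import math

# Upper-half-plane poles of the two fits quoted above (conjugates omitted).
# Assumption: roots are in rad/s, as in MATLAB's default continuous-time zpk().
POLES_FIT1 = [complex(-0.072, 6.395), complex(-0.096, 14.454), complex(-0.062, 21.267)]
POLES_FIT2 = [complex(-0.06, 6.398), complex(-0.091, 14.439), complex(-0.074, 21.29)]


def pole_freq_q(p):
    """Return (natural frequency in Hz, quality factor) for a stable complex pole."""
    w0 = abs(p)              # natural frequency in rad/s
    q = w0 / (2 * -p.real)   # Q = w0 / (2 * decay rate)
    return w0 / (2 * math.pi), q


for p1, p2 in zip(POLES_FIT1, POLES_FIT2):
    f1, q1 = pole_freq_q(p1)
    f2, q2 = pole_freq_q(p2)
    print(f"fit 1: {f1:.3f} Hz, Q = {q1:5.1f}   fit 2: {f2:.3f} Hz, Q = {q2:5.1f}")
```

Under the rad/s assumption, the resonances of the two fits agree to within a few mHz. Their Q values differ more, which is typical because damping is the less tightly constrained parameter in a fit.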

I made sure to unplug the cable at the end of my injections today!