Merge h1sush2a/2b to form h1sush12

Jeff, Oli, Fil, Marc, Erik, Jonathan, EJ, Dave

h1sush2a was upgraded to become h1sush12; h1sush2b has been powered down for now.

In the MSR, the Supermicro W2245 h1sush2a was removed from the rack, and the new W3323 h1sush12 was installed in its place. The IX Dolphin card and cable were transferred to the new machine.

In the CER, the H1SUSH2A IO chassis was upgraded to become H1SUSH12:

- The ADC was removed from h1sush2b and installed as the third ADC in h1sush12.
- All the 18-bit DACs were removed.
- Three LIGO-DACs were installed, along with a new fourth ADC.
- The three binary cards were left in place; their cables were long enough to stay attached while the chassis was pulled out of the rack.

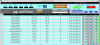

New models for h1iopsush12 and h1sus[mc1,mc3,prm,pr3,im,ham1] were installed. Note that h1sushtts has been renamed h1susham1. A DAQ restart was needed.

More details will follow.

New ASCIMC, LSC models

Jennie, Dave:

New h1ascimc and h1lsc models were installed. A DAQ restart was needed.

Beckhoff changes

Daniel, Dave:

Daniel installed a new CS-ISC Beckhoff PLC. A DAQ restart was needed.

DAQ Restart

Dave, Jonathan:

The DAQ was restarted for:

- retiring iopsush2a and iopsush2b, and adding the new iopsush12
- h1sush12 user model changes
- new h1ascimc and h1lsc models
- an EDC restart for ECAT CS ISC

We restarted the 1-leg of the DAQ first, since that is the leg the control room is pointed at. The daqd systems did not come back properly, which exposed an error in the checkdaqconfig script that runs before daqd on the data concentrator machine: if the daqd process is restarted too quickly, the script may make only a partial copy of the ini files to their safe, stable location. We had to remove the affected directory from the DAQ channel list archive and let checkdaqconfig re-run, after which the 1-leg came up as expected.
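The partial-copy failure described above is the general problem of a non-atomic copy being interrupted. The sketch below does not describe how checkdaqconfig is actually written; it only illustrates one common remedy, under the assumption that the archive lives on a single POSIX filesystem. The files are copied into a hidden staging directory, which is then renamed into place, so the archive directory either does not exist or is complete. The function name `archive_ini_files`, the `tag` argument and the directory layout are all hypothetical.

```python
import os
import shutil
import tempfile
from pathlib import Path


def archive_ini_files(src_dir: str, archive_root: str, tag: str) -> Path:
    """Hypothetical helper: copy every *.ini file from src_dir into
    archive_root/tag so that a complete copy appears in one atomic step."""
    src = Path(src_dir)
    root = Path(archive_root)
    final = root / tag
    if final.exists():
        # With the staging-then-rename scheme, an existing directory is complete.
        return final

    root.mkdir(parents=True, exist_ok=True)
    # Stage next to the final location (same filesystem) so the rename is atomic.
    staging = Path(tempfile.mkdtemp(prefix=f".{tag}.", dir=root))
    try:
        for ini in sorted(src.glob("*.ini")):
            shutil.copy2(ini, staging / ini.name)
        # Atomic on POSIX: readers never see a half-filled final directory.
        os.rename(staging, final)
    except BaseException:
        # Clean up if an exception interrupts the copy. A hard kill (SIGKILL)
        # can leave a stale hidden staging directory, but never a partial
        # final directory.
        shutil.rmtree(staging, ignore_errors=True)
        raise
    return final
```

With this pattern, a daqd restart that interrupts the copy leaves the final directory absent rather than half-filled, so the next run simply copies again instead of trusting an incomplete archive.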

When the 0-leg was restarted, we rebooted the data concentrator (gds0) to make sure all of its interfaces came up properly after a boot. We did this because some of the machines (including at least one of the gds machines) had not come back with all of their interfaces enabled.

Overnight, FW0 ran out of memory and restarted itself many times. Jonathan found the process that was consuming the extra memory and killed it. We expect FW0 to be stable from now on.