[Joan-Rene Merou, Alicia Calafat, Sheila Dwyer, Robert Schofield, Anamaria Effler]

We have identified video cameras located in the PSL enclosure, operating at an NTSC-derived frame rate of approximately 29.97 frames per second, as the most probable source of the near-30 Hz comb (fundamental at 29.96951 Hz) observed in DARM.

This is a continuation of the work to mitigate the set of near-30 Hz and near-100 Hz combs described in Detchar issue 340 and lho-mallorcan-fellowship/-/issues/3, as well as the work in alogs 88089, 87889, 87414 and 88433.

Continuing the magnetometer search described in alog 88433, we found that the 29.96951 Hz line was very strong in the H1-PSL-R2 rack 12 V power supply.

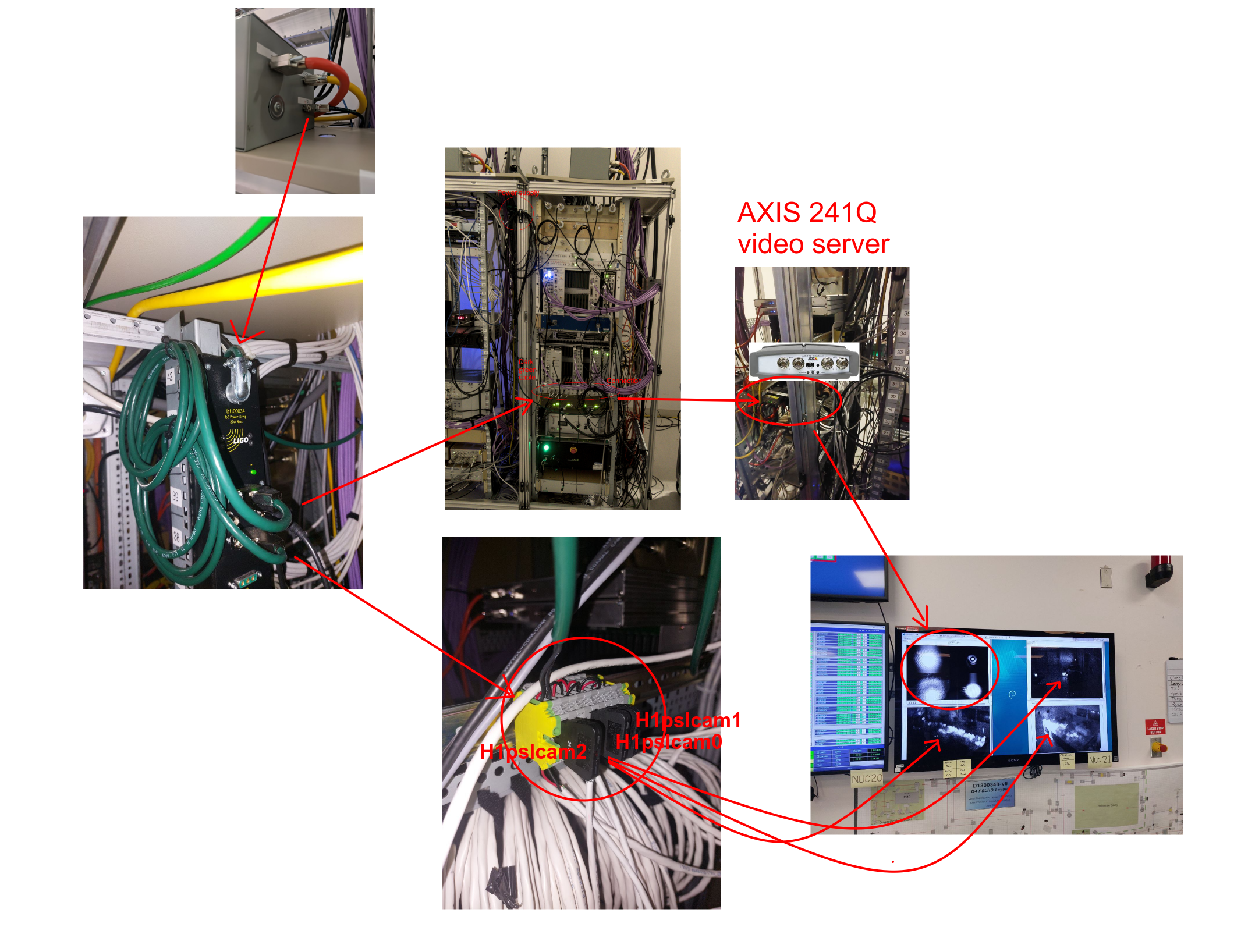

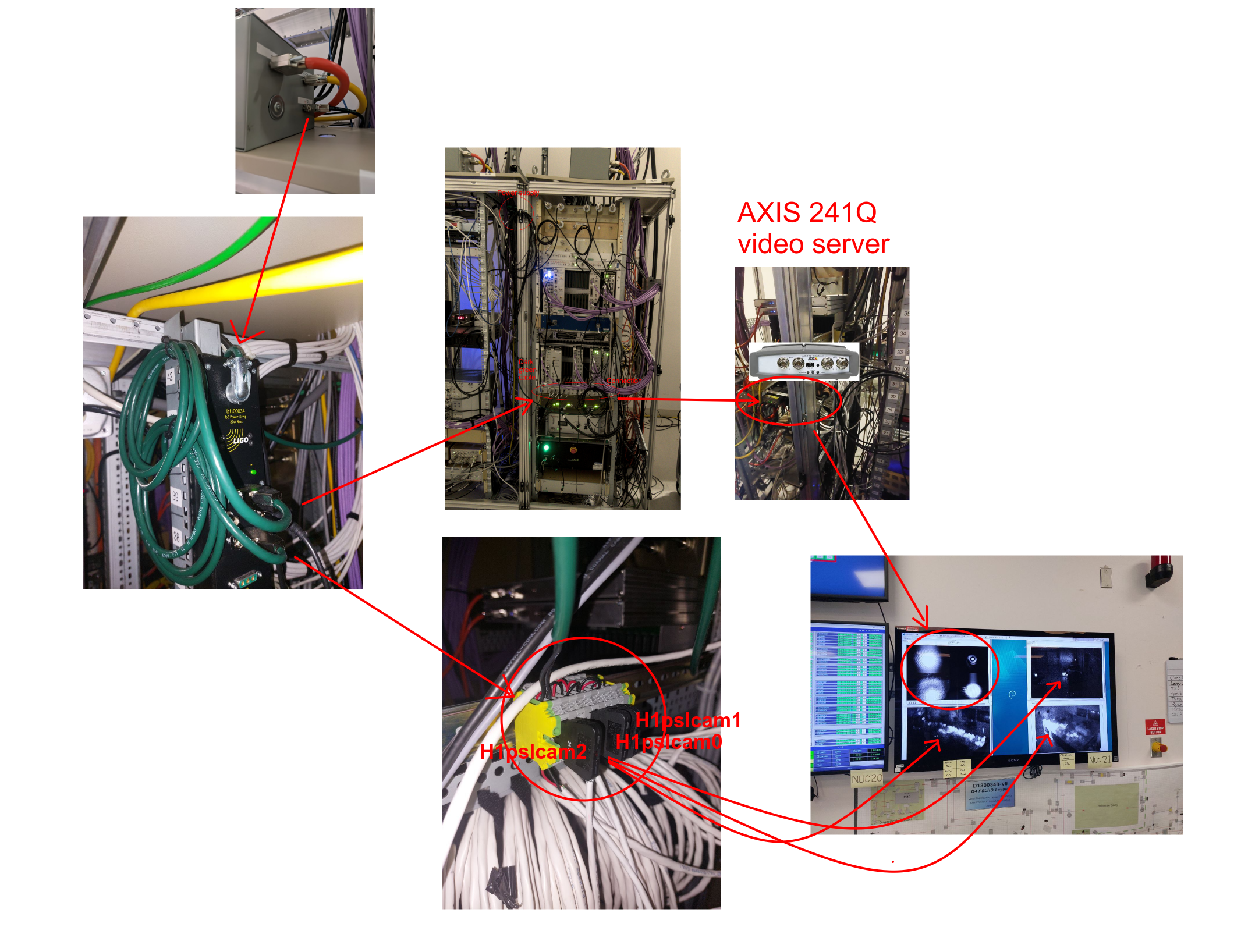

Tracing the cabling from this power supply revealed two distinct paths. The first is a cable feeding a chassis in the H1-PSL-R1 rack, which connects to an Axis 241Q video server. This server supplies four beam cameras located inside the PSL enclosure. The second is a cable feeding a small fused distribution unit, from which three white cables emerge. At least two of these enter the PSL enclosure directly, and the third appears to route upward toward the entrance area. These cables were identified as supplying three additional cameras in the PSL enclosure.

The relationship between the power supply, fused distribution unit, and cameras is illustrated in the diagram below.

On 2025-12-17, we went with Robert to disconnect the green power supply cable. Disconnecting it from the 12 V power supply caused the 29.96951 Hz line to disappear from the magnetometer. Reconnecting the cable resulted in a gradual reappearance of the line. Disconnecting individual cameras connected to the Axis 241Q server produced no significant change in the comb amplitude, suggesting these cameras are not the dominant contributors.

In contrast, removing the three fuses from the fused distribution unit immediately eliminated the 29.96951 Hz line. Upon returning to the control room and restarting the cameras via the standard startup procedure (shown to us by Oli Patane), the line reappeared promptly.

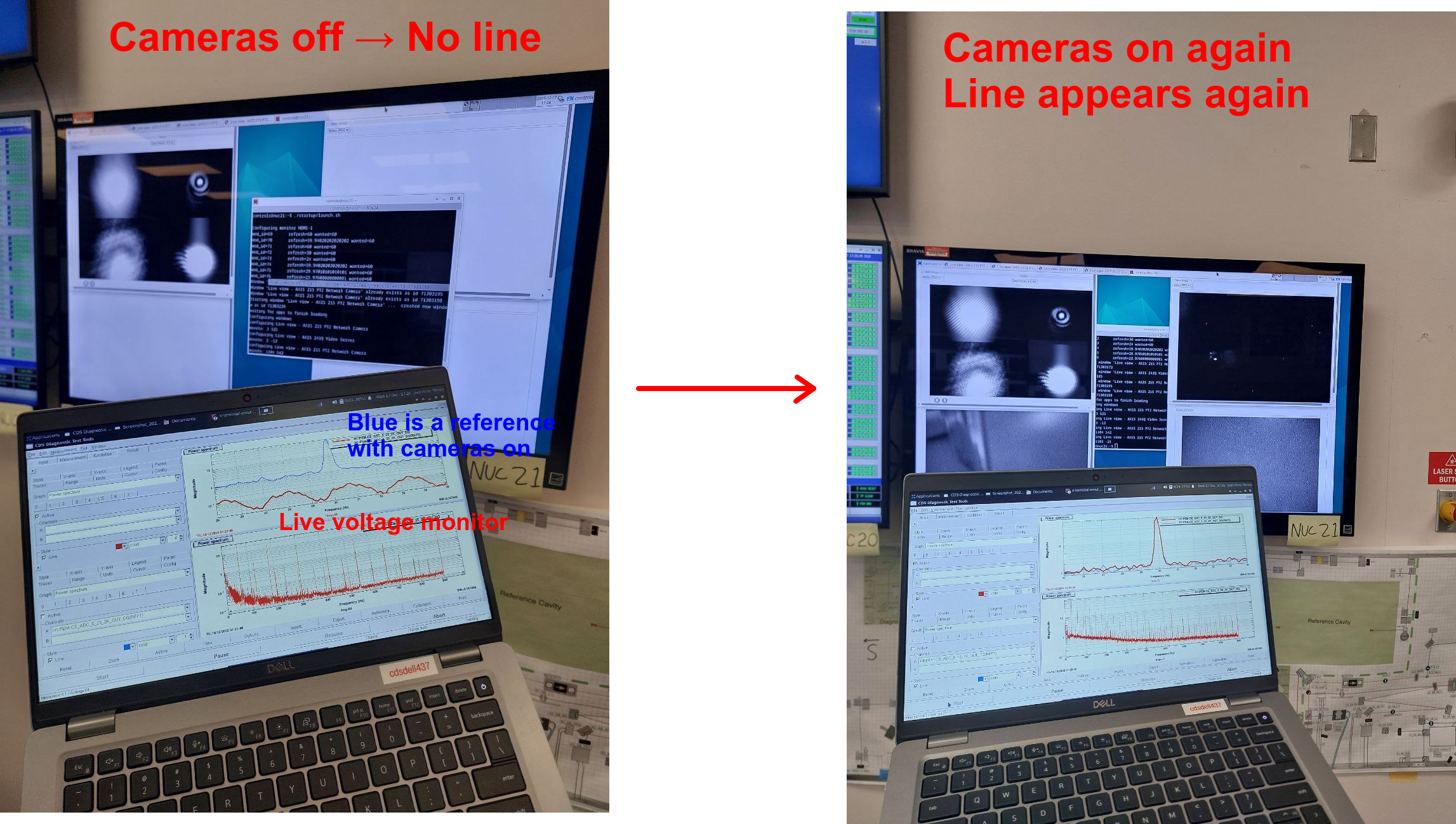

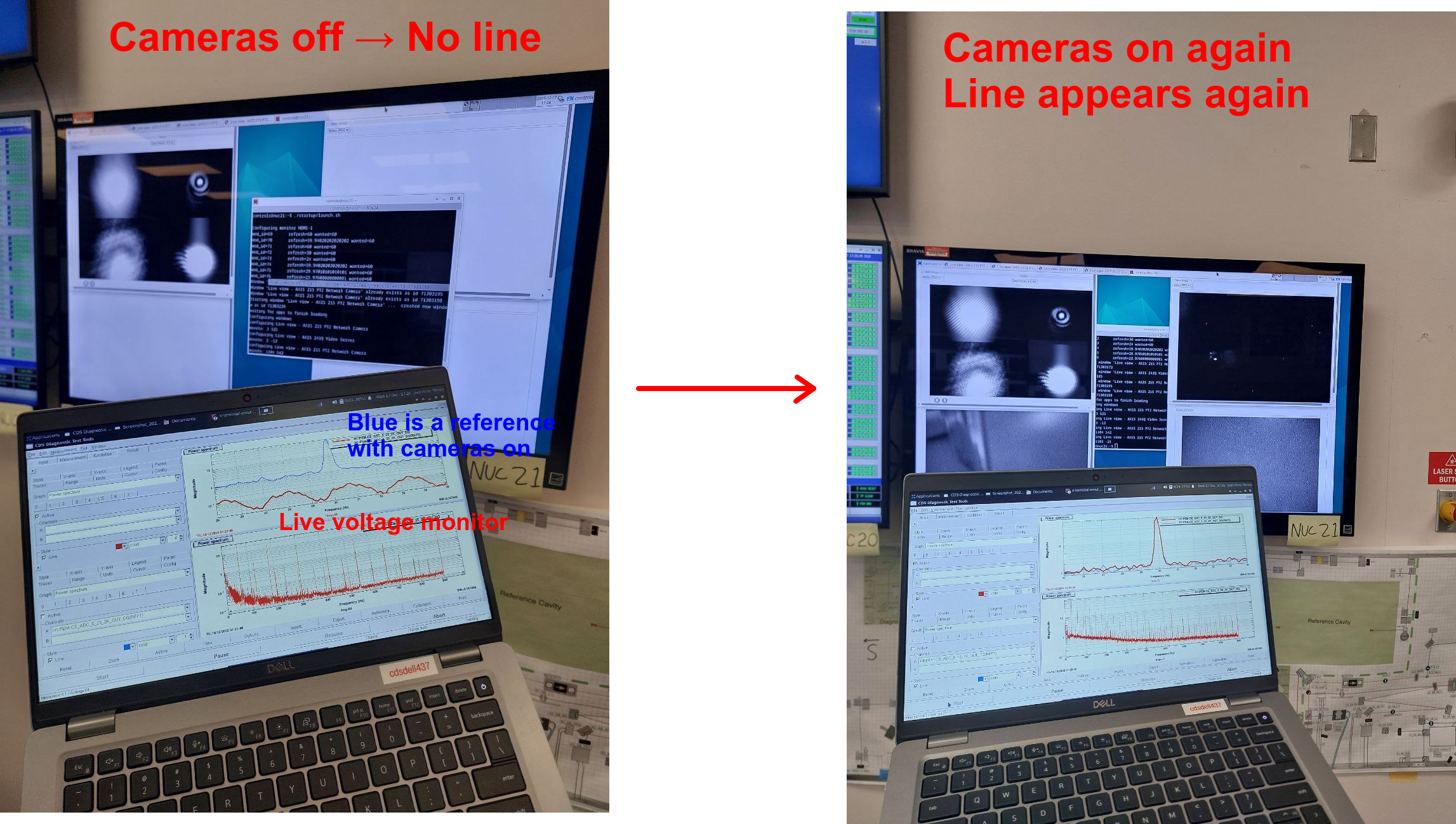

This sequence strongly implicates the three cameras powered through the fused distribution unit as the primary source of the comb. The photographs show the difference between having the three cameras on and off, with the peak as seen in the voltage monitor plugged into the 12 V power supply.

According to /opt/rtcds/userapps/release/cds/h1/scripts/fom_startup/nuc21/launch.yaml, the three cameras identified above are Axis 215 PTZ Network Cameras. The Axis 215 PTZ has a maximum frame rate of 30 fps and NTSC-compatible video timing, with a nominal NTSC frame rate of 29.97 frames per second.

NTSC (National Television System Committee) is an analog color encoding system used in television systems in Japan, the United States and other parts of the Americas. An NTSC picture is made up of 525 interlaced lines and is displayed at a rate of 29.97 frames per second. This frequency matches the observed comb fundamental (29.96951 Hz) to within 1 mHz, which is within the expected drift for video timing systems.
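The frequency match can be checked with a few lines of arithmetic. This is only a sketch: it assumes the cameras follow the standard NTSC color frame rate of 30000/1001 Hz, which this entry does not verify directly, and the variable names are illustrative.

```python
# Nominal NTSC color frame rate: 30 Hz slowed by a factor of 1000/1001.
# Assumption: the Axis 215 PTZ timing follows this nominal value.
ntsc_frame_rate_hz = 30000 / 1001

# Comb fundamental quoted in this entry.
observed_comb_hz = 29.96951

# Offset of the observed line from the nominal rate, absolute and fractional.
offset_hz = ntsc_frame_rate_hz - observed_comb_hz
offset_ppm = offset_hz / ntsc_frame_rate_hz * 1e6

print(f"NTSC nominal : {ntsc_frame_rate_hz:.5f} Hz")
print(f"Observed comb: {observed_comb_hz:.5f} Hz")
print(f"Offset       : {offset_hz * 1e3:.2f} mHz ({offset_ppm:.1f} ppm)")
```

Under that assumption the offset comes out at roughly half a millihertz, a few tens of ppm at most, which is the scale one would expect from a free-running oscillator.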

Some sources:

- Axis 215 PTZ Network Camera data sheet

- Axis 215 PTZ Network Camera technical details

- NTSC encoding system information

Another clue that points to an electronic origin is that the line width is noted as 0.00042 Hz towards each side in the O4ab lines list (LIGO-T2500212). This means that the fractional line width is around 30 ppm, consistent with what we expect from an electronic element with its own clock (not tied to the GPS system) that is not ultra precise.
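The ~30 ppm figure can be reproduced if it is read as the full line width divided by the line frequency. That reading is our assumption for this sketch, not something stated in the lines list.

```python
# Values quoted in this entry (O4ab lines list, LIGO-T2500212).
line_frequency_hz = 29.96951
half_width_hz = 0.00042  # width towards each side of the line

# Assumption: "ppm" means full width over center frequency.
full_width_hz = 2 * half_width_hz
fractional_width_ppm = full_width_hz / line_frequency_hz * 1e6

print(f"Full width      : {full_width_hz * 1e3:.2f} mHz")
print(f"Fractional width: {fractional_width_ppm:.0f} ppm")
```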

It is possible that the way the cameras are connected at LLO is different from LHO, or that the cameras themselves are different, which would explain why the comb is present at LHO but not at LLO. Note that there is a line at LLO closer to 30 Hz, but not at 29.97 Hz.

There are various changes that can mitigate the comb. Turning off the cameras would be the first one. Another idea is to turn off only two of the cameras, the one in the entrance to the PSL enclosure and one of those in a top corner of the PSL enclosure, leaving one of the three on but significantly reducing the strength of the line. These cameras have an upper limit of 30 fps. Getting more modern cameras at 60 fps, thus moving the line to 60 Hz, could also be an idea.

Using the camera controls, we also checked what happens if we change the frame rate of the cameras. When we reduced it from 30 frames per second to 1 frame per second, peaks appeared at every 1 Hz, while the peak at 29.97 Hz became much lower.

(Red line is a live voltage monitor on the power supply and blue one is a reference before changing camera settings)

On 2025-12-18, we further investigated which of the three Axis 215 cameras is responsible for most of the peak amplitude. These cameras are h1pslcam0, h1pslcam1, and h1pslcam2. In their default settings and default orientation, the peak amplitude in the voltage monitor PSD was around 150 1/Hz. We then disconnected all three and reconnected them one at a time:

- With only h1pslcam0 on, the peak amplitude was around 60-80 1/Hz.

- With only h1pslcam1 on, the peak amplitude was around 40-50 1/Hz.

- With only h1pslcam2 on, the peak amplitude was around 10 1/Hz.

- With no cameras on, the peak cannot be seen.

Hence, it would appear that not all cameras have the same effect on the peak amplitude. h1pslcam2 is in the PSL enclosure pointing towards the table. Of the other two, one also points towards the table and the other is in the entrance room to the enclosure. Therefore, since h1pslcam2 has the smallest peak amplitude and has the same function as the other camera in the enclosure, an option could be to leave only this camera on and turn off the other two.
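As a rough consistency check, the single-camera peak heights listed above can be compared with the all-on value. The sketch below assumes the PSD peak heights of the individual cameras simply add, which this entry does not establish; the variable names are illustrative.

```python
# Peak heights read off the voltage monitor PSD (1/Hz), as (low, high) ranges.
all_on = 150
single_camera = {
    "h1pslcam0": (60, 80),
    "h1pslcam1": (40, 50),
    "h1pslcam2": (10, 10),
}

# Assumption: contributions add linearly in the PSD.
sum_low = sum(low for low, _ in single_camera.values())
sum_high = sum(high for _, high in single_camera.values())

for name, (low, high) in single_camera.items():
    mid = (low + high) / 2
    print(f"{name}: ~{mid:.0f} 1/Hz, about {mid / all_on:.0%} of the all-on peak")

print(f"Sum of single-camera peaks: {sum_low}-{sum_high} 1/Hz "
      f"(all on: ~{all_on} 1/Hz)")
```

The summed range is somewhat below, but comparable to, the all-on value, which is compatible with the three cameras together accounting for most of the peak, given how approximate the readings are.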

Note that the near-100 Hz peaks do not disappear from the voltage monitor if we turn off the cameras, which probably points to a different source.

We further tested the settings of h1pslcam2 to see what else decreases the peak amplitude. Turning off (unchecking) the "de-interlacing" option slightly decreased the peak amplitude, from around 10 1/Hz to 7 or 8 1/Hz. Reducing the camera frame rate from 30 fps to 1 fps reduced the peak a bit further, to 5 1/Hz. Reducing the low-light gain in the camera advanced settings also appeared to reduce the peak amplitude slightly, to around 4 1/Hz. Note that reducing the frame rate to 1 fps also creates small peaks every 1 Hz in the voltage monitor, but these are smaller than the one near 30 Hz:

(blue is a reference with the three cameras on)

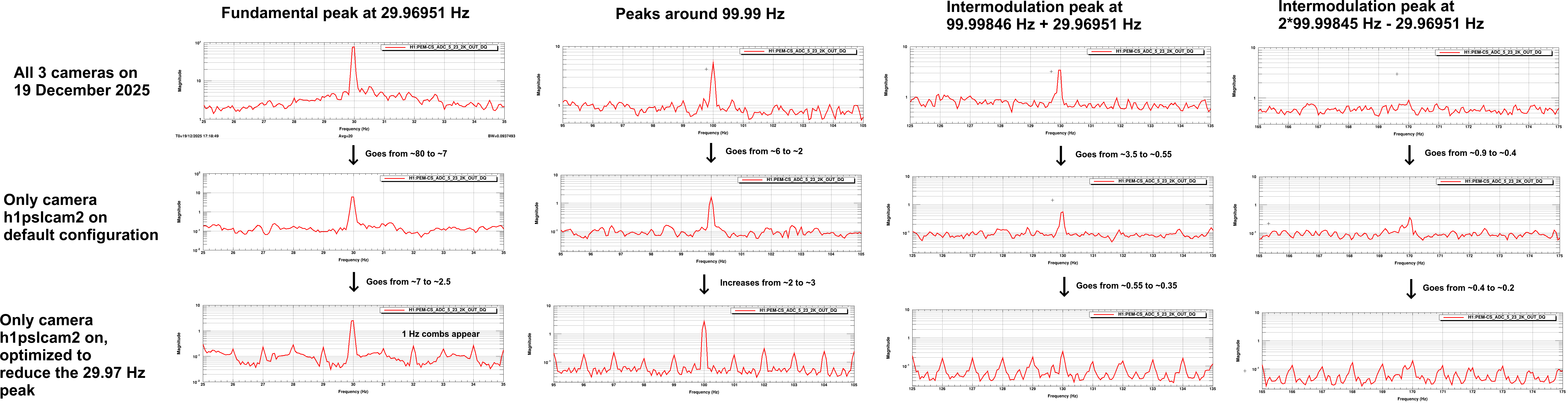

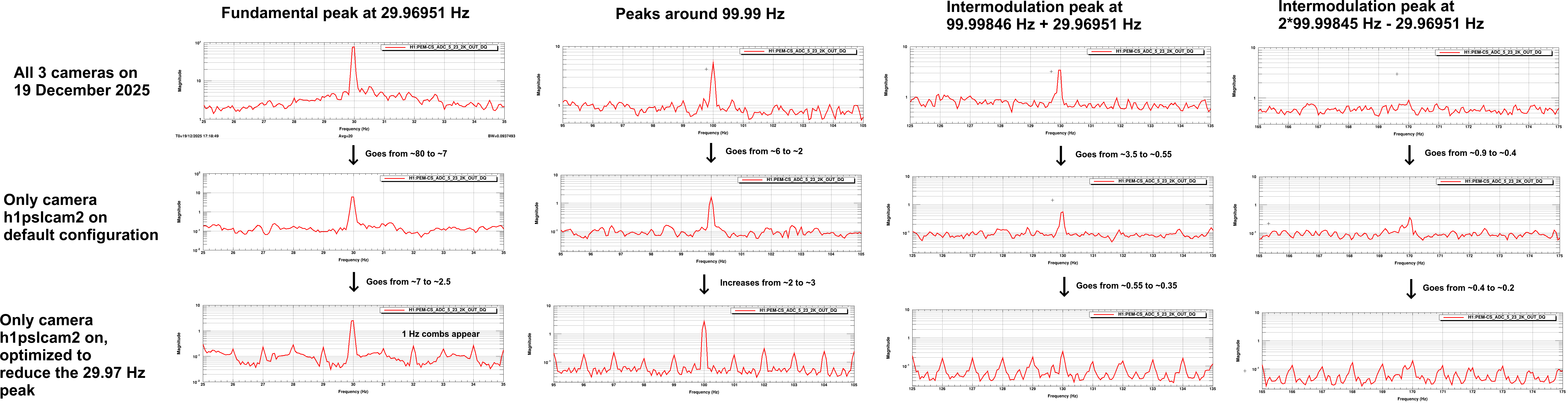

On 2025-12-19 we produced the following table of plots showing the height of the peak at 29.96951 Hz, as well as the near-100 Hz peak at 99.998455 Hz and two of their intermodulation products, one at 99.998455 Hz + 29.96951 Hz and another at 2 × 99.998455 Hz - 29.96951 Hz. Each row represents a different configuration:

- The first row represents the actual default status with all cameras on in their default configuration.

- The second row shows only h1pslcam2 on, in its default configuration.

- The third row shows only h1pslcam2 on, with the configuration we found optimal for reducing the 29.97 Hz peak (1 fps instead of 30 fps, no de-interlacing, low-light max gain of 0 dB and manual exposure control).

Note that on 2025-12-19, while the reduction factor between the first and last configurations at 29.96951 Hz was still ~30, the peak began at a height of ~80 and went down to ~2.5, instead of going from ~150 to ~5. As can be seen in the table of figures below, the near-100 Hz peak does not change much, while the intermodulation products do become smaller as we reduce the height of the 29.96951 Hz line.
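For reference, the two intermodulation frequencies follow directly from the two fundamentals quoted above. A minimal sketch (variable names are illustrative):

```python
# Fundamentals quoted in this entry.
f_cam_hz = 29.96951    # near-30 Hz comb fundamental
f_100_hz = 99.998455   # near-100 Hz comb fundamental

# The two intermodulation products tracked in the table of plots.
sum_product_hz = f_100_hz + f_cam_hz
diff_product_hz = 2 * f_100_hz - f_cam_hz

print(f"f_100 + f_cam   = {sum_product_hz:.6f} Hz")
print(f"2*f_100 - f_cam = {diff_product_hz:.6f} Hz")
```

These land near 129.97 Hz and 170.03 Hz respectively.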

This appears to be a reasonable configuration in which to leave the cameras if we want one on: h1pslcam0 and h1pslcam1 turned off, with h1pslcam2 and the beam cameras turned on. For h1pslcam2: "de-interlacing" unchecked, frame rate set to 1 fps, resolution set to 4CIF (reducing the resolution does not appear to have much influence), low-light gain reduced, optimally to 0 dB, and exposure control set to manual with a shutter speed of 1/60 s. This configuration is expected to reduce the peak amplitude in the voltage monitor from around 150 1/Hz to 5 1/Hz. This factor of 30, if the same reduction takes place in DARM, should significantly reduce the peak and lessen its effect on CW searches.
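The quoted reduction factor can be recomputed from the approximate peak heights read on the two days. The numbers are the rough values given in this entry, so the result is only indicative.

```python
# Approximate peak heights in the voltage monitor PSD (1/Hz):
# (all cameras on, default settings) -> (only h1pslcam2 on, optimal settings)
measurements = {
    "2025-12-18": (150.0, 5.0),
    "2025-12-19": (80.0, 2.5),
}

reduction = {}
for date, (before, after) in measurements.items():
    reduction[date] = before / after
    print(f"{date}: {before:g} -> {after:g} 1/Hz, factor ~{reduction[date]:.0f}")
```

Both days give a factor close to 30 even though the absolute heights differ by about a factor of two.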

Note that, to avoid affecting CW searches entirely, the best option would be to simply turn off the cameras. Looking at the O4ab averaged plots used to create the lines lists, the peak at 29.96951 Hz is over two orders of magnitude stronger than the surrounding noise. The peak is expected to be smaller in O4c given the change in the H1:SUS-ITMY_L3_ESDAMON_DC_OUT16 bias studied in alog 87414, but only turning off the cameras would ensure getting rid of it.

In conclusion, the evidence strongly supports that the near-30 Hz comb observed in DARM originates from NTSC-timed video cameras in the PSL enclosure, specifically the Axis 215 PTZ cameras powered by the H1-PSL-R2 12 V supply. Powering them off and on, fuse removal, frame-rate manipulation, and frequency matching all independently confirm this attribution. Optimally, we recommend turning the cameras off or changing them. If one should stay on, we recommend having only h1pslcam2 on with the optimal configuration described above.
