Travis, Jordan

We were able to leak check the new JAC viewport on the -Y door of HAM1 today. Since this is an o-ring sealed viewport, we bagged it before spraying helium, leaving the conflat seal exposed, in an attempt to reduce helium diffusion through the o-ring.

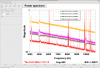

With the helium leak detector backing the main turbopump, the helium background was ~4E-10 Torr-l/s. While we sprayed the flange/leak check ports, the helium signal rose slowly (over 30-60 seconds) to a maximum of ~1E-9 Torr-l/s, which is typical of permeation through an o-ring (see attached picture).
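For reference, the size of that rise can be worked out from the two readings above. The snippet below is only a rough sketch of the arithmetic: the function name `net_helium_signal` is made up for illustration, and it assumes both readings are in the same units (Torr-l/s) and taken on the same leak detector range.

```python
def net_helium_signal(background, peak):
    """Return (net rise, peak/background ratio) for two leak detector readings.

    Both inputs are assumed to be in the same units (here Torr-l/s).
    """
    if background <= 0:
        raise ValueError("background must be positive")
    return peak - background, peak / background


# Approximate readings quoted in this entry.
net, ratio = net_helium_signal(background=4e-10, peak=1e-9)
print(f"net rise ~{net:.1e} Torr-l/s, peak is ~{ratio:.1f}x background")
```

This only quantifies the rise. Attributing it to o-ring permeation rests on the slow 30-60 second response noted above, not on the magnitude.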

Once leak checking was completed, we disconnected the leak detector from the main turbopump cart, isolated the turbopump from the chamber, and moved the SS500 cart closer to HAM2 to allow room for the new JAC table to be moved into place.

HAM1 continues to be pumped via turbopump, while the annulus volume is still being pumped by both the aux carts and the annulus ion pumps.