TL;DR - Don't worry about the new models and screens in /userapps/trunk/sus - Brian is developing these for the OSEM estimator project and none of these are (currently) linked to any observatory model.

--

FYI - As part of the project to see whether we can reduce the noise injected by the OSEMs, we are developing new models and MEDM screens for the HLTS. This ECR is tracked in FRS ticket 32526.

We are running these at Stanford for testing. We are putting them into userapps because we will be trying them on a triple at LHO soon (details will be in a separate log; they are still somewhat in flux).

As such, I've created several new files in userapps. These are not linked to anything at either site, and I'm posting this note to head off any concern. They are still in development, so I suggest waiting a bit before looking at them.

New Files:

/userapps/trunk/sus/common/models/

HLTS_MASTER_W_EST.mdl - library part for M1 with the estimator calculation

SIXOSEM_T_STAGE_MASTER_W_EST.mdl - library part for the upgraded HLTS model with the estimator included.

/userapps/trunk/sus/common/medm/hxts/

SUS_CUST_HLTS_OVERVIEW_W_EST.adl - new overview screen with estimator

These files are copies of the existing parts as of March 2025, with _W_EST appended to each copy's file name.

/userapps/trunk/sus/common/medm/estim/ - directory of new sub-screens for the estimator

Updated files:

/userapps/trunk/isi/common/models/

blend_switch_library.mdl - I added a new part to this library that allows smooth switching between OSEM damping, estimator damping, and OFF.
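The internals of the new blend switch part are not described in this log. As a rough illustration only, the Python sketch below shows one common way to switch smoothly between drive sources: each source gets a weight, the weights ramp linearly from their current values to the requested target over a fixed number of samples, and OFF contributes zero drive. The class name, the ramp length, and the linear ramp are all assumptions for illustration, not a description of the actual Simulink part.

```python
SOURCES = ("OSEM", "EST", "OFF")


class BlendSwitch:
    """Hypothetical crossfade between OSEM damping, estimator damping and OFF.

    Illustrative sketch only; not the blend_switch_library.mdl implementation.
    """

    def __init__(self, ramp_samples: int = 4):
        self.ramp_samples = ramp_samples
        # Start fully on OSEM damping (an assumption for this sketch).
        self.weights = {"OSEM": 1.0, "EST": 0.0, "OFF": 0.0}
        self._start = dict(self.weights)
        self._target = dict(self.weights)
        self._step = ramp_samples  # no ramp in progress

    def request(self, source: str) -> None:
        """Begin a ramp from the current weights toward the requested source."""
        if source not in SOURCES:
            raise ValueError(f"unknown source {source!r}")
        # Ramping from the *current* weights keeps the output continuous
        # even if a new request arrives mid-ramp.
        self._start = dict(self.weights)
        self._target = {s: (1.0 if s == source else 0.0) for s in SOURCES}
        self._step = 0

    def update(self, osem_drive: float, est_drive: float) -> float:
        """Advance one sample and return the blended damping drive."""
        if self._step < self.ramp_samples:
            self._step += 1
            frac = self._step / self.ramp_samples
            self.weights = {
                s: (1.0 - frac) * self._start[s] + frac * self._target[s]
                for s in SOURCES
            }
        # OFF has no input, so its weight only scales the output toward zero.
        return self.weights["OSEM"] * osem_drive + self.weights["EST"] * est_drive
```

For example, after `sw.request("EST")`, successive calls to `sw.update(1.0, 3.0)` with a 4-sample ramp step the output from the OSEM drive to the estimator drive in equal increments, and the weights always sum to one, so no step is ever applied to the suspension.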

I'm running this from the new top-level model in

/userapps/trunk/isi/s1/s1suspr3.mdl

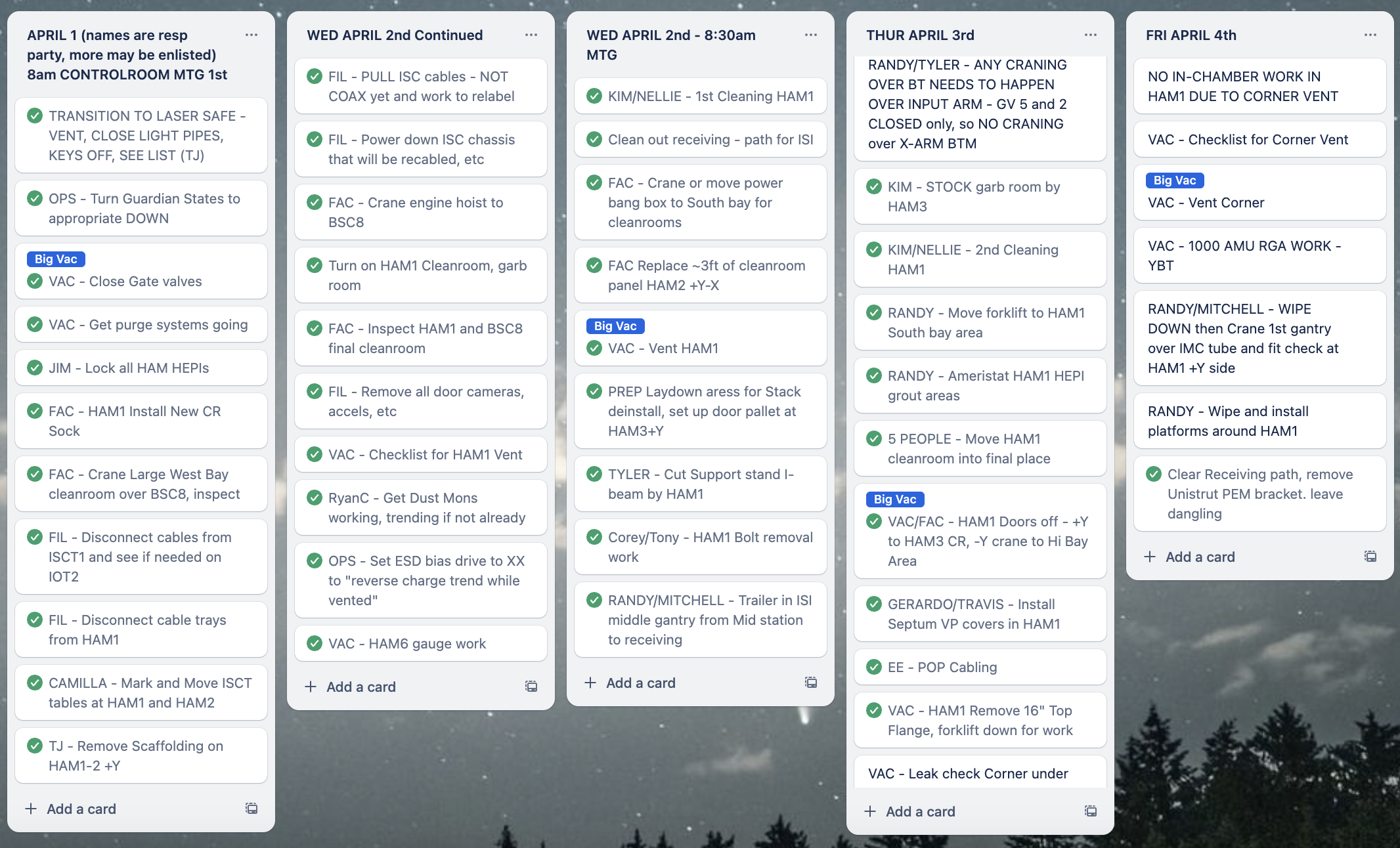

(Travis, Tyler, Randy, Jordan, Janos, Gerardo)

Something to note: during the removal of the -Y door for HAM1, a hair (see attached photo) was found embedded in one of the annulus O-rings. Good eye, Travis. Maybe we are due for a contamination control refresh.