[Marie, Aidan, Alexei, Dan, TJ]

As mentioned above in alog 42171, we set up a ring heater measurement last night to observe the response of the HWS. The ring heater was switched on for 8 hours with 2 W total power dissipated (1 W in the upper segment, 1 W in the lower segment). The results are encouraging:

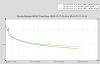

In figure 1, we can clearly see the effect of the thermal transient from the ring heater on the spherical power of the HWS beam phase. The measured amplitude of the maximum phase deformation (~ -80 udiopters) agrees with the model prediction once we roughly correct for the magnification of the beam on the test mass (we measured it yesterday to be about 23.5, instead of the 20.5 used in the model). This suggests that the HWS may be probing the test mass correctly. However, a more careful analysis is required.
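As a rough illustration of the magnification correction mentioned above, here is a minimal sketch. It assumes that the analysis converts defocus at the sensor to spherical power at the test mass by dividing by the square of the magnification, so that a value computed with the wrong magnification is rescaled by (M_assumed / M_measured)^2. Both the quadratic scaling and the direction of the correction are assumptions on our side, not something taken from the HWS code, and the function name is hypothetical.

```python
def rescale_spherical_power(power, mag_assumed, mag_measured):
    """Rescale a spherical power computed with mag_assumed to mag_measured.

    Assumption: spherical power referred to the test mass scales as
    1 / magnification**2, so the correction factor is
    (mag_assumed / mag_measured)**2.
    """
    return power * (mag_assumed / mag_measured) ** 2


# Illustrative input only: a value in udiopters computed with M = 20.5,
# rescaled to the measured magnification of about 23.5.
factor = rescale_spherical_power(1.0, 20.5, 23.5)
example = rescale_spherical_power(-80.0, 20.5, 23.5)
```

With these two magnifications the factor is about 0.76, so the correction is at the 25% level and cannot be neglected when comparing with the model.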

The spherical power from the HWS shows a large drift at the beginning. We think it is partly due to the thermal stabilization of the HWS sensor itself. The total drift over the first 6 hours is about 40 udiopters, which is large compared to the effect of the ring heater. There is also a cross-coupling between the spherical power and prism X, on top of a beam drift indicated by the prism X/Y signals (corresponding to the tilts of the test mass).
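One simple way to quantify such a drift before looking at the ring heater response is a straight-line least-squares fit over the initial stretch of data. The sketch below uses synthetic data and assumes a purely linear drift, which is a simplification (a thermal settling is more likely exponential). The function names are hypothetical.

```python
def fit_line(t, y):
    """Ordinary least-squares fit of y = slope * t + intercept."""
    n = len(t)
    t_mean = sum(t) / n
    y_mean = sum(y) / n
    sxx = sum((ti - t_mean) ** 2 for ti in t)
    sxy = sum((ti - t_mean) * (yi - y_mean) for ti, yi in zip(t, y))
    slope = sxy / sxx
    intercept = y_mean - slope * t_mean
    return slope, intercept


def remove_drift(t, y, slope, intercept):
    """Subtract the fitted line from the data."""
    return [yi - (slope * ti + intercept) for ti, yi in zip(t, y)]


# Synthetic example: 40 udiopters of drift spread over 6 hours.
hours = [0.1 * k for k in range(61)]
spherical = [5.0 + (40.0 / 6.0) * h for h in hours]

slope, intercept = fit_line(hours, spherical)
total_drift = slope * (hours[-1] - hours[0])
residual = remove_drift(hours, spherical, slope, intercept)
```

Fitting only the data taken before the ring heater is switched on avoids absorbing part of the thermal transient into the drift estimate.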

In figures 2 to 5, we can see the wavefront measurements from the HWS at different stages: after the sensor thermal drift (+6h on the time scale of figure 1), when the deformation is maximum (+9h), before the ring heater is switched off (+14h) and at the end of the measurement (+17h). The code needs to be changed so that phase differences below 90 nm are more clearly visible.

****

Today we worked on the beam optimization by adjusting the beam alignment and the initial iris aperture. One issue is that an axisymmetric reduction of the iris aperture does not translate into a symmetric effect in the return beam power distribution. This indicates that the beam is clipping and that we are not imaging its center. However, the number of centroids has increased by about 30% with respect to yesterday, and the intensity homogeneity on the HWS has also improved.

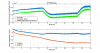

Checking the performance of the setup with ring heater tests is inefficient because of the time they take, so today we looked for faster figures of merit. One of them could be the cross-coupling between prism X/Y and the spherical power. If the coupling is low, the beam is more likely to be aligned on the test mass. We injected a yaw oscillation at ETMY M0 (10 urad at 0.03 Hz). While optimizing the homogeneity of the power distribution of the HWS return beam, we could see a reduction of the cross-coupling between prism X and the spherical power. It was reduced by a factor of 1.5 (with 120 udiopters / 34 urad) compared to last night. We scheduled a ring heater test for tonight to confirm whether the situation has improved.
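A possible way to turn this into a number is to demodulate both the prism X and spherical power time series at the injection frequency and take the ratio of the amplitudes. The sketch below works on synthetic data. The 1 Hz sample rate, the phase offset and the function name are assumptions, and the record is chosen to contain an integer number of oscillation cycles so that the projection is unbiased.

```python
import math


def amplitude_at(samples, fs, f0):
    """Amplitude of the f0 component, from sine/cosine projections."""
    n = len(samples)
    mean = sum(samples) / n
    in_phase = 0.0
    quadrature = 0.0
    for k, value in enumerate(samples):
        phase = 2.0 * math.pi * f0 * k / fs
        in_phase += (value - mean) * math.cos(phase)
        quadrature += (value - mean) * math.sin(phase)
    return 2.0 * math.hypot(in_phase, quadrature) / n


fs = 1.0    # assumed sample rate in Hz
f0 = 0.03   # injection frequency in Hz
n = 1000    # 30 full cycles at 0.03 Hz

# Synthetic signals: prism X in urad, spherical power in udiopters.
prism_x = [34.0 * math.sin(2.0 * math.pi * f0 * k / fs) for k in range(n)]
spherical = [5.0 + 120.0 * math.sin(2.0 * math.pi * f0 * k / fs + 0.4)
             for k in range(n)]

# Cross-coupling in udiopters per urad.
coupling = amplitude_at(spherical, fs, f0) / amplitude_at(prism_x, fs, f0)
```

Because the estimate only uses the component at the injection frequency, slow drifts and broadband sensor noise contribute little, which is what makes it usable over a few minutes of data.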

Another important parameter is the sensor noise floor at high frequency (time scale of minutes). The noise on the sensor is higher than expected, and this will limit our ability to see high spatial resolution changes. After today's work, the rms of the noise over 10 minutes is reduced by a factor of 3 (see figure 6).
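For reference, an rms over 10-minute stretches can be computed as in the sketch below. It removes the mean of each window and uses non-overlapping windows. The sample rate, the synthetic data and the function name are assumptions for illustration, not the way figure 6 was produced.

```python
import math


def windowed_rms(samples, fs, window_s=600.0):
    """Mean-removed rms of consecutive, non-overlapping windows."""
    n_win = int(round(window_s * fs))
    result = []
    for start in range(0, len(samples) - n_win + 1, n_win):
        chunk = samples[start:start + n_win]
        mean = sum(chunk) / n_win
        variance = sum((x - mean) ** 2 for x in chunk) / n_win
        result.append(math.sqrt(variance))
    return result


fs = 1.0  # assumed sample rate in Hz

# Synthetic noise stand-ins: alternating values with an arbitrary offset.
before = [10.0 + (3.0 if k % 2 == 0 else -3.0) for k in range(1200)]
after = [10.0 + (1.0 if k % 2 == 0 else -1.0) for k in range(1200)]

rms_before = windowed_rms(before, fs)
rms_after = windowed_rms(after, fs)
improvement = rms_before[0] / rms_after[0]
```

Removing the mean per window keeps a slow offset from inflating the rms, but a drift within the window would still contribute and may need to be fitted out first.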

We still need to test the impact of our system on the ALS beam and vice versa.

We forgot to remove a jumper in the Beckhoff rack and to reinstall fuses, upon which the MEDM status showed yellow for status transition. We were still concerned about the loud noise we heard. Kyle and Gerardo noted a misalignment in the limit switch shaft and its corresponding pulley when a straight edge is placed next to mating gear pulley. The misalignment is slight (may have always been there), and we are not concerned about the pulley belt walking off. The valve opened successfully and MEDM shows it as green.