At ~12:40 PST the PSL HPO shut off due to a trip of the HPO power watchdog; the FE was still running. The laser restarted without issue, but once again we had trouble engaging the injection locking. To get reasonable power out of the HPO we had to increase the DB1 operating current again, this time to 59.5 A (from 56.6 A). Unfortunately this did not help the injection locking, so we let the laser warm up for an hour or so to see if that would help. It didn't. Peter and I therefore went into the PSL enclosure at about 14:30 PST to check the alignment onto the injection locking PD. The PDH error signal looked slightly out of phase but otherwise fine; however, no realignment of the beam onto the PD improved the injection locking. To see what would happen, Peter removed the pump light filter from the front of the PD, and the injection locking engaged almost immediately. The lock broke when Peter reinstalled the pump light filter, but it relocked quickly. Possibly not enough light on the PD?

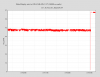

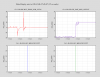

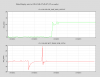

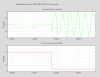

Looking into the trip, it appears that a rapid decay of DB1 caused a drop in laser power, which eventually triggered the power watchdog. This also seems to have caused last Saturday's trip. The attached trends cover both laser trips: the first shows the power out of the HPO and the second shows the relative power (in %) of the four HPO DBs. DB1 was clearly decaying rapidly compared to the other three DBs, and this decay tracked the drop in HPO output power, which leads me to conclude that the DB1 decay caused both trips. (DB4 shows the same decay pattern, but its relative power output is much higher than DB1's and it is decaying more slowly, so it is unlikely to be part of the issue.) This is somewhat surprising, as DB1 was swapped out last June, while DBs 2, 3, and 4 are all original DBs installed with the PSL in 2011/2012. DB1 will need to be swapped at the next opportunity if the HPO is to survive the month.

The laser is back up and running to enable commissioning while we prepare for the diode box swap. Given the nature of these trips, any restart attempt will have to be done onsite, so if the laser trips off overnight or after hours we will look at it in the morning. However, the odds of recovering from another trip are low: we are driving DB1 pretty hard to keep the laser running, so should it trip off again I would prefer to swap the DB before attempting a restart. We will check on the laser first thing in the morning.

Filed FRS 9691 for this trip.

Also filed FRS 9692 for Saturday's trip, which appears to be identical to this one.