TITLE: 04/19 Day Shift: 15:00-23:00 UTC (08:00-16:00 PDT), all times posted in UTC

STATE of H1: Planned Engineering

INCOMING OPERATOR: None

SHIFT SUMMARY:

- Continued work on the Access System at EX

- There was an accidental trip of the squeezer laser due to an e-stop button being bumped at EX

- A couple of tours came through

- A high energy physics talk by Ulrik Uggerhoj

- Commissioning of new NEG pump with commissioners from SAES at EY

- Vent Meeting - (most info on white board)

- EMF - using a capacitive plate on viewport. Taking it down tomorrow

- HAM6 - Mode matching problems hindering progress... doors may go on in a week

LOG:

15:00 Christina noted on the EX camera, cleaning.

15:30 Sheila and Terry headed to HAM6

16:00 APS on site

16:24 Jeff K tour out to LVEA

16:39 Jeff B out to LVEA and then to Ends

16:45 Fil into CER

16:57 Access tech to EX

17:07 Chandra to EY with guests

17:12 Terry back

17:20 Peter and Fil to EX to do ALS interlock work

17:38 Gerardo out to LVEA - looking for viewport protector

17:41 Karen leaving EY

17:59 Fil and Peter back

18:14 Fil out to LVEA - PSL closet for access system

18:24 Jeff B back

18:34 Gerardo to EY

18:47 Sheila called to say the Squeezer laser had tripped

18:55 Tour group into CR

19:40 HANFORD EMERGENCY 'TAKE COVER' DRILL MESSAGE received

19:43 Peter out to PSL enclosure

20:07 DRILL MESSAGE TERMINATED

20:35 Visitor for Chandra on site

20:48 Jaime doing some Guardian re-boots

20:50 Jason and Rick out to PSL enclosure

20:51 Corey to end stations for inventory

20:52 Sheila out to HAM6

21:12 Corey heading back

21:20 Betsy and Travis heading out to EX

21:25 Chandra, et al., back out to EY

21:33 Gerardo out to HAM6

21:35 Cheryl out to PSL enclosure

21:42 Gerardo back

21:48 Fil out to LVEA to install network switch in PSL closet

21:54 Georgia and Niko out to EX

22:19 Sheila and Terry going out to HAM6

22:22 Niko and Georgia back

22:39 Travis and Betsy back

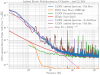

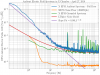

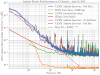

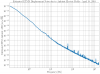

Due to an INI file mismatch, DAQ data from h1susauxb123 is currently not correct. I'll revert it back to RCG3.2 soon.

h1susauxb123 is now back at RCG3.2 with good DAQ data. Due to the recent model changes, I did not perform a new rebuild against 3.2 as this would require a DAQ restart. Instead I restored the target directory, the DAQ-INI and the GDS-PAR files from archive. I restored the 3.2 version of awgtpman as well.

While h1susauxb123 was being reverted, DAQ data from h1seih16 was again invalid for a few minutes.