Oli, Edgard.

On Tuesday, Oli tested the SR3 OSEM calibration factors mentioned in the comments of [LHO:84296] and summarized in [LHO:84531], and took a new suite of HAM5 ISI to SR3 M1_DAMP transfer functions (the measurements also live in /HLTS/H1/Common/Data/ and are dated May 21st, 2025).

The calibration factors from [LHO:84531] (taken on May 7th) included a seemingly extreme change of 2.4539x in the T1 OSEM calibration, so we wanted to double-check that the numbers actually made sense before proceeding with the OSEM estimator work.

The measured SUSPOINT V to M1_DAMP_{ V , R , P } transfer functions show that the T1 calibration factor was largely correct.

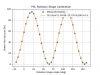

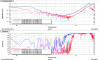

The first attachment shows the SUSPOINT V to M1_DAMP_{ V , R , P } transfer functions measured on May 7th, before applying the new OSEMINF gains. There is a very clear cross-coupling from V to R, which is mediated by the T1 OSEM in SR3. Dividing the length-to-angle transfer functions by the length-to-length one at 10 Hz gives a sense of the cross-coupling: 3.3 rad/m for R and 0.65 rad/m for P.
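The 10 Hz ratio described above can be sketched as follows. This assumes the measured transfer functions have already been exported as a shared, increasing frequency vector plus complex response arrays; the helper name `coupling_at` and the linear interpolation of magnitudes are illustrative choices, not necessarily how the numbers in this entry were obtained.

```python
import numpy as np


def coupling_at(freq_hz, tf_cross, tf_direct, f0=10.0):
    """Return |tf_cross| / |tf_direct| evaluated at f0 (Hz).

    Hypothetical helper: freq_hz must be increasing, and both transfer
    functions must be sampled on that same frequency vector. Magnitudes
    are linearly interpolated to f0 before taking the ratio.
    """
    freq_hz = np.asarray(freq_hz, dtype=float)
    mag_cross = np.interp(f0, freq_hz, np.abs(np.asarray(tf_cross)))
    mag_direct = np.interp(f0, freq_hz, np.abs(np.asarray(tf_direct)))
    return mag_cross / mag_direct


# Synthetic example (NOT measured data): a V->V response falling as 1/f^2
# and a V->R response whose magnitude is 3.3 times larger, with a phase offset.
freq = np.linspace(1.0, 20.0, 191)
tf_v_to_v = 1.0 / freq**2
tf_v_to_r = 3.3 * np.exp(0.3j) / freq**2

ratio_r = coupling_at(freq, tf_v_to_r, tf_v_to_v)
print(f"V->R cross-coupling at 10 Hz: {ratio_r:.2f} rad/m")
```

Running the same helper on the V to P pair, and on each measurement date separately, would give the kind of before/after comparison made in this entry.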

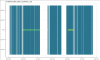

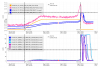

The second attachment shows the SUSPOINT V to M1_DAMP_{ V , R , P } transfer functions measured on May 21st, after applying the new OSEMINF gains. The V to R (and V to P) coupling improves significantly with the new calibration factors. This is best seen by comparing the relative amplitude of the V to V transfer function (in red) with the V to R (in blue) and the V to P (in green), which gives 0.4 rad/m for R and 0.1 rad/m for P, roughly an eightfold reduction for R and a 6.5-fold reduction for P. Therefore, the apparent cross-coupling has been greatly reduced, which indicates that the T1 factor was likely correct, and we can proceed with the rest of the procedure.

[NOTE: We use relative factors for the comparison because all OSEMs were miscalibrated by a factor of 1.3-1.4, so directly comparing the amplitudes from May 7th to May 21st would be inaccurate.]
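A short sketch of why the relative comparison is robust, assuming the miscalibration acts as a single common gain $c$ on all M1 OSEM readbacks (only approximately true here, given the 1.3-1.4 spread). Since the M1_DAMP Euler signals are linear combinations of the OSEM signals, a common gain scales every measured transfer function by the same $c$, which then cancels in the ratio. The symbols $H$ and $\kappa_R$ are introduced here for illustration only:

$$
\kappa_R(f_0) \equiv \frac{\left|H_{V\to R}(f_0)\right|}{\left|H_{V\to V}(f_0)\right|}
\quad\Longrightarrow\quad
\frac{\left|c\,H_{V\to R}(f_0)\right|}{\left|c\,H_{V\to V}(f_0)\right|} = \kappa_R(f_0), \qquad f_0 = 10\ \text{Hz}
$$

The same argument applies to the P ratio. Any OSEM-to-OSEM spread in the gain error does not cancel exactly, so the relative comparison remains an estimate.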

These improvements are encouraging enough that we feel comfortable moving forward with the M1 OSEM calibration for SR3. To do so, Oli is hard at work rescaling the gains of the SR3 loops. We will post when that step is ready.