D. Sigg, E. Merilh, M. Pirello

Exchanged Timing Comparator S1107952 with modified S1201227. This modification adds frequency counter channels to the timing comparator, see ECR.

ECR can be found here E1700246

Daniel, Nutsinee

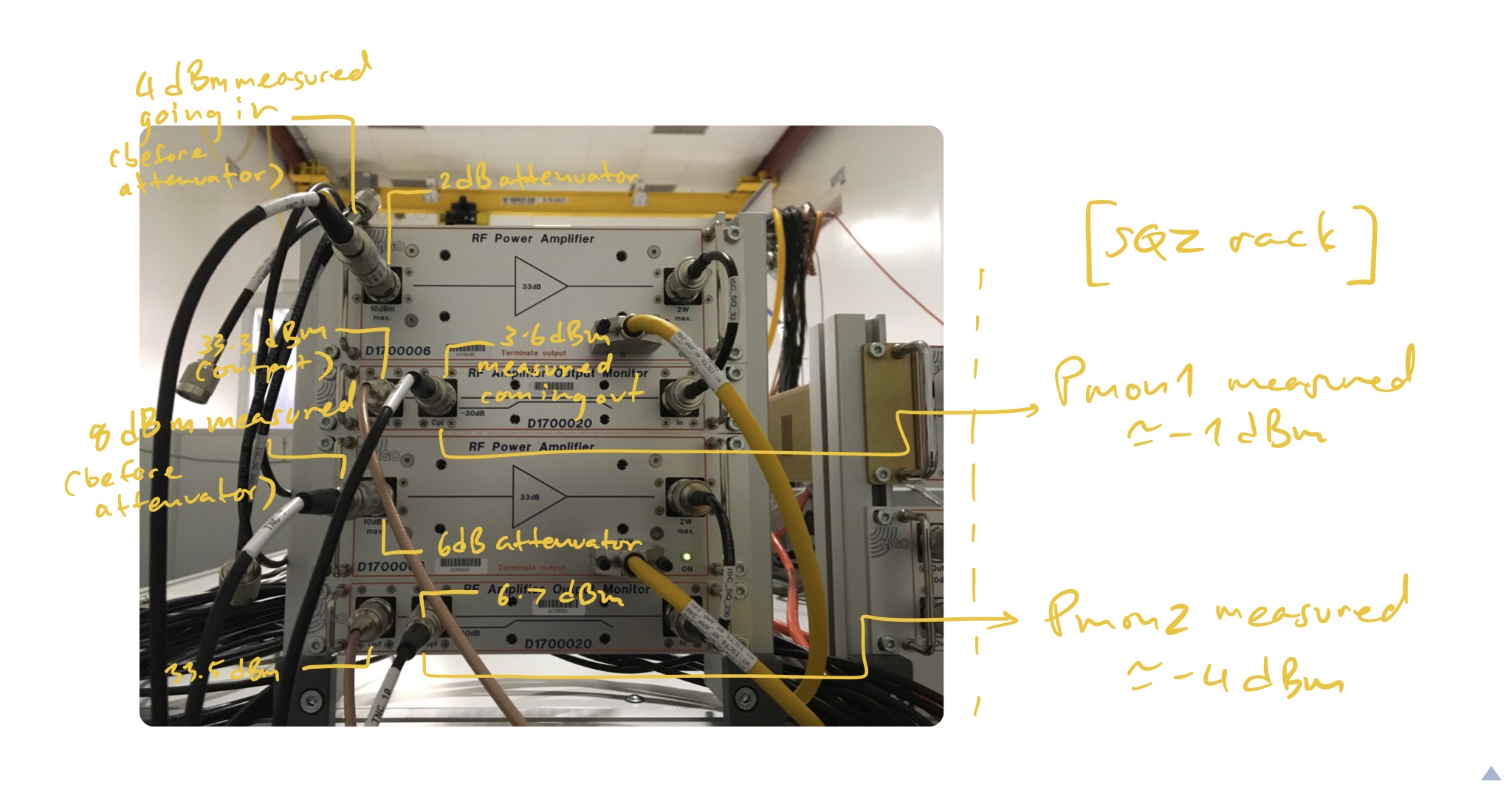

Here's a quick note of what we measured out there with the RF power coming in through (and monitored via) temporary cables. These RF signals drive AOM1 and AOM2. There seems to be too much loss at Pmon2 compared to Pmon1 (almost 11 dB of loss versus 5 dB). The cables going from CPL to Pmon are the same length. The top RF input gets its power all the way from the CER while the bottom RF input gets its power from the SQZ rack, so the difference in the incoming RF power makes sense. Not sure if the factor of two difference coming straight out of the CPL makes sense though.

Terry, Nutsinee

After realizing that the way the cables were hooked up didn't match the wiring diagram, we decided to make it right once and for all. The output from the top RF amp used to drive AOM2; now it's driving AOM1 and the 2 dB attenuator was taken off. The RF power measured on the table through the helix cable was 34 dBm (2.5 W), still within the max drive power allowed (2.9 W). AOM1 (IntraAction ATM-200) diffraction efficiency is now 82%.

The bottom RF amp is now driving AOM2 (AA Opto Electronic MT200). The 6 dB attenuator is still there. The power measured on the table was 33.2 dBm (2.1 W). The maximum power allowed is 2.2 W. The efficiency of AOM2 is still not great currently. The best we've had was 70%.
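As a quick cross-check of the numbers above, here is a minimal sketch of the power conversion, assuming the table readings are in dBm (power relative to 1 mW). The function name and the way the limits are tabulated are illustrative, not part of any site tooling.

```python
def dbm_to_watts(p_dbm: float) -> float:
    """Convert a power level in dBm (dB relative to 1 mW) to watts."""
    return 10 ** (p_dbm / 10.0) / 1000.0


# (label, measured drive in dBm, maximum allowed drive in W) from the entry above
measurements = [
    ("AOM1", 34.0, 2.9),
    ("AOM2", 33.2, 2.2),
]

for label, p_dbm, limit_w in measurements:
    p_w = dbm_to_watts(p_dbm)
    status = "within limit" if p_w <= limit_w else "OVER LIMIT"
    print(f"{label}: {p_dbm} dBm = {p_w:.2f} W (max {limit_w} W, {status})")
```

Both drives come out below their quoted maxima, with AOM2 leaving the smaller margin.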

Daniel, Sheila, Terry, Nutsinee

We hooked up temporary extension cables to bring stuff from the SQZ rack to the table. Below is the list of what the temporary labels correspond to. Note that all the temporary cables are 50 feet long.

TNC 1 = SQ_202_2 (goes on SQZT6. Not hooked up to anything at the moment)

TNC 2 = SQ_203_2 (ISCT6 RF1)

TNC 3 = SQ_12_2 (OPO phase mod)

TNC 4 = SQ_14_2 (SHG phase mod)

TNC 5 = SQ_21_2 (RF power input, U4)

TNC 6 = SQ_212 (TTFSS LO)

TNC 7 = SQ_229 (RF power input, U2)

TNC 8 = SQ_353 (CPL U4)

TNC 9 = SQ_370 (TTFSS mon)

TNC 10 = SQ_372 (CPL U1)

-------------- TNC11-TNC19 are hooked up to DCPD patch panel --------------

TNC 11 = 375_1 (fiber rejected)

TNC 12 = 376_1 (CLF launch)

TNC 13 = 377_1 (CLF rejected)

TNC 14 = 378_1 (SHG launch)

TNC 15 = 379_1 (SHG rejected)

TNC 16 = 380_1 (Seed launch)

TNC 17 = 381_1 (LO launch)

TNC 18 = 382_1 (this one doesn't go anywhere on the table, according to the wiring diagram.)

TNC 19 = 383_1 (fiber trans)

-------------- Leftovers --------------

TNC 20 = used to bring back SHG PDH signal from the demod on the SQZ rack

This is for a leak that was noticed last week. Jeff Bartlett noticed a large puddle forming under the chillers. When I went to investigate I noticed that the X chiller water level was low. I pulled off the panels and saw where a small leak had been dripping on top of the radiator for quite some time. I think this explains the difference in the refill logs for the past couple of months of operation. You can also see sediment forming where the leaking water was evaporating. I pulled the motor and water pump from the chiller body, separated the water pump, and removed the seal, where you can see that the ceramic had become chipped. I will try to find a suitable replacement so this chiller will be a functional spare. Meanwhile, the spare is waiting on some new quick-disconnects to arrive, as the previous ones had been cross-threaded and I didn't trust them to hold a good seal.

Sheila, Jenne

We had a large EQ at just about 19:00 UTC. A couple of ISIs tripped but no suspensions so far. I set the seismic configuration to LARGE_EQ_NO_BRSXY after some BSC ISIs had already tripped. While I was doing that some more BSC ISIs tripped, as well as BS HEPI, but I don't know if the change in state caused the trips or the earthquake did. I reset all of the ISI watchdogs; there were various triggers for the trips, including GS13s, ST2 CPS, T240s and ST1 actuators. A few minutes later (perhaps at 19:05) ITMX and ITMY tripped again. I have just reset them now at about 19:09, however they are not re-isolating because the guardians are waiting for the T240s to settle.

This should be some interesting data to see how the changes in the ISI models have changed the way things respond.

Our seismic FOM is not updating even when I hit update, and Terramon is down, but the USGS says there is a 7.6 in Honduras.

At around 19:35 ITMX and ITMY tripped to damping only again, both because of the T240s and ST2 CPSs. I am going to leave things this way and head home.

Because it wasn't mentioned here, I want to bump my alog 38921, where I detail the VERY_LARGE_EQ button on the SEI_CONF screen. This is probably the kind of earthquake we should use that button for. Unfortunately, it probably wouldn't have worked this time, as we had changed the names of the DAMPED state for the chambers. I've updated that now to take all of the ISIs to ISI_DAMPED_HEPI_OFFLINE.

To reiterate what the button does:

1. Turns off all the sensor correction via the SEI_CONF guardian.

2. Switches all the ISI chamber guardians to ISI_DAMPED_HEPI_OFFLINE

3. Switches all the GS13s to low gain (except the BS & HAM6), and all BSC ST1 L4Cs to low gain as well

4. Puts HAM1 HEPI in READY

Also, Jenne reported that the local SEISMON code gave a verbal alarm about 3 minutes before the S-waves arrived and ISIs started tripping. If the code is alive, I'm not surprised it reported the earthquake before USGS or the Terramon webpages.

I took a look at the BS HEPI trip. This trip was very clearly caused by saturations in the vertical actuators. More work is needed to figure out what to do, but I put together a set of plots which show why I think the actuator signals generated the trip.

I'll note that saturated hydraulic actuators should be treated seriously, and are a good reason to turn things off.

But, hopefully we can keep this from happening in a smarter way than just turning everything off.

[TVo, Niko, Jenne]

This morning, Hugh and Cheryl found that IOT2 was still sitting on its wheels - its feet hadn't been put down yet. So, Hugh helped TVo and Niko get the feet set down. TVo, Niko and I then tweaked up the alignment of the IMC Refl and Trans paths.

Later, Sheila pointed out that the lexan cover was likely still in place in the Refl path. So, TVo and Niko removed it (WP 7276) and put on the dust cover in its place. We decided that the lexan probably only needed to be removed for Refl, since that is used for feedback. The Trans path is just used for triggering and a camera, so it is less critical noise-wise. (Also, we only found one of the dust cover things that goes in place of the lexan.) We then re-tweaked the Refl path alignment, although it needed very little. The spot on the IMC Refl camera looks much more normal now, which is also good.

We have tried a few times to close the WFS loops, and they keep diverging even though our hand alignment has brought the error signals close-ish to zero. So, we checked the phasing of the WFS by driving MC2 in length and maximizing the I phase signal in each quadrant of each WFS. This didn't change much though.
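For reference, the phasing step can be pictured as a rotation of the demodulated (I, Q) pair. The sketch below is only an illustration under that assumption: given the I and Q amplitudes measured at the MC2 length-drive frequency for one quadrant, it computes the rotation angle that puts the whole signal into I. The function names and sign convention are hypothetical and may differ from what the front-end phase rotation uses.

```python
import math


def optimal_demod_phase(i_amp: float, q_amp: float) -> float:
    """Rotation angle in degrees that maximizes I and nulls Q for one quadrant."""
    return math.degrees(math.atan2(q_amp, i_amp))


def rotate(i_amp: float, q_amp: float, phase_deg: float):
    """Apply a demodulation phase rotation to an (I, Q) pair."""
    phi = math.radians(phase_deg)
    i_rot = i_amp * math.cos(phi) + q_amp * math.sin(phi)
    q_rot = -i_amp * math.sin(phi) + q_amp * math.cos(phi)
    return i_rot, q_rot


# Made-up example amplitudes (not measured data): I = 3, Q = 4 counts
phase = optimal_demod_phase(3.0, 4.0)
i_new, q_new = rotate(3.0, 4.0, phase)
print(f"rotate by {phase:.1f} deg -> I = {i_new:.2f}, Q = {q_new:.2f}")
```

If the computed angles are already close to zero for every quadrant, the phasing was not far off to begin with, which matches the observation that re-phasing changed little.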

I have to go, and we just got a juicy earthquake (7.8 in Honduras, seismic systems are tripping, and we're just getting the S and P waves, Rayleigh should be here in ~10 min. Sheila is putting us in the LargeEQ state). So, we'll come back to this IMC work in the morning, but IMC MC2 Trans Sum is up to a max of 91 now, which is way better than the ~15 we had this afternoon. I think (but haven't trended to actually check yet) that we should be getting something like 150 counts on MC2 Trans Sum with 2W PSL power.

On Monday Ken reconnected GV4's motor and encoder power cables, which had been removed to test-fit the shroud. MEDM status is back to RED (was yellow). Remains LOTO.

Sheila, Terry, Nutsinee, Daniel

We were unable to drive a voltage to the SHG TEC. There is an error in the SQZ chassis 2 wiring list E1600384: the power cable for the TEC controllers is missing. The TEC readbacks also suffer from some typos.

Some more info on the TEC work today:

23:08 Travis out of LVEA

23:11 Gerardo out to LVEA by CP1

23:13 Mark and Tyler are done at EY

23:15 Corey out of optics lab

23:18 Marc and Daniel into CER

23:25 Gerardo out

WP7273: Add whitening filters to h1sqz model

Daniel, Dave:

new h1sqz model was started.

New corner station Beckhoff Slow Controls code

Daniel, Sheila, Dave:

New Beckhoff code for h1ecatc1plc[1,3,4] was installed. The resulting new DAQ INI files and target autoBurt.req files were installed.

DAQ Restart

DAQ was restarted to assimilate the new INI files from the above work.

Timing

Daniel:

The h1ecatc1plc1 EPICS settings were not fully restored after yesterday's code changes, this resulted in a RED timing system. Daniel fixed this issue today.

h1isietmy pending filter module change

Jim, Dave:

Jim's latest filter file for h1isietmy was loaded to green up the overview.

Stuck SUS ITMX, ITMY excitations

Jeff K, Dave:

Stuck excitations and testpoints were cleared on h1susitm[x,y]

BTW: Timing system is currently RED. Daniel is testing a comparator on the 16th port (port number 15) of the first fan-out in the CER.

[Bubba, Mark, Tyler, Chandra]

The guys removed the north door after lunch and purge air is flowing well (measured -46 degC dew point).

Particle counts inside the chamber at the door side were all < 100 counts/ft^3.

I turned ion pump #11 (IP11) off and removed HV cables.

Attached are the measured injection locking servo transfer functions, and what the DC output of the locking photodiode looks like when the PZT ramp is applied.

conlog-master.log:

2018-01-09T19:52:31.208080Z 4 Execute INSERT INTO events (pv_name, time_stamp, event_type, has_data, data) VALUES('H1:SQZ-SPARE_FLIPPER_1_NAME', '1515527550857386596', 'update', 1, '{"type":"DBR_STS_STRING","count":1,"value":["��'�"],"alarm_status":"NO_ALARM","alarm_severity":"NO_ALARM"}')

2018-01-09T19:52:31.208301Z 4 Query rollback

syslog:

Jan 9 11:52:31 conlog-master systemd[1]: Unit conlog.service entered failed state.

conlog.log:

Jan 9 11:52:31 conlog-master conlogd[10598]: terminate called after throwing an instance of 'sql::SQLException'

Jan 9 11:52:31 conlog-master conlogd[10598]: what(): Invalid JSON text: "Invalid escape character in string." at position 44 in value for column 'events.data'.

Suspect that it occurred with a Beckhoff restart.

Restarted and updated channel list. 59 channels added. 25 channels removed. List attached.

Found it crashed again, same issue, different channel:

2018-01-10T00:32:45.744823Z 5 Execute INSERT INTO events (pv_name, time_stamp, event_type, has_data, data) VALUES('H1:SQZ-LO_FLIPPER_NAME', '1515544365629095108', 'update', 1, '{"type":"DBR_STS_STRING","count":1,"value":["@@@e?

@@@j�"],"alarm_status":"NO_ALARM","alarm_severity":"NO_ALARM"}')
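Both crashes involve a string PV whose value contains bytes that are not valid text, which then ends up as an invalid JSON document in the INSERT. The following is a minimal sketch of one way such a value could be made safe before it reaches the database. It is not the conlog implementation; the function name is hypothetical, and it assumes the resulting payload is passed to the database as a bound parameter rather than pasted into the SQL text.

```python
import json


def encode_string_event(raw: bytes) -> str:
    """Build a JSON payload for a string PV, tolerating arbitrary bytes.

    Invalid UTF-8 sequences are replaced with U+FFFD, and json.dumps
    escapes backslashes, quotes and control characters.
    """
    text = raw.decode("utf-8", errors="replace")
    payload = {
        "type": "DBR_STS_STRING",
        "count": 1,
        "value": [text],
        "alarm_status": "NO_ALARM",
        "alarm_severity": "NO_ALARM",
    }
    return json.dumps(payload)


# Made-up garbage bytes standing in for an uninitialized string PV
raw_value = b"\xff\xfe'\x00\\q"
encoded = encode_string_event(raw_value)

# The payload parses back cleanly, so a JSON column would accept it
decoded = json.loads(encoded)
print(encoded)
print(repr(decoded["value"][0]))
```

Replacing undecodable bytes loses the original byte values; whether that is acceptable for a configuration log is a separate decision.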

A new TwinCAT code for the corner h1ecatc1 was loaded. It includes the following fixes and features:

Pending: Timing comparator code update for implementing ECR E1700246.

On the afternoon of December 22nd I went back into the optics lab and made a few measurements to try to understand why our Faraday isolation was only 20 dB (see alog 39861). It turned out that one of the TFPs had an extinction ratio that didn't meet the spec, and by switching the positions of the TFPs I was able to measure an isolation of -28 dB.

Thin Film Polarizer extinction ratio:

To measure the extinction ratio of each of the TFPs I used a set up very similar to the image in 39861, with the rotator removed (PD monitoring input power in position A, PD monitoring TFP transmission in position C which is after the Faraday path, used chopper to measure transfer function between PDs).

After the fiber collimator, there was already a mounted PBS in place to clean up the input polarization by reflecting horizontally polarized light. I also used whichever TFP I wasn't measuring to further clean up the polarization. I rotated the half wave plate to measure the maximum and minimum power transmitted by the second TFP to get its extinction ratio. For the TFP mounted with the backplate labeled SN09, I got a ratio of 2220:1 (coated side facing down in the mount, so that the incident beam hit the uncoated side first). For the one mounted on the backplate SN08, I got 336:1 with the coated side down and 577:1 with the coated side up (so that the incident beam first hits the coated side). The spec for these TFPs is greater than 1000:1 (spec here).
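To put the measured ratios on the same scale as the isolation numbers, here is a small sketch that converts each extinction ratio to dB and compares it with the 1000:1 spec. The helper name and the labels in the table are illustrative only.

```python
import math


def extinction_db(ratio: float) -> float:
    """Express a power extinction ratio (max/min transmission) in dB."""
    return 10.0 * math.log10(ratio)


SPEC_RATIO = 1000.0  # spec quoted in the entry: greater than 1000:1

# Extinction ratios as reported above
measured = {
    "SN09, coated side down": 2220.0,
    "SN08, coated side down": 336.0,
    "SN08, coated side up": 577.0,
}

for label, ratio in measured.items():
    verdict = "meets spec" if ratio > SPEC_RATIO else "below spec"
    print(f"{label}: {ratio:.0f}:1 = {extinction_db(ratio):.1f} dB ({verdict})")
```

In these units the spec corresponds to 30 dB, and only the SN09 polarizer exceeds it.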

Better Faraday Performance

In the original set up, SN08 was the first polarizer in the Faraday (the one closer to the OPO), and both TFPs were mounted coated side down. Scattered light from the interferometer will be mostly in the polarization to be rejected by the TFP closer to the OPO, so I swapped the two positions. Now SN09 (2220:1) is mounted coated side down closer to the OPO, and SN08 is mounted coated side down further from the OPO.

With this arrangement I repeated the measurements of isolation, transmission, and backscatter (I also increased the laser power compared to 39861). For transmission I got 95.6% and 97.6%, for isolation I got -27.9 dB and -27.8 dB (0.16%), and for backscatter I got -40 dB and -41 dB.
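The percentage quoted next to the isolation follows from the usual power-ratio definition of dB. A minimal sketch of that arithmetic, with a hypothetical helper name:

```python
def db_to_power_fraction(level_db: float) -> float:
    """Convert a power ratio in dB to a linear fraction."""
    return 10.0 ** (level_db / 10.0)


# Values reported above, in dB
for label, level_db in [
    ("isolation", -27.9),
    ("isolation", -27.8),
    ("backscatter", -40.0),
    ("backscatter", -41.0),
]:
    frac = db_to_power_fraction(level_db)
    print(f"{label}: {level_db} dB = {100.0 * frac:.3f}% of the incident power")
```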

Sheila, Nutsinee, TJ

We made a couple of measurements to try to measure the Faraday rotation angle, but our measurements don't provide any better information than the constraint placed on the error by the isolation measurement.

We tried a few methods of measuring this, including setting the half wave plate to maximize transmission with the rotator both in place and removed and comparing the angles (47 +/- 3 degrees). We also used a polarizer in a rotation stage mounted after the Faraday, setting its angle to maximize transmission with vertically polarized light (with the HWP and TFP after the rotator left in place) and with the light directly out of the rotator. A single reading with this method could be made to about 1 degree, but repeated measurements varied by up to 5 degrees, so there must be something mechanically unreliable about the rotation stage we were using.

The TwinCAT software and medm screens were updated as well.