Ops Shift Log: 11/28/2017, Day Shift 16:00 – 00:00 (08:00 - 16:00) Time - UTC (PT)

State of H1: Unlocked - Vent

Intent Bit: Engineering

Support: N/A

Incoming Operator: N/A

Shift Summary: Continuing vent work

Activity Log: Time - UTC (PT)

15:52 (07:52) Richard – Going into the CER

16:00 (08:00) Start of shift

16:05 (08:05) Richard – Out of CER

16:35 (08:35) Filiberto & Peter – Going to electronics rack by HAM2

16:40 (08:40) John Feicht from CIT on site

17:09 (09:09) Filiberto & Peter – Out of the LVEA

17:21 (09:21) Filiberto & TJ – Going to both end stations to isolate the HWS camera

17:58 (09:58) Cheryl, Keita, Ed, & Jeff K. – Going to HAM2/3 for IO alignment

18:02 (10:02) Brandon King – Dychem on site to service blue mat

18:08 (10:08) Jeff B. – Giving Dychem rep a quick tour of the LVEA

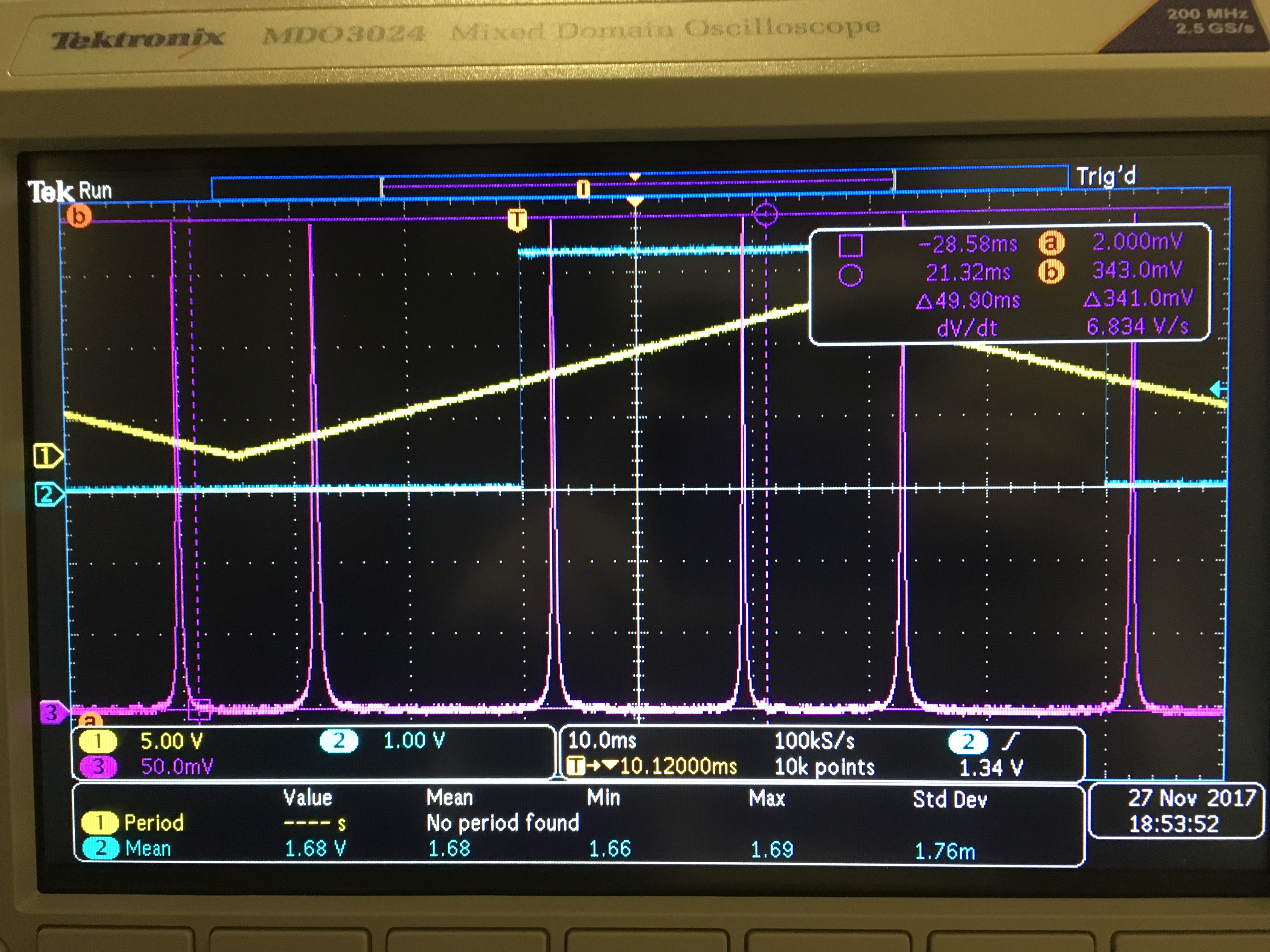

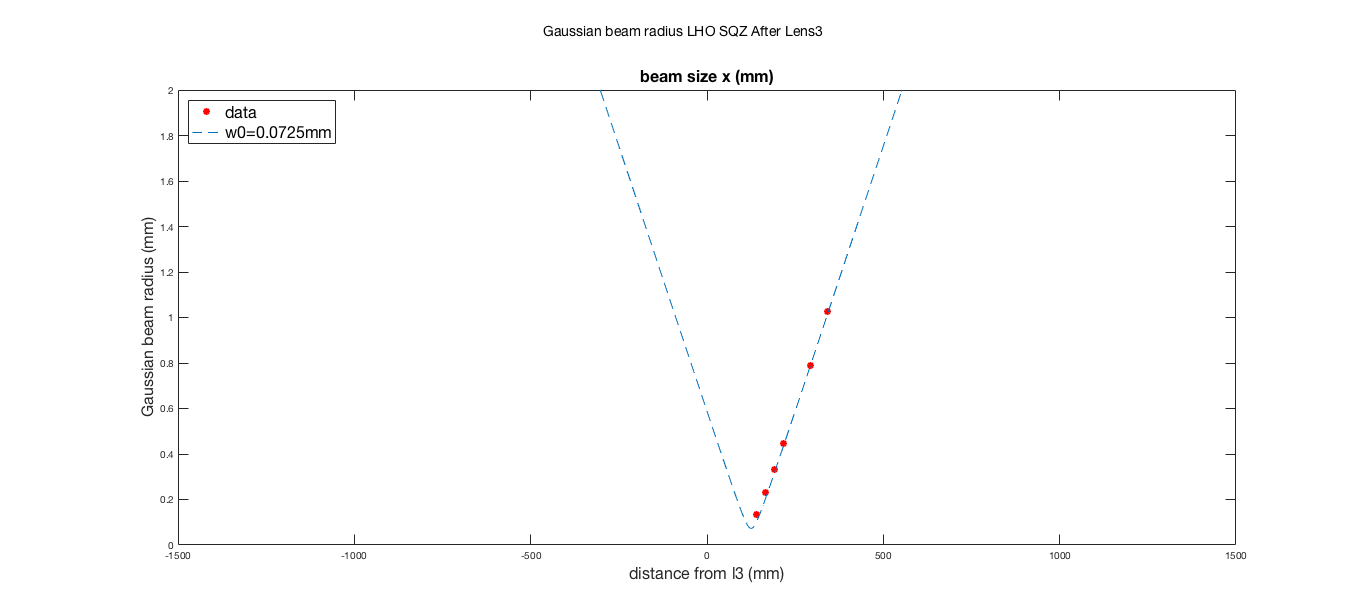

18:40 (10:40) Terry & Nutsinee – Going to Squeezer Bay

18:45 (10:45) Brandon King – Leaving site

19:05 (11:05) End-Y Laser Hazard

19:36 (11:36) Gerardo – Going into LVEA and then into the Optics Lab

20:00 (12:00) TJ & Filiberto – Finished at End-Y – Both Ends are back to Laser Safe

20:32 (12:32) Ed – Out of the LVEA

20:33 (12:33) Jeff K. – Out of the LVEA

21:30 (13:30) Jeff K. & Ed – Back to the LVEA for IO alignment work

22:32 (14:32) Kyle – Going to Mid-Y

23:56 (15:56) Kyle – Back from Mid-Y

00:00 (16:00) End of shift