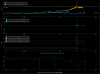

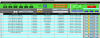

We noticed that the range was dropping even lower than our already low ~145 Mpc, with a lot of low-frequency noise creeping up. Sheila suggested that we look at the PIs, and sure enough, PI31 had started to slowly creep up at the same time as the range degradation (see attached). The SUS_PI guardian node turns on the damping when the H1:SUS-PI_PROC_COMPUTE_MODE31_RMSMON channel goes above 3 and turns it back off when it drops below 3. For now we changed that threshold to 0 to allow continuous damping. This let the mode damp down completely and brought our range back up.

We should think more about damping this mode down further and having two thresholds: one to turn the damping on and one to turn it off.

Changed the threshold to 1. At 0, the node would continuously search for a new phase, thinking that it needed to damp the mode further.
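A minimal sketch of the two-threshold (hysteresis) idea follows. It assumes the decision is made on one RMS sample per update from a monitor like H1:SUS-PI_PROC_COMPUTE_MODE31_RMSMON. Damping switches on above one threshold and only switches off below a separate off threshold, so the mode is pushed below the turn-on level instead of toggling around a single value. The class name, method names, and threshold values are hypothetical; this is not the SUS_PI guardian code.

```python
from dataclasses import dataclass


@dataclass
class HysteresisDamper:
    """Hypothetical on/off damping decision with two thresholds (not the SUS_PI guardian)."""

    on_threshold: float   # turn damping on when the RMS rises above this
    off_threshold: float  # turn damping off only when the RMS falls below this
    damping: bool = False

    def __post_init__(self) -> None:
        # A useful hysteresis band needs the off level strictly below the on level.
        if self.off_threshold >= self.on_threshold:
            raise ValueError("off_threshold must be below on_threshold")

    def update(self, rms: float) -> bool:
        """Feed one RMS monitor sample and return whether damping should be on."""
        if not self.damping and rms > self.on_threshold:
            self.damping = True
        elif self.damping and rms < self.off_threshold:
            self.damping = False
        return self.damping


if __name__ == "__main__":
    # Example thresholds only: on above 3, off below 1.
    damper = HysteresisDamper(on_threshold=3.0, off_threshold=1.0)
    samples = [1.0, 2.0, 3.5, 2.0, 1.5, 0.8, 2.0, 2.5]
    print([damper.update(x) for x in samples])
```

With these example values the samples give `[False, False, True, True, True, False, False, False]`. The mode keeps being damped while its RMS sits between 1 and 3, which a single threshold at 3 would not do.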

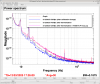

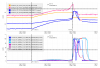

The first attached screenshot shows the spectrum around 10.4 kHz. The mode that rang up and caused this issue is at 10428.38 Hz, previously identified as being in the Y arm (Oli's slides).

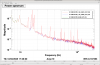

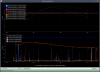

The behavior seemed pretty similar to what we saw a few weeks ago (82961), with broad nonstationary noise in DARM.

The downconversion doesn't seem to be caused by the damping. It started to have a noticeable impact on the range while the damping was off, and when the damping came on and reduced the amplitude of the mode, the range improved.

The attached ndscope screenshot shows how much of the DCPD ADC range we are using. This is a 20-bit ADC, so it would saturate at about 524k counts (2^19). When this PI was at its highest today it was using 10% of the range, while the DARM offset takes up about 20% of the ADC range.
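The ADC numbers can be checked with a little arithmetic. This sketch assumes a signed (two's complement) 20-bit ADC whose full scale is ±2^19 counts. The headroom figure assumes, purely for illustration, that the PI and DARM offset peaks add linearly in the worst case. The function names are hypothetical.

```python
ADC_BITS = 20
# Signed 20-bit ADC: samples span -2**19 .. 2**19 - 1, so full scale is ~524k counts.
FULL_SCALE_COUNTS = 2 ** (ADC_BITS - 1)


def counts_for_fraction(fraction: float) -> float:
    """Counts corresponding to a given fraction of the ADC full scale."""
    return fraction * FULL_SCALE_COUNTS


def headroom_fraction(*fractions: float) -> float:
    """Remaining fraction of full scale if the given peaks add linearly (assumed worst case)."""
    return 1.0 - sum(fractions)


if __name__ == "__main__":
    pi_peak = counts_for_fraction(0.10)      # PI31 at its highest today
    darm_offset = counts_for_fraction(0.20)  # DARM offset
    print(FULL_SCALE_COUNTS, round(pi_peak), round(darm_offset))
    print(f"worst-case headroom: {headroom_fraction(0.10, 0.20):.0%}")
```

This gives a full scale of 524288 counts, about 52k counts for the PI and about 105k counts for the DARM offset. That leaves roughly 70% headroom under the linear-sum assumption.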

The third attachment is the DARM spectrum at the time of this issue, as requested by Peter. As described in 83330, our range decreases with thermalization in each lock. The spectrum shows the degradation that has been typical over these last few days, since the SQS angle servo has been keeping the squeezing more stable. The excess noise seen when the PI was rung up has a spectrum similar to the excess noise we get after thermalization.

Also, for more information about these 10.4 kHz PI modes, see G1901351.

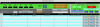

Jeff pointed me to 82686, suggesting that I check the channels that have different digital anti-aliasing (AA) filtering.

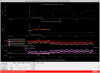

The attached plot shows the DCPD sum and two alternatives. In red is H1:OMC-DCPD_16K_SUM1_OUT_DQ, which has a high pass to reduce single-precision noise and no digital AA filters (and some extra lines). SUM2 has one digital AA filter and the high pass. DCPD sum is the DARM channel and has two digital AA filters and no high pass. The broadband noise is the same in all three, so digital aliasing doesn't seem to be involved in adding this broadband noise.
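Digital AA filtering matters for a mode at 10428.38 Hz because that mode sits above the 8192 Hz Nyquist frequency of a channel sampled at 16384 Hz (assumed here to be the rate of the 16K channels). Without anti-aliasing before downsampling, such a line folds back to a lower apparent frequency. The minimal sketch below computes that folded frequency and checks it against sampled cosines. `alias_frequency` and `samples` are hypothetical helpers, and the sketch only illustrates aliasing of a single line, not of broadband noise.

```python
import math


def alias_frequency(f_hz: float, fs_hz: float) -> float:
    """Apparent frequency (0..fs/2) of a tone at f_hz sampled at fs_hz with no AA filter."""
    f_mod = math.fmod(f_hz, fs_hz)
    return fs_hz - f_mod if f_mod > fs_hz / 2 else f_mod


def samples(f_hz: float, fs_hz: float, n: int) -> list[float]:
    """n samples of a unit-amplitude cosine at f_hz, sampled at fs_hz."""
    return [math.cos(2 * math.pi * f_hz * k / fs_hz) for k in range(n)]


if __name__ == "__main__":
    fs = 16384.0     # assumed sample rate of the 16K DCPD channels
    f_pi = 10428.38  # PI mode frequency from the 10.4 kHz spectrum
    fa = alias_frequency(f_pi, fs)
    print(f"{f_pi} Hz would appear at {fa:.2f} Hz without AA filtering")
    # The two sampled sequences are indistinguishable, which is what aliasing means.
    diff = max(abs(a - b) for a, b in zip(samples(f_pi, fs, 64), samples(fa, fs, 64)))
    print(f"max sample difference: {diff:.1e}")
```

Under these assumptions the PI line would land near 5955.62 Hz, so direct aliasing of the line itself would be expected to appear as a narrow feature near that frequency.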