There is half as much jitter attenuation as we expect from the PMC. Since broadband jitter noise from the HPO has been limiting our sensitivity (in addition to acoustic jitter peaks, which are imposed after the PMC), it is worth understanding the jitter attenuation we get from the PMC and fixing it if possible. I originally wrote this alog after looking at data from September 11th; however, the bullseye QPD seems to have been misaligned or otherwise changed while people were in the PSL during the maintenance period on August 29th. This led me to the seemingly impossible conclusion that the PMC was letting 33 times more jitter through than it should.

I looked at a period of time when the mode cleaner was misaligned and unlocked on July 18th 2017, starting around 2:05:00.

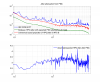

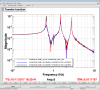

- Green trace: pitch jitter seen by the bullseye PD (which is upstream of the PMC), multiplied by 0.0144, the pointing attenuation expected from the PMC (T0900616). This jitter is dominated by noise that comes from the HPO. I am relying on the calibration of the bullseye sensors into beam-diameter units based on T1700126 (alog 34625). Since the power on the bullseye has drifted a little since the calibration was done, there could be a 10% error in this calibration.

- Blue trace: after the PMC, jitter is added to the beam by resonances of optical mounts on the PSL table, which cause the many jitter peaks seen in the IMC WFS signals. The blue trace is the IMC WFS DC signals multiplied by sqrt(pi/8) to calibrate them into beam diameters (I left this factor out of an earlier version of this log).

- Red trace: a projection, based on the coherence between the bullseye and the IMC WFS, of the fraction of the jitter seen by the IMC WFS that is coherent with the bullseye (HPO noise), computed as sqrt(coh) * WFS B PIT spectrum. This is a factor of 2 larger than the expectation based on the bullseye.
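
A minimal numpy sketch of the red-trace projection, assuming non-overlapping Hann-windowed segments and synthetic white noise standing in for the bullseye and WFS B PIT channels. The function names are hypothetical and this is not the tool used to make the plots. If the bullseye sees only the common (HPO) noise, sqrt(coh) times the WFS ASD recovers the ASD of the part of the WFS signal that is coherent with it.

```python
import numpy as np


def welch_spectra(x, y, fs, nperseg):
    """Average Hann-windowed FFTs over non-overlapping segments.

    Returns frequencies, one-sided PSDs of x and y, and their cross spectrum
    (DC and Nyquist bins are not given special treatment).
    """
    nseg = len(x) // nperseg
    win = np.hanning(nperseg)
    scale = 2.0 / (fs * np.sum(win**2))  # one-sided PSD normalization
    nbins = nperseg // 2 + 1
    sxx = np.zeros(nbins)
    syy = np.zeros(nbins)
    sxy = np.zeros(nbins, dtype=complex)
    for k in range(nseg):
        seg = slice(k * nperseg, (k + 1) * nperseg)
        X = np.fft.rfft(win * x[seg])
        Y = np.fft.rfft(win * y[seg])
        sxx += np.abs(X) ** 2
        syy += np.abs(Y) ** 2
        sxy += np.conj(X) * Y
    freqs = np.fft.rfftfreq(nperseg, d=1.0 / fs)
    return freqs, scale * sxx / nseg, scale * syy / nseg, scale * sxy / nseg


def coherent_projection(witness, target, fs, nperseg):
    """Return freqs, coherence, target ASD, and sqrt(coh) * target ASD."""
    f, sxx, syy, sxy = welch_spectra(witness, target, fs, nperseg)
    coh = np.abs(sxy) ** 2 / (sxx * syy)
    asd = np.sqrt(syy)
    return f, coh, asd, np.sqrt(coh) * asd


if __name__ == "__main__":
    # Synthetic stand-ins: the "bullseye" sees only the common noise, the
    # "WFS" sees the common noise plus an equal amount of independent noise.
    rng = np.random.default_rng(0)
    fs, n = 1024.0, 1024 * 64
    common = rng.standard_normal(n)
    wfs = common + rng.standard_normal(n)
    f, coh, asd, proj = coherent_projection(common, wfs, fs, 1024)
    print("mean coherence:", np.mean(coh[1:-1]))
```

In this toy case the coherence is about 0.5 and the projection sits near the ASD of the common noise alone, which is the property the red trace relies on.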

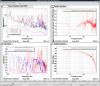

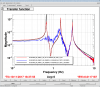

One possible explanation would be that the coherence is caused by something other than jitter coupling through the PMC, such as residual intensity noise or acoustics on the table, but neither of these seems to be the case (second attachment, bottom left). There is no coherence of the WFS PIT with the SUM, which rules out intensity noise. The table accelerometers have good coherence at the frequencies where the bullseye is not coherent: at the resonant frequencies of optical mounts, table acoustics explain the jitter, but not at the frequencies where the bullseye coherence is highest.
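
The argument above amounts to asking, bin by bin, which witness channel best explains the WFS PIT signal. A minimal sketch of that bookkeeping, assuming the coherences have already been computed on a common frequency grid. The function name, channel keys, threshold, and the numbers in the example are hypothetical illustrations, not values from these measurements.

```python
def attribute_bins(freqs, coherences, threshold=0.5):
    """Label each frequency bin with the witness most coherent with WFS PIT.

    coherences maps a witness name (e.g. bullseye, WFS SUM, table
    accelerometer) to a list of coherence values, one per bin in freqs.
    Bins where no witness reaches `threshold` are labelled None.
    """
    labels = []
    for i, f in enumerate(freqs):
        name, best = max(
            ((n, c[i]) for n, c in coherences.items()), key=lambda t: t[1]
        )
        labels.append((f, name if best >= threshold else None))
    return labels


# Made-up coherences for three bins, purely to show the output format.
example = {
    "bullseye": [0.2, 0.6, 0.1],
    "wfs_sum": [0.05, 0.02, 0.03],
    "table_acc": [0.7, 0.1, 0.2],
}
print(attribute_bins([100, 200, 300], example))
```

A SUM channel that never wins any bin is the "no intensity-noise coupling" case, and bins split between the bullseye and the accelerometer correspond to the HPO-versus-mount-resonance picture described above.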

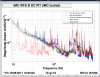

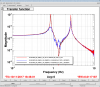

The third attachment shows that the spectrum of IMC WFS B (uncalibrated, taken with the IMC locked) has gotten noisier towards the end of O2.

It would be good to realign the bullseye, or replace it with the new version, and check that it is well aligned and that the beam size is correct. After the in-chamber work with the IMC bypass is finished, we can repeat this measurement with more power.

It looks like our PMC does not attenuate jitter as much as it should; I estimate it attenuates about 33 times less than it should.

The PMC should attenuate pointing jitter by 0.0163 for yaw and 0.0144 for pitch according to T0900616.

The first plot shows spectra and coherences from a time (only about a minute and a half long) when there was 2 W into the IMC but MC2 was misaligned. The IMC WFS DC signals are calibrated into beam widths. The bullseye QPD is calibrated into beam widths in the front end (alog 34625); in this plot I've added a factor for the attenuation we expect from the PMC. The bullseye shows jitter that comes from the HPO with a smooth spectrum, which, after the expected PMC attenuation, should be well below the measured noise on the IMC WFS. In the lower panel you can see that the bullseye has rather high coherence with IMC WFS B PIT, suggesting that the jitter is not attenuated by the PMC as much as it should be.
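
The sqrt(pi/8) factor used to calibrate the WFS DC signals can be motivated by linearizing a quadrant detector's response to a Gaussian beam. This sketch assumes an ideal QPD (infinite extent, no gap) and takes w to be the 1/e² intensity radius. The pitch signal (top minus bottom over sum) is then erf(sqrt(2)·x/w), whose small-signal slope is sqrt(8/pi), so multiplying by sqrt(pi/8) returns x/w. How this w maps onto the beam-width units used in the plots is an assumption of the sketch, and the function names are hypothetical.

```python
import math


def qpd_pitch_signal(x, w):
    """Normalized (top - bottom) / sum signal of an ideal QPD (no gap, infinite
    extent) for a Gaussian beam of 1/e^2 intensity radius w displaced by x."""
    return math.erf(math.sqrt(2.0) * x / w)


def displacement_in_radii(signal):
    """Linearized inverse: scale by sqrt(pi/8) to get x / w for small offsets."""
    return math.sqrt(math.pi / 8.0) * signal


# Small offsets are recovered almost exactly; larger ones are underestimated
# because erf saturates.
for x in (0.01, 0.1, 0.5):
    print(x, displacement_in_radii(qpd_pitch_signal(x, 1.0)))
```

Jitter at the 1e-6 level is deep in the linear regime, so the saturation of erf does not matter for these spectra.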

At 200 Hz, it looks like the PMC is only attenuating the jitter by a factor of about 0.47. WFS B PIT is 1.2e-6 /rt Hz at 200 Hz with a coherence of 0.5 with the bullseye, so the part of the WFS noise that is coherent with the bullseye is about sqrt(0.5) * 1.2e-6 ≈ 8.5e-7 /rt Hz. The bullseye PIT is 1.8e-6 /rt Hz at 200 Hz (without the rescaling I've done in the attached plot), which gives 8.5e-7 / 1.8e-6 ≈ 0.47, compared with the expected 0.0144.
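
The 200 Hz arithmetic can be checked in a few lines. This simply reproduces the numbers quoted in the text (so it inherits the caveat about the bullseye alignment); the variable names are ours.

```python
import math

# Values at 200 Hz as quoted in the text (units as quoted there).
wfs_b_pit = 1.2e-6     # WFS B PIT ASD, /rt Hz
coherence = 0.5        # coherence of WFS B PIT with the bullseye
bullseye_pit = 1.8e-6  # bullseye PIT ASD, no PMC attenuation applied
expected_pit = 0.0144  # PMC pitch attenuation from T0900616

coherent_part = math.sqrt(coherence) * wfs_b_pit       # WFS noise coherent with bullseye
measured_attenuation = coherent_part / bullseye_pit    # apparent PMC attenuation
excess = measured_attenuation / expected_pit           # how much worse than expected

print(f"{coherent_part:.2e} {measured_attenuation:.2f} {excess:.0f}")
# prints: 8.49e-07 0.47 33
```

The last ratio is where the "33 times" figure comes from.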

Edit: The coherence of WFS B PIT with the bullseye cannot be explained by residual intensity noise in the PIT signal that is coherent with the jitter noise. I've updated the plot to include a trace that shows no coherence between WFS B SUM and WFS B PIT.

Second edit: The bullseye QPD was misaligned during the maintenance window on August 29th, so this result may be misleading.

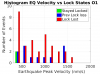

I ran a couple of PMC scans. Good data should start at around 15/11/2017 2:50:57 UTC. The largest higher-order mode is a 20 mode, with about 1/4 of the power that is in the 00 mode.
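
If the transmitted peak heights in the scan are taken as proportional to the power coupled into each mode (our assumption; it ignores differences in linewidth and detector response between modes), the quoted 20-mode peak already bounds how much of the light is in the 00 mode. A minimal sketch, with a hypothetical helper and only the two relative heights quoted above:

```python
def fundamental_fraction(peak_heights):
    """Fraction of the summed peak heights that sits in the 00 mode.

    peak_heights maps a mode label (e.g. "00", "20") to its relative
    transmitted peak height. Higher-order modes left out of the dict would
    lower the true fraction, so with an incomplete list this is an upper bound.
    """
    return peak_heights["00"] / sum(peak_heights.values())


# Relative heights from the scan description: 20 mode at about 1/4 of the 00 mode.
print(fundamental_fraction({"00": 1.0, "20": 0.25}))  # 0.8
```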

It should be noted that the only maintenance on the PSL on August 29th was done entirely from the control room; no PSL incursion was made. Looking into it further, there are no alogs from that day (or from the preceding Monday or following Wednesday) indicating a PSL incursion, and the operator's daily activity log also indicates that no incursion was made. At this point we do not know why the bullseye PD became misaligned. Peter King is going into the enclosure tomorrow (11/17/2017) for something unrelated and has agreed to take a look at the bullseye PD to see if anything is amiss.