As part of the HAM4 install work, we removed and decommissioned the DLC mount shown in the attached drawing:

D1200984 OPTLEV DLC ASSY

TITLE: 10/18 Day Shift: 15:00-23:00 UTC (08:00-16:00 PST), all times posted in UTC

STATE of H1: Planned Engineering

INCOMING OPERATOR: None

SHIFT SUMMARY:

LOG:

HWS work is still the main activity of the day

15:37 Richard and Fil into the CER

15:45 Richard and Fil out

16:14 Travis out to ITMX

16:45 Dust Monitor 2 has crashed

17:03 Travis out

HWS work

17:05 TJ, John and Aiden heading into the LVEA to HAM4

17:10 Lights on at EX

17:18 Jason headed out to BSC1 to help with Hartmann alignment

17:19 Lights off at EX

17:20 Richard tells me the fire panel at EY is acting up; HFD is on the way

17:48 Dave B restarting NDS1

17:51 Karen and Vanessa heading to MY and then MX

18:58 Karen and Vanessa heading back to corner

19:00 Jason, Aiden et al. are back for lunch

19:56 Peter into the optics lab

21:23 TJ into optics lab and then out to HAM4

21:28 FW0 is down for an upgrade

22:20 Sheila into the LVEA.

22:39 Cheryl out to LVEA to look for a container

22:45 Sheila is transitioning the squeezer bay to laser hazard. M1700239-v1 is the TSOP for this activity.

[Gregg, Jason, Aidan, TVo, TJ, Jon]

We located the correct Siskiyou 2" mirror mounts (IXM200) for the HAM4 work this morning. Thanks to Corey, who pointed us to two spares that were in the optics lab and were Class A cleaned.

Recall that yesterday we discovered that the 2" mirror mounts we had been given for mounting the DCBS were DLC mounts, not Siskiyou mounts. The DLC mounts put the optic height 0.5" higher than the Siskiyou mounts do.

We removed the existing DLC mounts that were added yesterday and removed the dichroic beam splitters (DCBS - T = 300ppm for 1064nm at normal incidence [MI1000]) from these mounts. In doing so, we noted that both DCBS had visible particulates on them. Additionally, the DCBS on the X-arm had a small smudge on the optic and a chip in the barrel. We decided to proceed with the install of the new Siskiyou mounts and assess the action to be taken with the optics after install.

The ITMY laser was turned on again and we were able to use the leakage of this laser through SR2 to align the Y-arm DCBS. We pitched it down a considerable way and confirmed that the reflection from it was dumped on the scraper baffle well below the optical axis.

We adjusted the SR3 alignment to get a ghost beam coming off HWS STEER M1 aligned through the HWSX optics. We installed the new Siskiyou mount on the existing pedestal and noted the height was approximately 6mm higher than neighbouring optics. The pedestal and mount were shifted towards the in-vacuum lens by approximately 3" (new position is shown in the attached image). The original assembly is shown in D1101083-v2.

We centered the DCBS on the laser beam and then adjusted the pitch so that the return beam was dumped on the scraper baffle. For reference, we measured the return beam where it hit HWSX STEER M3: it was 10mm below the incident beam on that optic. Since the DCBS is approximately 12" away, this puts the reflected beam at an angle of about -1.9 degrees relative to the incident beam. This put the return beam about 45mm down from center on the HWS SCRAPER BAFFLE.
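The -1.9 degree figure follows from small-angle geometry: a 10mm vertical separation over roughly 12" (304.8mm). A minimal Python sketch of that arithmetic, assuming the 10mm offset was measured about 12" from the DCBS and that the return beam was nominally retro-reflected before the pitch adjustment (so the DCBS pitch change is half the beam angle). The function names are illustrative only.

```python
import math

INCH_MM = 25.4  # exact inch-to-mm conversion


def reflected_beam_angle_deg(offset_mm: float, distance_mm: float) -> float:
    """Angle between incident and reflected beams, inferred from the vertical
    separation measured at a distance from the mirror.
    Negative means the reflected beam points downward."""
    return -math.degrees(math.atan2(offset_mm, distance_mm))


def mirror_pitch_deg(beam_angle_deg: float) -> float:
    """Assumes a retro-reflecting start: tilting a mirror by theta
    deflects the reflected beam by 2 * theta."""
    return beam_angle_deg / 2.0


def drop_at_distance_mm(beam_angle_deg: float, distance_mm: float) -> float:
    """Vertical displacement of the reflected beam after travelling distance_mm."""
    return distance_mm * math.tan(math.radians(beam_angle_deg))


if __name__ == "__main__":
    angle = reflected_beam_angle_deg(10.0, 12 * INCH_MM)
    print(f"beam angle {angle:.2f} deg, DCBS pitch {mirror_pitch_deg(angle):.2f} deg")
```

`drop_at_distance_mm` can be used the other way round, to predict where the return beam lands on a baffle once its distance from the DCBS is known.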

The optical mounts are installed and aligned; however, the optics themselves almost certainly need to be cleaned, if not replaced. For reference, the HWSX DCBS sees about 300mW of 1064nm light (at about 25W input power into the IFO). The HWSY DCBS sees about 100nW.

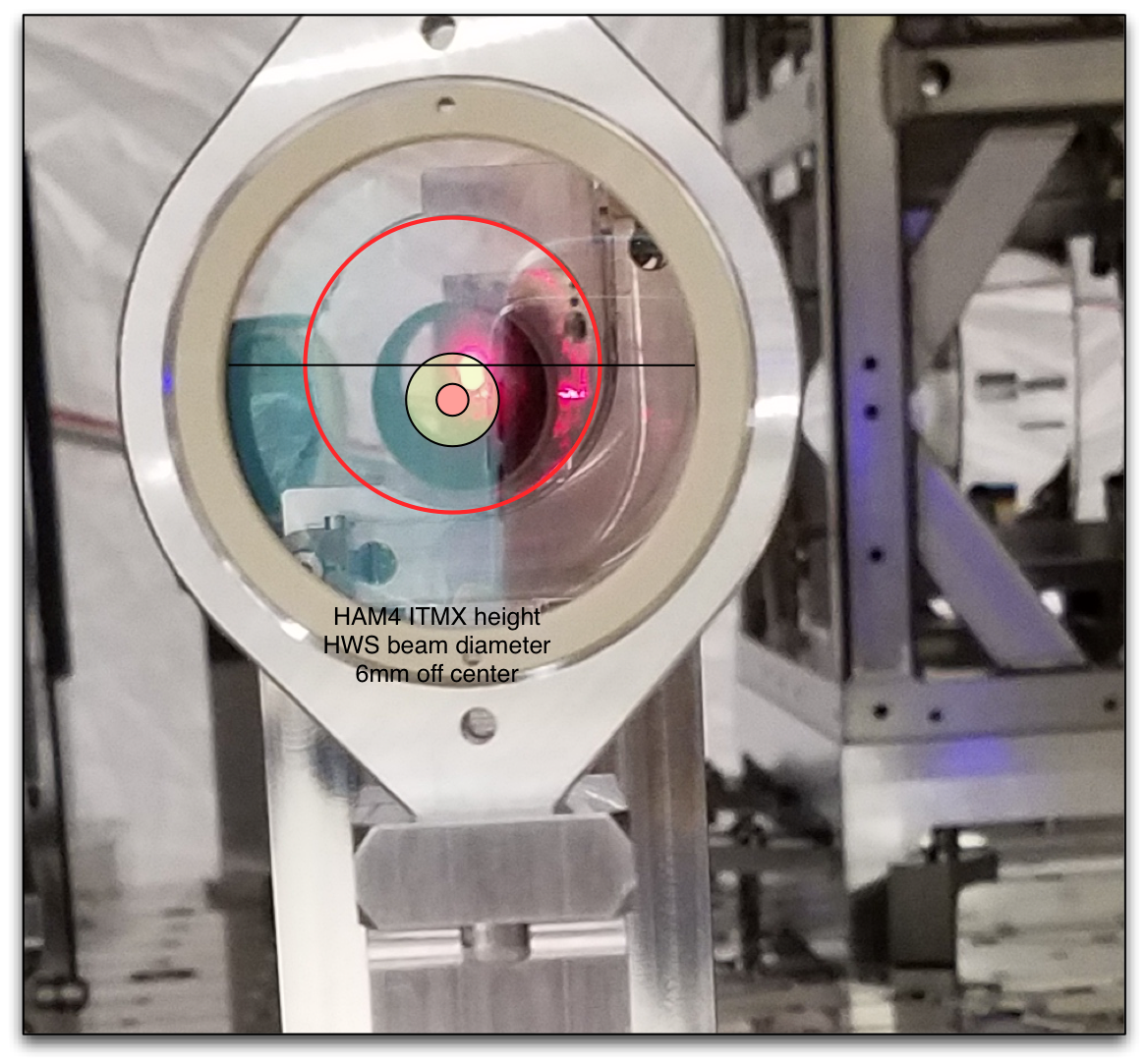

Here's an image of the estimated HWS beam size (about 16mm diameter) and IFO beam size (about 5mm diameter) at the DCBS. The beams are centered about 6mm below the center of the optic. That leaves about 19mm from the center of the beam to the edge of the optic, or about 2.4 beam radii.
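The clearance numbers are simple geometry. A sketch, assuming the DCBS is a 2" (50.8mm) diameter optic, consistent with the 2" mounts in this entry, and measuring from the beam center to the optic edge along the direction of the 6mm offset:

```python
OPTIC_DIAMETER_MM = 2 * 25.4  # assumed 2" DCBS


def edge_clearance_mm(optic_diameter_mm: float, beam_offset_mm: float) -> float:
    """Distance from the beam center to the nearest optic edge when the beam
    is displaced beam_offset_mm from the optic center."""
    return optic_diameter_mm / 2.0 - abs(beam_offset_mm)


def clearance_in_beam_radii(clearance_mm: float, beam_diameter_mm: float) -> float:
    """Express an edge clearance in units of the beam radius."""
    return clearance_mm / (beam_diameter_mm / 2.0)


if __name__ == "__main__":
    clear = edge_clearance_mm(OPTIC_DIAMETER_MM, 6.0)
    print(f"clearance {clear:.1f} mm")
    print(f"HWS beam (16 mm): {clearance_in_beam_radii(clear, 16.0):.2f} radii")
    print(f"IFO beam (5 mm):  {clearance_in_beam_radii(clear, 5.0):.2f} radii")
```

Under these assumptions the 16mm HWS beam gets about 2.4 radii of clearance, while the 5mm IFO beam gets about 7.8 radii.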

You can see a slight smudge near the center, just off to the South-West (lower left) in this orientation.

Pictures of HWSX STEER M1 install

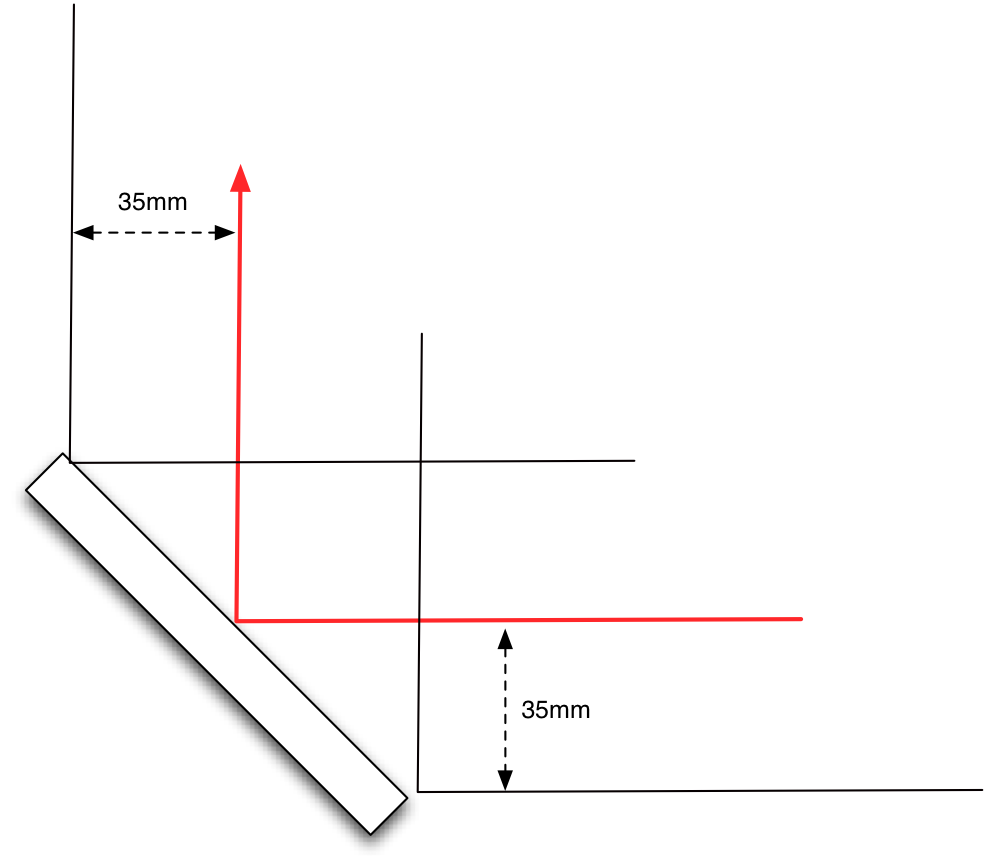

We confirmed that the incident beam and the reflected beam were approximately 35mm from the edge of the aperture (as viewed from each direction).

I added an FRS ticket for the particulate issue: https://services.ligo-la.caltech.edu/FRS/show_bug.cgi?id=9280

It appears that some process variables are taking on values with characters that do not conform to JSON:

Oct 18 11:10:12 conlog-master conlogd[7334]: terminate called after throwing an instance of 'sql::SQLException'

Oct 18 11:10:12 conlog-master conlogd[7334]: what(): Invalid JSON text: "Invalid escape character in string." at position 44 in value for column 'events.data'.

Oct 18 11:41:52 conlog-master conlogd: Server started. prefix: 'H1:CDS-CONLOG_' database: 'conlog' max inserts: 500 max time: 30 keep alive period: 30 time source: 0 create_messages: 1

Oct 18 11:59:32 conlog-master conlogd[8524]: terminate called after throwing an instance of 'sql::SQLException'

Oct 18 11:59:32 conlog-master conlogd[8524]: what(): Invalid JSON text: "Invalid escape character in string." at position 44 in value for column 'events.data'.

Oct 18 12:19:34 conlog-master conlogd: Server started. prefix: 'H1:CDS-CONLOG_' database: 'conlog' max inserts: 500 max time: 30 keep alive period: 30 time source: 0 create_messages: 1

Oct 18 12:34:12 conlog-master conlogd[9092]: terminate called after throwing an instance of 'sql::SQLException'

Oct 18 12:34:12 conlog-master conlogd[9092]: what(): Invalid JSON text: "Invalid escape character in string." at position 44 in value for column 'events.data'.

The same error repeats at each loading of the channel list.
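One plausible way a value can trip MySQL's "Invalid escape character in string" check is a JSON document assembled by string concatenation, which passes a backslash in a PV value through unescaped. This is an assumption about the cause, not a diagnosis of conlog itself, which is not written in Python. The sketch below only illustrates that failure mode and the usual fix of letting a JSON encoder do the escaping. The PV name and value are hypothetical.

```python
import json


def naive_event_json(pv_name: str, value: str) -> str:
    """Hypothetical: builds JSON by concatenation, with no escaping at all."""
    return '{"name": "' + pv_name + '", "value": "' + value + '"}'


def safe_event_json(pv_name: str, value: str) -> str:
    """json.dumps escapes backslashes, quotes and control characters."""
    return json.dumps({"name": pv_name, "value": value})


def is_valid_json(text: str) -> bool:
    """True if text parses as JSON (strict mode rejects bad escapes)."""
    try:
        json.loads(text)
        return True
    except json.JSONDecodeError:
        return False


if __name__ == "__main__":
    value = "C:\\data\\run1"  # contains literal backslashes: \d and \r in JSON terms
    print(is_valid_json(naive_event_json("H1:EXAMPLE_PV", value)))
    print(is_valid_json(safe_event_json("H1:EXAMPLE_PV", value)))
```

The naive string contains `\d`, which is not a legal JSON escape, so it is rejected. The encoded version round-trips to the original value.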

Restarted the MySQL server with the general query log enabled to log the SQL query causing the crash. Restarted conlog. Crash did not occur on restart this time. Will leave log enabled for now.

I found the original naming of the squeezer flippers confusing, so I have renamed them now, since changing names will only become more difficult later.

The flipper in the CLF path was called SQZ-OPO_IR_FLIPPER; I changed this to SQZ-CLF_FLIPPER. The one in the green path from the SHG to the fiber was called SQZ-OPO_GR_FLIPPER; I renamed this SQZ-SHG_FIBRFLIPPER.

I updated the system manager and the channel link list.
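Renaming means every file that refers to the old channel names has to be updated. A hypothetical helper for doing that substitution in a text file such as a channel list, using only the two old-to-new mappings from this entry. It is not the tool actually used for the system manager or channel link list updates, and the `_CONTROL` suffix in the test strings is made up for illustration.

```python
import re

# Old -> new flipper channel names, as listed in this entry
FLIPPER_RENAMES = {
    "SQZ-OPO_IR_FLIPPER": "SQZ-CLF_FLIPPER",
    "SQZ-OPO_GR_FLIPPER": "SQZ-SHG_FIBRFLIPPER",
}

# One alternation of the escaped old names, so replacement happens in a single
# pass and a newly inserted name is never re-matched.
_OLD_NAMES = re.compile("|".join(re.escape(old) for old in FLIPPER_RENAMES))


def rename_flippers(text: str) -> str:
    """Replace every occurrence of an old flipper name with its new name."""
    return _OLD_NAMES.sub(lambda m: FLIPPER_RENAMES[m.group(0)], text)


if __name__ == "__main__":
    print(rename_flippers("H1:SQZ-OPO_IR_FLIPPER_CONTROL\nH1:SQZ-OPO_GR_FLIPPER_CONTROL"))
```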

All HAM and CS BSC ISIs are currently locked.

WP7184:

I have completed the first stage of offloading raw minute trend data from h1tw1's SSD-RAID array. This step required a quick restart of h1nds1 in order for it to find recent raw_minute_trend data at its temporary location on h1tw1.

I'm starting the next step: copying the data from h1tw1 to h1fw1 for permanent storage on spinning hard disks. This runs in the background and typically takes three days.

File transfer from /trend/minute_raw_1192382538 to /frames/trend/minute_raw/minute_raw_1192382538 has started in the background.
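The numeric suffix in minute_raw_1192382538 appears to be a GPS time in seconds; that reading is an assumption. A minimal sketch for converting it to UTC, using the 18 s GPS-UTC leap-second offset that applies to dates from 2017-01-01 onward. The offset is hard-coded and would be wrong for earlier epochs. `dir_gps` is a hypothetical helper.

```python
from datetime import datetime, timedelta

GPS_EPOCH = datetime(1980, 1, 6)  # GPS time zero, in UTC
GPS_MINUS_UTC_S = 18  # leap-second offset in effect from 2017-01-01 (assumed fixed here)


def gps_to_utc(gps_seconds: int) -> datetime:
    """Convert GPS seconds to a naive datetime representing UTC."""
    return GPS_EPOCH + timedelta(seconds=gps_seconds - GPS_MINUS_UTC_S)


def dir_gps(path: str) -> int:
    """Hypothetical: take the trailing number from a name like minute_raw_<gps>."""
    return int(path.rsplit("_", 1)[-1])


if __name__ == "__main__":
    print(gps_to_utc(dir_gps("/trend/minute_raw_1192382538")))
```

Under that assumption the directory name corresponds to 2017-10-18 17:22:00 UTC, within this shift.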

If anyone going into the LVEA feels so inclined to power cycle it for me, I would appreciate it. It's located on the NW side on top of the HEPI beam (kinda tucked underneath the chamber).

17:14 HAM2 dust monitor reset. THANKS!

TITLE: 10/18 Day Shift: 15:00-23:00 UTC (08:00-16:00 PST), all times posted in UTC

STATE of H1: Planned Engineering

OUTGOING OPERATOR: None

CURRENT ENVIRONMENT:

Wind: 17mph Gusts, 12mph 5min avg

Primary useism: 0.05 μm/s

Secondary useism: 0.26 μm/s

QUICK SUMMARY:

(Subbing for Cheryl)

Some discussion about whether the TGG crystal in the output Faraday should be epoxied in place or should be floating. The two rotators examined thus far, SN002 and SN003, have the TGG crystal loose within its containment sleeve.

Attached are pictures of the spare TGG crystal. The hole in the sleeve, which may be a vent hole, is clearly plugged with something that is hard to the touch (VentHole.jpg). Looking at the inside, one can see where this substance has spread (Blister.jpg). When prodded gently, the crystal did not move. Obviously, there are no magnetic fields to hold it in place in this case.

Fil, Patrick, Ed, Daniel

Big thanks to Jon, TJ, TVo and Jason for a 6-hour effort.

Today was a big day aligning things on HAM4. We got the main alignment laser set up using the gooseneck mounts and corner cubes. We got the new 4" HWSX STEER M1 mirror mount installed, replacing the old mount and shifting the pedestal in the +Y direction by approximately 20mm.

Unfortunately, we ran into two problems with the DCBS mirror mount replacement. Firstly, the pedestals were machined for 2" Siskiyou mounts (which we were to recover from the HAM IS OPLEVs). However, these turned out to be a different (non-Siskiyou) mirror mount, which placed the optic about 7-10mm higher than desired. Secondly, it turns out that the X and Y HWS arms are at slightly different beam heights. Together, these two issues put the Y-arm DCBS about 10mm higher than desired (borderline usable) and the X-arm DCBS about 20mm higher (not usable). We're going to have to remachine them. I'll post images and full details tomorrow.

Good news: the Y-arm DCBS can now easily tilt its reflection down cleanly into the HWS SCRAPER baffle. Also, in future the DCBS alignment can be done with just a simple laser confined to HAM4, rather than the whole ITMY, BS, SR3, PR3 procedure we did today.

J. Kissel

Took a set of Top to Top transfer functions for H1 SUS SRM this evening. Results indicate that the suspension remains free after Betsy & co re-engaged the OSEMs, which had been backed off during the monolithic optic install.

Data lives here (and has been exported to files of similar name):

/ligo/svncommon/SusSVN/sus/trunk/HSTS/H1/SRM/SAGM1/Data/

2017-10-13_2122_H1SUSSRM_M1_WhiteNoise_L_0p01to50Hz.xml

2017-10-13_2122_H1SUSSRM_M1_WhiteNoise_P_0p01to50Hz.xml

2017-10-13_2122_H1SUSSRM_M1_WhiteNoise_R_0p01to50Hz.xml

2017-10-13_2122_H1SUSSRM_M1_WhiteNoise_T_0p01to50Hz.xml

2017-10-13_2122_H1SUSSRM_M1_WhiteNoise_V_0p01to50Hz.xml

2017-10-13_2122_H1SUSSRM_M1_WhiteNoise_Y_0p01to50Hz.xml

Will post detailed graphical results with the usual comparison against previous measurements and the model on Monday. Attached is a sneak peek of the Pitch to Pitch TF, the DOF usually most sensitive to shenanigans.

More detailed plots showing all DOFs. Confirms that the SUS is healthy and free thus far, after M2 & M3 OSEMs have been re-engaged to surround their magnets/flags. Never been closer!

Betsy, Hugh, TJ

Last week Betsy put the heater on the table, and today Hugh checked its vertical center with an auto-level. Some washers were added to get it as close to center as possible; it ended up around 0.4mm high. Betsy and I then had to wiggle the assembly into place and rotate the entire gold ceramic holder so that the screws on the outside would clear the OSEM brackets. The heater is currently sitting ~6mm from the back of the SR3 optic, and it is plugged into the feedthrough.

Picture attached.

Here are a few more pics. As TJ notes, the ROC front face is 5-6mm from the SR3 AR surface. It is locked down in this location.

Note, we followed a few hints from LLO's install:

https://alog.ligo-la.caltech.edu/aLOG/index.php?callRep=25831

Continuity checks at the feedthru still need to be made. Will solicit EE for their help.

Initial continuity test failed. Found an issue with the in-vacuum cable: the power pins were not pushed in completely. Pins were pushed in until a locking click was heard.

Readings are:

Larger Outer Pins, Heater: 66.9Ω

Inner pair (leftmost, looking at the connector from the air side), thermocouple: 105Ω

Found the center of SR3 (-X scribe) to be 230.2mm above the optical table. By sighting the top and bottom of the RoC Heater, found its center to be at 231.4mm. Removed an available shim to put the center of the RoC Heater at 229.6mm, i.e. 230.2 - 229.6 = 0.6mm below perfect.

Hugh's comment reminded me that to get the heater to fit, Betsy and I added a 1mm washer to raise the height of the assembly. In total we have 4mm of washers (2x1.5mm & 1x1mm).

I measured six units of [D1600104 SR3 ROC Actuator, Ceramic Heater Assy] at CIT on 4 March 2016, in their dirty state before baking. The serial number of the heater assy installed in LHO HAM5 is S1600180 (see https://ics-redux.ligo-la.caltech.edu/JIRA/browse/ASSY-D1500258-002). S1600180 measured 66.8 Ohms on 4 March 2016, in good agreement with the as-installed heater reading of 66.9Ω.