As Dave mentioned (38991), we have started building the model that will run the squeezer ASC, although we don't have cables to the actual hardware yet.

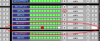

This is a simple model that takes in the 16 AS_A and AS_B RF42 signals and processes them into control signals for ZM1 and ZM2. The attached medm screenshot is not finished yet, but it shows the basic functionality.
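A minimal sketch of where the count of 16 comes from and what the matrix stage does with it. It assumes each WFS head has 4 quadrant segments, each demodulated into I and Q (2 heads x 2 phases x 4 segments = 16). The channel suffixes (`_I1`, `_Q1`, ...) and the output names `ZM1_PIT` etc. are illustrative assumptions, not copied from the real model.

```python
# Hypothetical sketch of the h1sqzwfs input/output shape.
# Assumption: 4 quadrant segments per WFS head, each demodulated into I and Q.

WFS_HEADS = ["AS_A", "AS_B"]  # the two AS WFS heads
PHASES = ["I", "Q"]           # demodulation quadratures
SEGMENTS = [1, 2, 3, 4]       # assumed quadrant segments per head

# Assumed actuator outputs: pitch and yaw for each of the two mirrors.
OUTPUTS = ["ZM1_PIT", "ZM1_YAW", "ZM2_PIT", "ZM2_YAW"]


def rf42_inputs(ifo="H1"):
    """Return the per-segment RF42 input channel names (illustrative naming)."""
    return [
        f"{ifo}:ASC-{head}_RF42_{phase}{seg}"
        for head in WFS_HEADS
        for phase in PHASES
        for seg in SEGMENTS
    ]


def zero_matrix(n_rows, n_cols):
    """Build an all-zero matrix as a list of rows."""
    return [[0.0] * n_cols for _ in range(n_rows)]


def apply_matrix(matrix, signals):
    """Multiply a matrix (list of rows) by a signal vector, like a matrix block."""
    if any(len(row) != len(signals) for row in matrix):
        raise ValueError("matrix width must match the number of input signals")
    return [sum(m * s for m, s in zip(row, signals)) for row in matrix]


if __name__ == "__main__":
    inputs = rf42_inputs()
    print(len(inputs), inputs[0])

    # Hypothetical 4x16 matrix: route only AS_A I1 to ZM1 pitch.
    m = zero_matrix(len(OUTPUTS), len(inputs))
    m[0][0] = 1.0
    print(dict(zip(OUTPUTS, apply_matrix(m, [1.0] * len(inputs)))))
```

In the real model the matrix elements and servo filters sit between these two stages and are set from the medm screen. The sketch only shows the dimensions involved (16 in, 4 out).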

Most of the difficulty was in getting the channel names to be what we wanted. We wanted to make this a new model rather than adding more parts to the ASC model, because the ASC model is already so big that we run into problems with the RCG. Because the squeezer WFS are really just an additional demod of the AS WFS, I wanted their naming to be consistent with the rest of the AS WFS channels (H1:ASC-AS_A_RF42, etc.).

The model is broken into two blocks. One has the top name ASC and contains only the standard WFS parts. The other is actually two nested blocks: SQZ, with a common block called ASC inside it that holds the matrices and servo filters. This was done so that those channel names would begin with SQZ-ASC. As Dave mentioned, we had to change the model name from h1sqzasc to h1sqzwfs so that we could use the name ASC inside the model without conflicting with the actual ASC model's DCU_ID channel.
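The naming effect of the two blocks can be sketched with a simplified rule: the top-named block supplies the subsystem after the IFO prefix, and any nested block names are joined with underscores in front of the part name. This is a hypothetical model of the convention for illustration, not the RCG's actual code, and the part names `INMATRIX` and `DOF1` are made up. The `collisions` helper just shows the kind of clash that had to be avoided: any channel name generated by two different models is a conflict.

```python
# Hypothetical, simplified model of how nested block names become channel names.
# Not the RCG implementation; part names used below are invented for illustration.


def channel_name(ifo, top_name, part, nested=()):
    """Build IFO:TOP-NESTED1_NESTED2_PART from a top-named block and its nesting."""
    path = "".join(f"{block}_" for block in nested)
    return f"{ifo}:{top_name}-{path}{part}"


def collisions(names_a, names_b):
    """Return channel names that two models would both try to create."""
    return sorted(set(names_a) & set(names_b))


if __name__ == "__main__":
    # Block 1: top name ASC, standard WFS parts -> consistent with existing AS WFS names.
    print(channel_name("H1", "ASC", "AS_A_RF42_I1"))
    # Block 2: SQZ with a common block ASC inside -> SQZ-ASC_... names.
    print(channel_name("H1", "SQZ", "INMATRIX", nested=("ASC",)))
```

Under this rule the two blocks produce disjoint name spaces (`ASC-...` and `SQZ-ASC_...`), which is why a clash has to come from somewhere else, such as a model-level channel derived from the model name.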

The models and the medm screen (still in progress) are in the squeezer userapps repo.