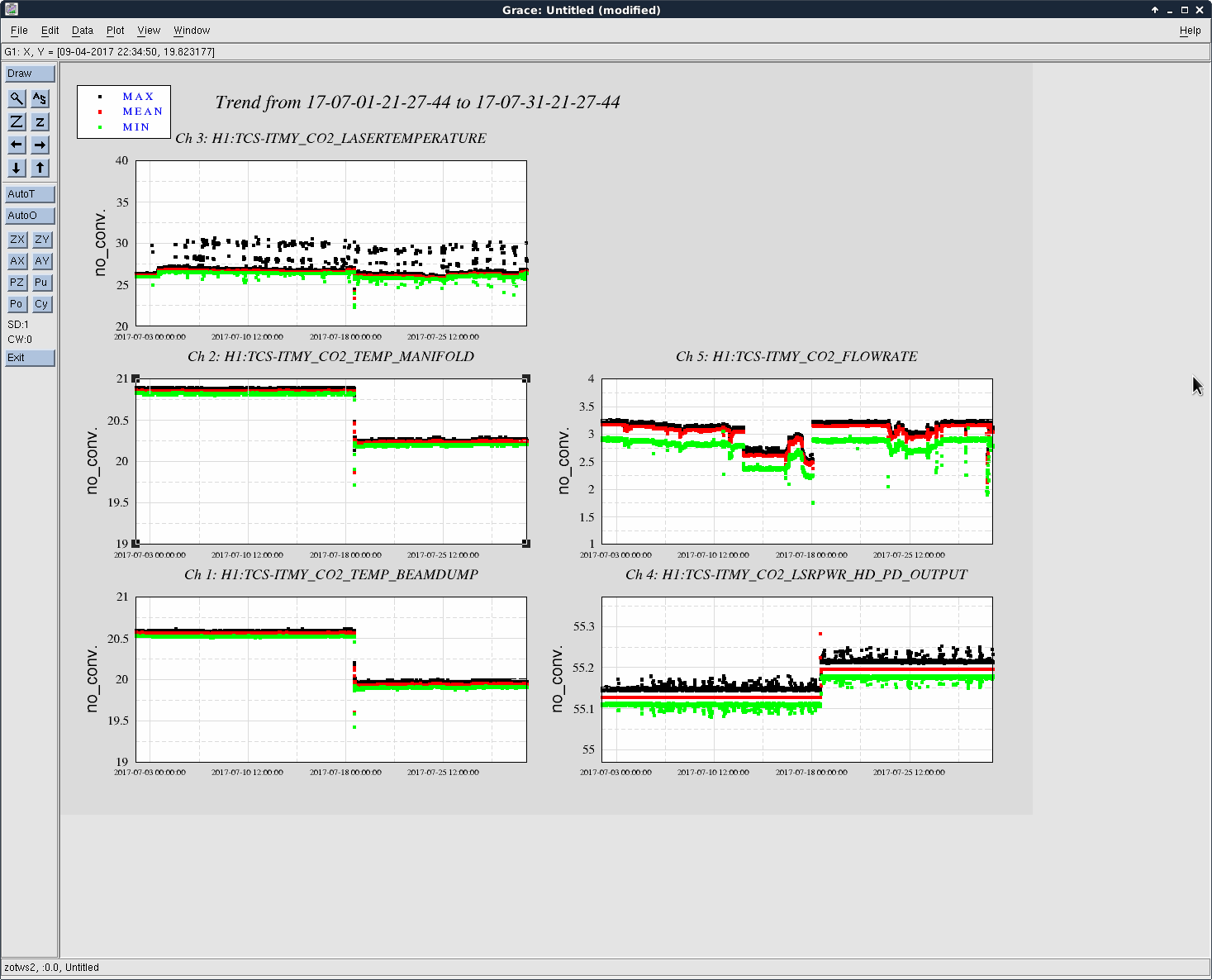

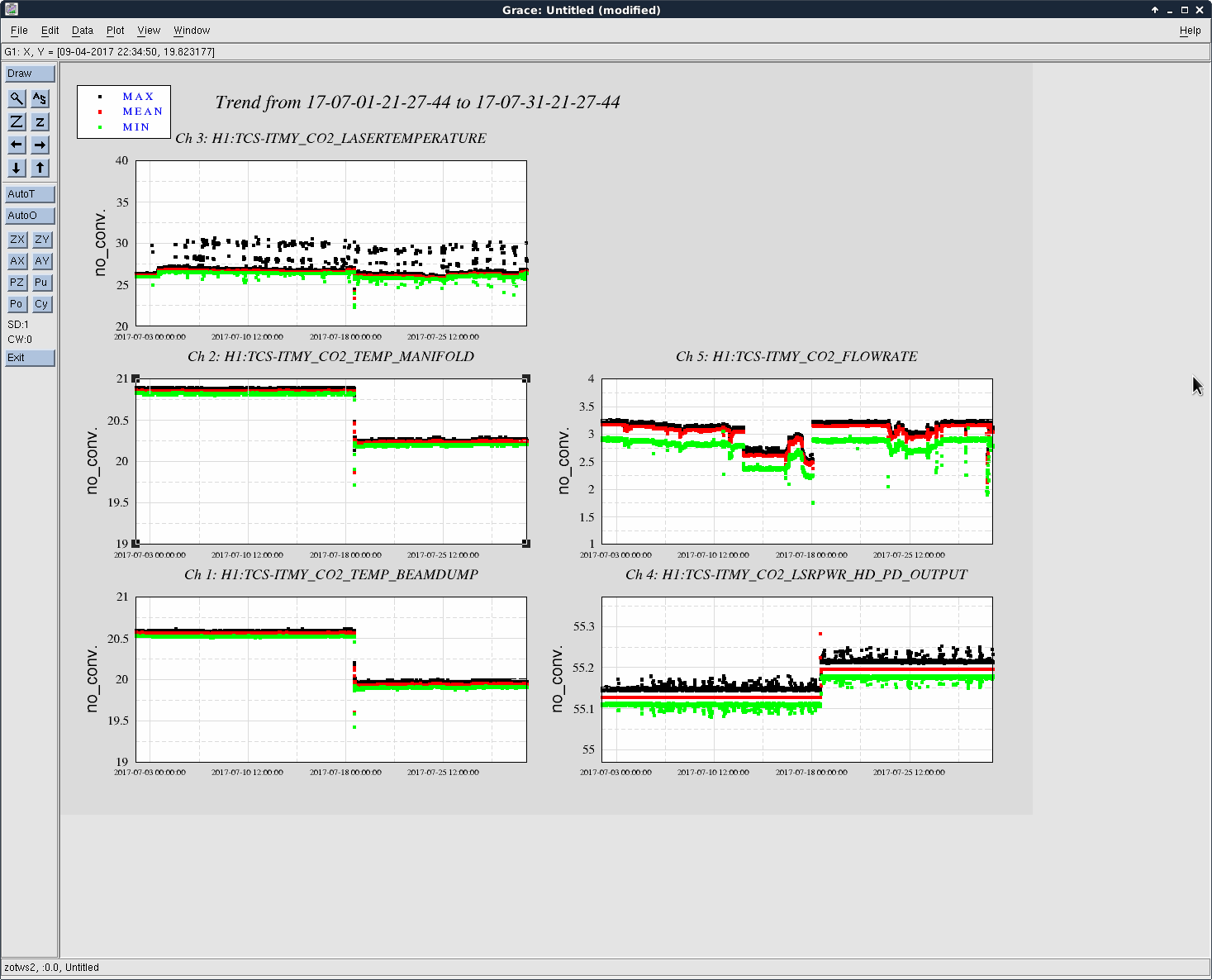

I've been having a look at the CO2Y flow meter issue (aLOG 32230). If we look back at the flow rate through July of this year, we see several periods where the "flow" has dropped by up to 30% (see attached time series showing H1:TCS-ITMY_CO2_FLOWRATE). There is zero change in any of the corresponding temperature sensors (laser, manifold, beam dump - all of which are attached to things that are cooled by the water), nor do we see anything in the laser output power.

We know, from measurements at LLO, that we expect the temperature of the laser to change in response to a change in flow rate. For example, we saw a 0.4C decrease in temperature in the LLO laser in response to a flow rate increase of 0.6GPM. There is nothing remotely commensurate here at LHO when the "flow" drops from 3.1GPM to 2.6GPM in mid-July.
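As a rough sanity check on what "commensurate" would look like, the LLO numbers can be scaled to the LHO flow drop. This is only a sketch: it assumes the temperature response is linear in flow rate and that the LHO laser would respond like the LLO one, neither of which has been verified here. The function and variable names are illustrative.

```python
# Reference response measured at LLO (numbers quoted in the text above).
LLO_DELTA_T_C = -0.4       # laser temperature change, deg C (a decrease)
LLO_DELTA_FLOW_GPM = 0.6   # flow rate change, GPM (an increase)


def expected_temp_change(flow_before_gpm, flow_after_gpm):
    """Estimate the laser temperature change in deg C for a flow change.

    ASSUMPTION: the response is linear in flow rate and the same at both sites.
    """
    sensitivity = LLO_DELTA_T_C / LLO_DELTA_FLOW_GPM  # deg C per GPM
    return sensitivity * (flow_after_gpm - flow_before_gpm)


# Apparent mid-July drop at LHO: 3.1 GPM -> 2.6 GPM
lho_estimate = expected_temp_change(3.1, 2.6)
print(f"Expected LHO temperature change: {lho_estimate:+.2f} C")
```

Under those assumptions a real 0.5GPM drop would warm the laser by roughly 0.3C, which is the scale of change that is absent from the temperature channels.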

There is a single observed drop in temperature around July 18. However, close examination shows that this does not correspond to a change in flow - the nearest change in flow rate is approximately 19 hours earlier.

This leads me to believe that the flow rate itself is unaffected and that the electronic interface to the flow meter (the CO2 laser interlock controller) is most likely the source of the problem.

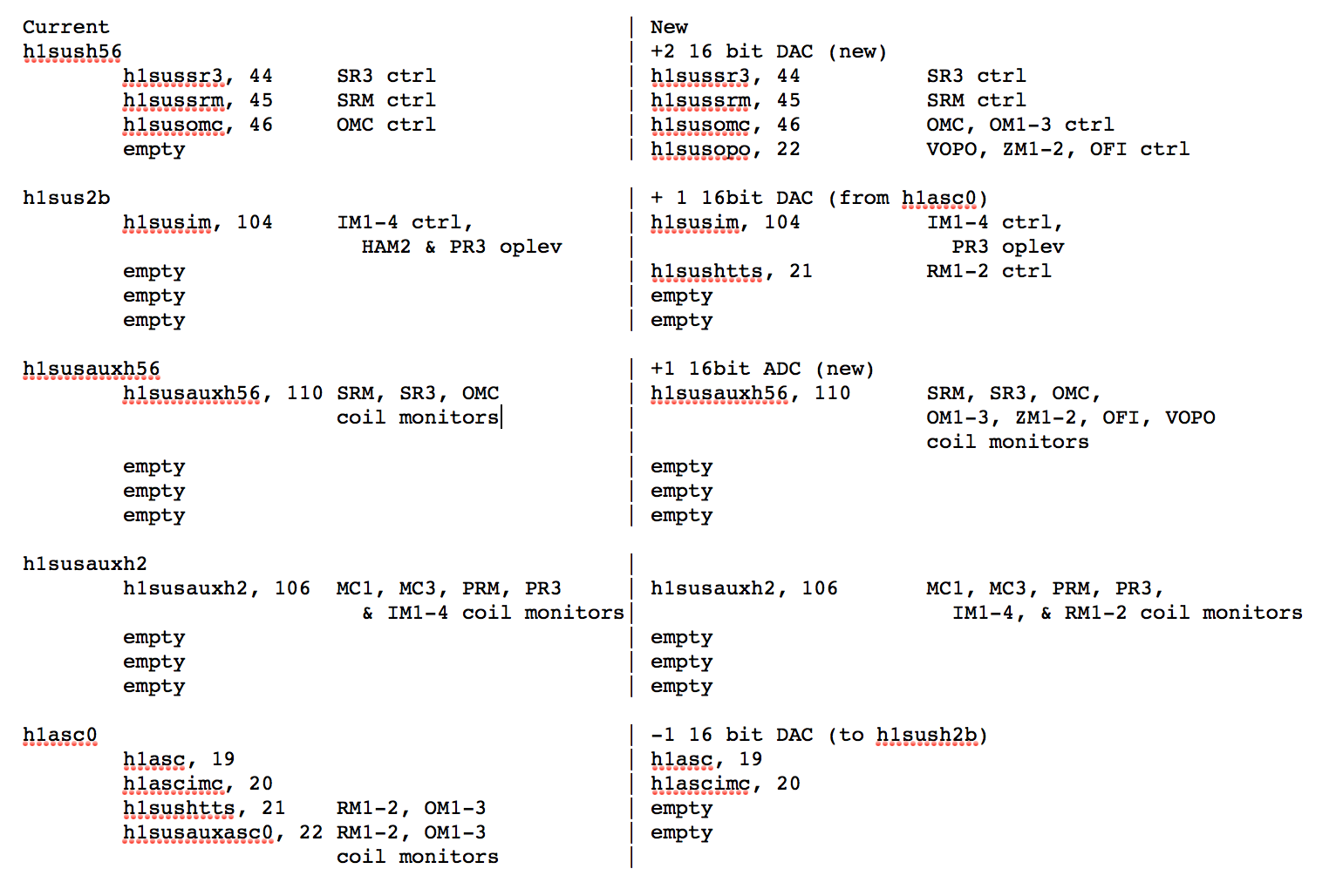

In words, I've:

- Created coil driver monitors in h1susauxh2 and h1susauxh56 for all new suspensions, re-arranging as mentioned above

- Updated the FOUROSEM_MONITOR_MASTER to use full filter banks instead of just test points and EPICS monitors

- Changed the stored channel name list to include the "VOLTMON_??_OUT" needed for filter banks, as opposed to the test point, which was just named "VOLTMON_??"

- Only IMs, RMs, and OMs, use this library part so there's no need to recompile other susaux models

- Changed the PR3 optical lever ADC channel assignment in the h1susim model on the h1sush2b computer and removed the HAM2 table optical lever (these were picked up by h1sush2b and sent out over the dolphin PCIE network to h1sush2a for consumption in the h1suspr3 model)

- Removed the OMs for the h1sushtts model (but the RMs remained), and changed the h1sushtts model to run on the second user model core (specific_cpu=3) of h1sush2b computer

- Changed the ASC control IPC receiver channels from shared memory (SHMEM) to over the dolphin PCIE network (PCIE)

- Terminated all of the OM inputs to the HTTS ODC vector

- Installed the OMs into the h1susomc model of h1sush56 computer

- Brought in an ADC1 block for the OMs

- Changed all the ASC control IPC receiver channels from SHMEM to PCIE

- Did NOT create any new ODC vector, so terminated all the status output from each HSSS_MASTER block

- Modified the h1asc model IPC outputs for the RMs and OMs to use PCIE instead of SHMEM

- Found that IM4 TRANS (sent by h1ascimc on h1asc0) was never received by the h1asc model (on h1asc0), so we hooked up the receiver... but are still suspicious how initial alignment ever worked without this...

- Created a new model h1susopo

- Installed ZMs using HSSS_MASTER.mdl

- Created new library parts VOPO_MASTER.mdl and OFIS_MASTER.mdl, and installed those as control in top-level model

I attach a bunch of screenshots of the finalized models to guide the eye -- and to guide the install at LLO.

The following models have been compiled, installed and are running:

- h1susauxh2

- h1susim

- h1sushtts

The following have been compiled, but we'll wait until next Two Tuesday to install them (due to work on replacing the SRM, and our need for more electronics)

- h1susomc

- h1susopo

- h1susauxh56

Next up -- MEDM screens, and especially, re-populating the RM's control systems.

The CER switch ports do not show any link lights. We will investigate tomorrow in the LVEA to ensure there is an intact cable run up to the cameras through the patch panels.