Ops Shift Log: 08/11/2017, Day Shift 15:00–23:00 UTC (08:00–16:00 PT)

State of H1: Locked at NLN, 29.2 W, 52.5 Mpc

Intent Bit: Observing

Support: N/A

Incoming Operator: Jim

Shift Summary: Turned IFO over to commissioning at start of shift. Lockloss during commissioning. Had difficulty relocking and needed to tweak the alignment in several places. Damped ETMX violin modes 1, 3, and 4 before fully relocking.

Back to Observing after clearing SDF Diffs.

Activity Log: Time - UTC (PT)

15:00 (08:00) Take over from Ed

15:12 (08:12) Dropped into Commissioning for PEM Injection work

15:55 (08:55) Richard – Going into the LVEA

15:56 (08:56) Robert – Going into the LVEA

16:41 (09:41) Platt Electric on site delivering EE supplies

17:07 (10:07) Parking Lot Solutions – On site to resurface parking areas

17:24 (10:24) Lockloss – Commissioning

18:58 (11:58) Robert swept the LVEA

20:34 (13:34) Relocked at NLN – Back to commissioning

20:45 (13:45) Robert – Going back into the LVEA

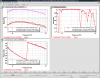

20:56 (13:56) Damped PI Mode-27

22:00 (15:00) Kentaro – Taking visitor to End-X

22:11 (15:11) Back to Observing

22:19 (15:19) Kentaro – Back from End-X

23:00 (16:00) Turn over to Jim