I'm looking again at the OSEM estimator we want to try on PR3 - see https://dcc.ligo.org/LIGO-G2402303 for description of that idea.

We want to make a yaw estimator, because that should be the easiest one for which we have a hope of seeing some difference (vertical is probably easier, but you can't measure it). One thing which makes this hard is that the cross coupling from L drive to Y readout is large.

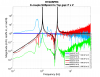

But - a quick comparison (first figure) shows that the L to Y coupling (yellow) does not match the Y to L coupling (purple). If this were a drive from the OSEMs, then this should match. This is actuatually a drive from the ISI, so it is not actually reciprocal - but the ideas are still relevant. For an OSEM drive - we know that mechanical systems are reciprocal, so, to the extent that yellow doesn't match purple, this coupling can not be in the mechanics.

Never-the-less, the similarity of the Length to Length and the Length to Yaw indicates that there is likely a great deal of cross-coupling in the OSEM sensors. We see that the Y response shows a bunch of the L resonances (L to L is the red TF); you drive L, and you see L in the Y signal. This smells of a coupling where the Y sensors see L motion. This is quite plausible if the two L OSEMs on the top mass are not calibrated correctly - because they are very close together, even a small scale-factor error will result in pretty big Y response to L motion.

Next - I did a quick fit (figure 2). I took the Y<-L TF (yellow, measured back in LHO alog 80863) and fit the L<-L TF to it (red), and then subtracted the L<-L component. The fit coefficient which gives the smallest response at the 1.59 Hz peak is about -0.85 rad/meter.

In figure 3, you can see the result in green, which is generally much better. The big peak at 1.59 Hz is much smaller, and the peak at 0.64 is reduced. There is more from the peak at 0.75 (this is related to pitch. Why should the Yaw osems see Pitch motion? maybe transverse motion of the little flags? I don't know, and it's going to be a headache).

The improved Y<-L (green) and the original L<-Y (purple) still don't match, even though they are much closer than the original yellow/purple pair. Hence there is more which could be gained by someone with more cleverness and time than I have right now.

figure 4 - I've plotted just the Y<-Y and Y<-L improved.

Note - The units are wrong - the drive units are all meters or radians not forces and torques, and we know, because of the d-offset in the mounting of the top wires from the suspoint to the top mass, that a L drive of the ISI has first order L and P forces and torques on the top mass. I still need to calculate how much pitch motion we expect to see in the yaw reponse for the mode at 0.75 Hz.

In the meantime - this argues that the yaw motion of PR3 could be reduced quite a bit with a simple update to the SUS large triple model, I suggest a matrix similar to the CPS align in the ISI. I happen to have the PR3 model open right now because I'm trying to add the OSEM estimator parts to it. Look for an ECR in a day or two...

This is run from the code {SUS_SVN}/HLTS/Common/MatlabTools/plotHLTS_ISI_dtttfs_M1_remove_xcouple'

-Brian