Have remained in observing. Adam M. has logged in remotely to prepare for hardware injections. No issues to report.

Thursday afternoon we had a brief but strong wind event; see the first plot: 2 days of minute trends. I moved the roaming STS2 a week or more ago, and it now resides at position Roam9. At the corner station the wind average touched 20 mph for maybe 90 minutes, with a stable direction of about 170°, or roughly from EndX toward the corner. No non-wind seismic activity is evident.

The second attachment shows the usual STS ASD comparisons between the roaming HAM5 STS and the detector-used ITMY STS; see 38184 for the Roam8 comparison, and you can follow back from there. Great news: for the X dof here at Roam9, the HAM5 STS shows several factors less floor tilting than ITMY starting at about 30 mHz; and the Y dof is maybe no worse. Is that band between 10 and 100 mHz too much?

So bottom line Roam9 shows promise!

Times: RefTime is low wind, 25 Aug 0930 UTC. High wind is 25 Aug 0130 UTC. XMLs are in /ligo/home/hugh.radkins/STS_Seismometer_Studies

Latest locations.

TITLE: 08/25 Day Shift: 15:00-23:00 UTC (08:00-16:00 PST), all times posted in UTC

STATE of H1: Observing at 51Mpc

OUTGOING OPERATOR: Ed

CURRENT ENVIRONMENT:

Wind: 3mph Gusts, 2mph 5min avg

Primary useism: 0.01 μm/s

Secondary useism: 0.08 μm/s

QUICK SUMMARY: No issues to report.

TITLE: 08/25 Owl Shift: 07:00-15:00 UTC (00:00-08:00 PST), all times posted in UTC

STATE of H1: Observing at 50Mpc

INCOMING OPERATOR: Patrick (subbing for Cheryl)

SHIFT SUMMARY:

LOG:

12:01UTC Confirmed with Livingston. Injections node working properly. 1 hour stand-down in effect.

07:22 Radar, at LLO, called to confirm a GRB. Verbal Alarms did not report; however, the INJ_TRANS node was activated into the 1 hr stand-down mode. That being said, tweaks were in process after a fresh lock.

TITLE: 08/25 Owl Shift: 07:00-15:00 UTC (00:00-08:00 PST), all times posted in UTC

STATE of H1: Observing at 48Mpc

OUTGOING OPERATOR: Jeff

CURRENT ENVIRONMENT:

Wind: 9mph Gusts, 8mph 5min avg

Primary useism: 0.01 μm/s

Secondary useism: 0.10 μm/s

QUICK SUMMARY:

H1 was locking when I arrived. Useism was up to 0.2 um/s and winds had been nearing 40 mph earlier. The OMC had to be massaged a bit before DC readout as it wouldn't lock. The AS90 (purple) trace looked like the victim of some kind of offset, as it was off scale until the power increase, where it came back down to familiar amplitudes. I ran a prelim a2l before setting the bit. The range was below 50 Mpc, as it was after I spoke to Aidan yesterday morning and he had me manually adjust the TCSX laser power from the preheat level (0.500 W) to 0.300 W (see his aLog). He told me that we would probably go to 0.350 W to claim a bit more of our already weakish range, so I did, and it looks as if it may be a good move, as our range has crept back up to 50+ Mpc. Tweaking PI mode 28 (as usual).

Lost lock at start of shift. Relocked quickly and without trouble. Shortly after, lost lock a second time, but was unable to relock. Completed initial alignment, after overcoming some difficulty with the MITCH_DARK step. Tried relocking but with no success. Breaks on FIND_IR (DIFF) or at CHECK_IR. Also having difficulty with IR DIFF not really being found. Can hand tune and get a good spike, but still cannot find IR with regularity. Winds are up and gusting into the mid-30s mph. X and Y microseism is up to 0.3 um/s. BRS-X is damping. High winds are forecast until around 22:00 PT. Holding the IFO in DOWN until the environment settles before trying to relock. Getting high dust alarms at End-Y. Given the high winds and heavy wildfire smoke, this is not unexpected.

FAMIS 6537 Added 100 mL H2O to crystal chiller. Neither chiller had a fault light lit and both chillers read that the water level was OK. Both canister filters appear white and free of debris.

TITLE: 08/24 Day Shift: 15:00-23:00 UTC (08:00-16:00 PST), all times posted in UTC

STATE of H1: Observing at 51Mpc

INCOMING OPERATOR: Jeff B.

SHIFT SUMMARY: Lost lock for unknown reason. Had to make a significant adjustment to PRM in YAW to lock PRMI. Made it to NLN. While running a2l PI mode 27 ran away and I lost lock again. Succeeded in making it back to NLN and did not attempt to run a2l again. Set to observing. Succeeded in damping PI mode 27 early on. Have transitioned the INJ_TRANS guardian back to INJECT_SUCCESS.

LOG:

14:47 UTC Closed terminal on nuc3 that was obscuring DARM on control room screenshots.

15:35 UTC Noticed DIAG_MAIN message stating that seismon is not updating. The GPS time does appear stuck at 1187621613.

16:10 UTC Betsy to optics lab.

16:26 UTC Lock loss.

16:32 UTC Gave Aidan remote access.

16:37 UTC Filiberto to mid Y.

17:03 UTC Filiberto back.

17:43 UTC NLN.

17:47 UTC Running a2l.

17:59 UTC Richard to CER.

18:05 UTC Lock loss. PI mode 27 ran away and I could not bring it back.

18:08 UTC Richard back.

18:31 UTC Visitor through gate to see Chandra.

18:32 UTC NLN.

18:35 UTC Observing. Damped PI mode 27.

18:59 UTC Nichole W. to mid X to retrieve Pelican cases.

19:09 UTC Nichole W. through main gate.

19:23 UTC Nichole W. back.

20:19 UTC Set INJ_TRANS to INJECT_SUCCESS.

20:51 UTC Elizabeth to mid Y to pick up parts.

21:14 UTC Elizabeth back.

21:26 UTC Travis and Liz to mechanical room (property control).

21:34 UTC Travis and Liz back.

21:50 UTC Jeff B. moving equipment from LSB to staging building.

22:08 UTC TJ to optics lab.

22:10 UTC Jeff B. done, moving yellow cart to OSB.

22:23 UTC TJ back.
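For anyone wanting to check when the seismon timestamp in the 15:35 UTC entry actually froze, a GPS time can be converted to UTC by hand. The following is only a minimal sketch: the function name `gps_to_utc` is hypothetical, and it assumes the fixed 18 s GPS-UTC offset that has applied since 1 January 2017, so it should not be used for earlier dates.

```python
from datetime import datetime, timedelta, timezone

# GPS time counts seconds from 1980-01-06 00:00:00 UTC with no leap seconds.
GPS_EPOCH = datetime(1980, 1, 6, tzinfo=timezone.utc)

# Assumption: GPS is ahead of UTC by 18 s (true from 2017-01-01 onward only).
GPS_UTC_OFFSET_S = 18


def gps_to_utc(gps_seconds):
    """Convert a GPS timestamp (seconds) to a UTC datetime (2017+ only)."""
    return GPS_EPOCH + timedelta(seconds=gps_seconds - GPS_UTC_OFFSET_S)


# Stuck value quoted in the 15:35 UTC log entry.
stuck = gps_to_utc(1187621613)
print(stuck.isoformat())
```

For the value in the log this gives 2017-08-24 14:53:15 UTC, i.e. roughly 42 minutes before the problem was noticed.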

Back to observing after lock loss at 16:26 UTC.

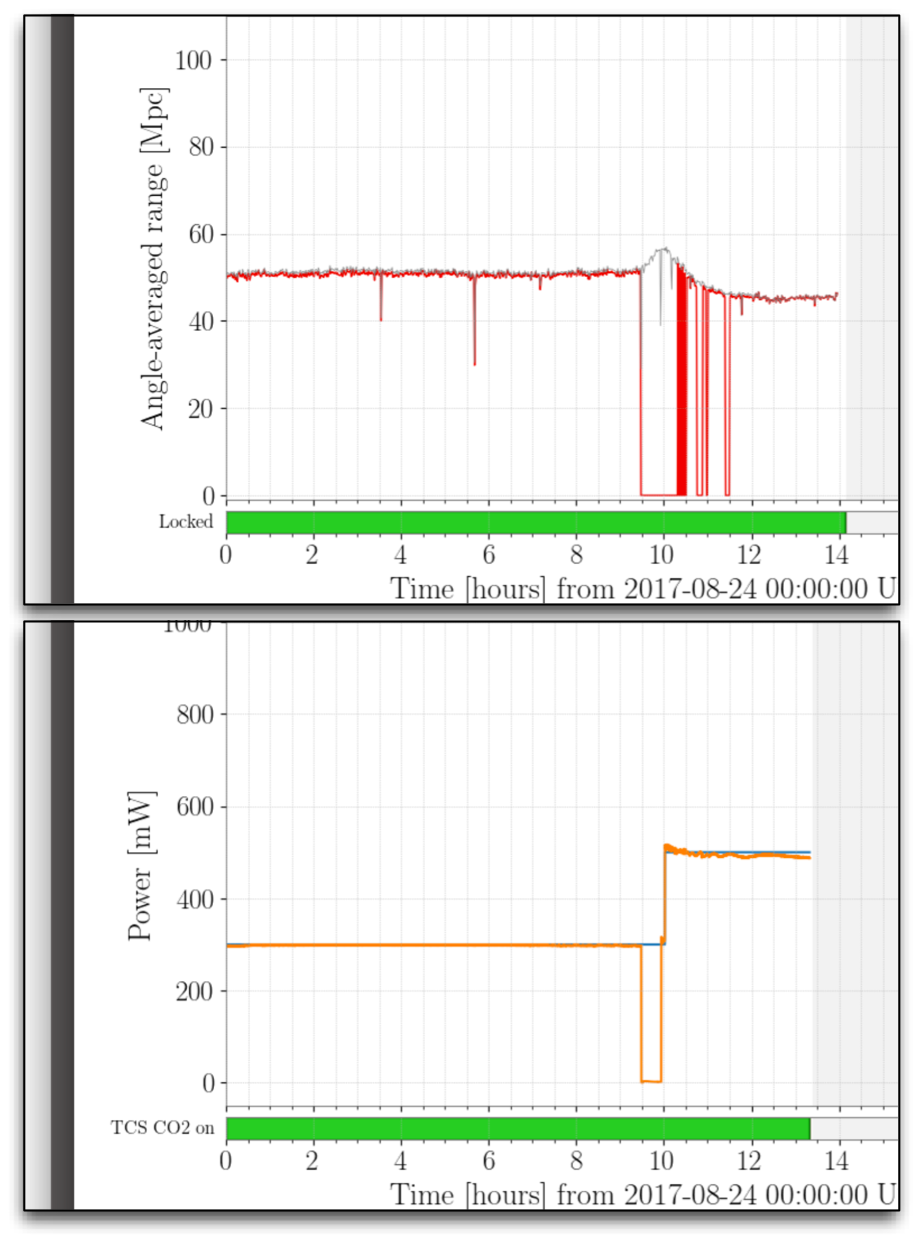

09:29UTC "TCSX CO2 LASER output off" verbal alarm happened. After a trip into the LVEA, where I found the FLOW light tripped on the controller, I went up to the mezzanine to check the chiller, which was NOT tripped. I went back down and reset the controller, and everything looked OK except for some SDF diffs in the TCS_ITMX_CO2_PWR, TCS_ITMX_CO2, and DIAG_SDF nodes. I called Jason on the phone and he didn't have too much to say about some new Guardian stuff that has been added. I accepted the diffs that were showing and took a couple of screenshots.

10:32UTC LASER won't stay locked. It keeps dropping/searching/locking...rinse, lather, repeat. When it locks, the ready bit goes GREEN and I set the Intention bit but it just keeps falling out over and over again.

10:35UTC maybe it will hold this time?

10:46UTC nope

We should look at the TCSX controller, as it would seem that the trip point is set differently from the TCSY controller. It might be that there are two resistor values that have to be changed.

Here's what the BNS Range plot and the HIFO_RANGE look like since the TCS issue began. There's an uptick in BNS range and then a drop into the mid-40 Mpc range. DARM is also a mess.

[Aidan (remotely), Ed]

I just got off the phone with Ed. It seems that after the TCS CO2X laser reacquired lock, the requested power went to a much higher level (500mW) than that required during steady-state operations (300mW). Given the long recovery time, I advised Ed to drop the request power manually back down to 300mW and then re-enter observing mode if we got kicked out.

Interestingly, the range went UP when the CO2X delivered power was 0.0W. We might try dropping the requested power for the remaining time in O2.

I agree with Peter - we should look at the laser controller first, and the flow meter second. We have a spare chassis that can be swapped in very quickly. At the same time it would be useful to double check the settings on the yellow electronic flow meter that's under the beam tube. If you pop the top off it there is a screen and the current/voltage output range can be checked so we know its output calibration.

Once a laser trips and is turned back on it needs at least 30mins before we try to lock it. Not giving it this time will cause the guardian script to mess around with its thermal state and it will take longer to lock. We should think about whether we should be losing detector time to wait to be able to lock this laser if it does not improve sensitivity. Maybe we aren't gaining a lot from that.

This improved range was due to a reduction in the broadband jitter coupling, which can be seen looking at coherence between the PSL bullseye pit signal and DARM or the IMC WFS B DC PIT coherence, shown in the attached screenshot. (For IMC WFS B there are some peaks from acoustics on the table which are not changed with the TCS settings.)

It is not surprising that this can be improved. It doesn't seem worth changing settings at this point in the run. This noise is subtracted by Jenne's Wiener filter cleaning of the data; we know that the spot positions can also improve this noise, and after the long vent we will have both a different PSL laser and hopefully less ITMX absorption.

Opened corresponding FRS Ticket 8846.

Rai W., Mike Z., Daniel S., Jeff K., Chandra R., Kyle R.

Today the TMDS Gas Delivery Table was transported to the X-end VEA and set up for operation. The actual Surface Discharge Ionizer was mounted on a stand for today's demonstration purposes. Nominally, it would be mounted to the dedicated TMDS port of BSC9's East Door. The Vent/Purge air supply was run and dry air supplied to the TMDS setup. Prior to exposing the Ionizer to the conditioned air supply, the plumbing connection at the Ionizer was decoupled and connected to a test setup that facilitated the measurement of > 0.3 micron and > 0.5 micron particulate in the conditioned air. The air line was then re-coupled to the Ionizer. An approximate 50/50 mix of Ethylene Glycol to Alcohol along with "chunks" of dry ice was used as a cold trap in the path.

Rai W. gave an overview of the theory and a detailed tutorial on the operation of the setup. Daniel S. and Jeff K. noted details related to the ion production, while Kyle R. and Chandra R. noted details related to the vacuum issues. The Ionizer setup performed satisfactorily (with caveats) at making comparable amounts of positive and negative ions. The plan now is to connect the Ionizer to BSC9 and perform an actual discharge of the ETMx during next Tuesday's maintenance day.

My notes from today:

1. Oscillations were noted and attributed to electronics design -> Daniel S. will address.

2. Rai W. requested that we provide a means of support to take the weight of the Ionizer when mounted to the 1 1/2" valve on the BSC -> Kyle R. will address.

3. Confirmation of the preferred means to pump the X-end VE to rough vacuum between cycles of discharge gas admission -> Chandra R. will address.

We will use the QDP80 to pump down the chamber between discharge cycles, with the turbo spun down and, as needed, use purge air to regulate the pump-side pressure to match the chamber pressure so as not to break the 10" gate valve, which is rated for 10 Torr differential pressure. Attached is a plot from the LLO discharge. They spun the turbo up and down between cycles, but decided later it was not worth the trouble.
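The pressure-matching rule above amounts to keeping the difference between pump-side and chamber pressure inside the valve rating. A minimal sketch of that check follows; the function name, the 1 Torr safety margin, and the example pressures are all hypothetical and are not part of the actual procedure.

```python
# Rating quoted in the entry above for the 10" gate valve.
GATE_VALVE_MAX_DP_TORR = 10.0


def differential_ok(pump_side_torr, chamber_torr, margin_torr=1.0):
    """Return True if |pump - chamber| is within the rating minus a margin.

    margin_torr is a hypothetical safety margin, not a documented value.
    """
    dp = abs(pump_side_torr - chamber_torr)
    return dp <= GATE_VALVE_MAX_DP_TORR - margin_torr


# Made-up example pressures, in Torr.
print(differential_ok(25.0, 20.0))   # 5 Torr difference
print(differential_ok(35.0, 20.0))   # 15 Torr difference
```

The absolute value is used because the limit applies regardless of which side of the valve is at higher pressure.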

Refer to Figure 1 of T1400713, the TMDS design document, for elements of the setup. A couple of useful videos of the oscilloscope readouts during the experiment:

Lesson 1: Control and readback of the electrometer from the TMDS interface chassis, D1500152. Rai has set up the readback of the electrometer with the square-wave input shown on the blue trace, and the electrometer readback in yellow. One is looking for an even balance in voltage from the positive and negative ions. The electrometer has a negative voltage bias at the time of recording, so it appears as though we're getting more positive than negative ions, but, rest assured, we're good.

Lesson 2: Demonstration of ionic discharge against TMDS chamber walls, if the HV supply to the ion generator is too high. The readback of the HV current is shown on the yellow trace. The video starts out with the ion generator discharging, as is evident by the rattiness of the waveform. In the last few seconds of the video, he reduces the VARIAC HV transformer gain, bringing the ion generator back to the desired level.

Lesson 3: Demonstration of electrometer readback once HV voltage is reduced. Once the HV is tuned with the VARIAC, with the initial max amplitude of the square wave generator, the square wave amplitude may be reduced to ~ 3 V pkpk (assuming a flow rate of about 30-50 [mL/s ?? not sure about those units]). Once we did this, we saw evidence for a ~3 MHz oscillation on the electrometer readback. Investigating the circuit drawing for the electrometer board (D1500061), Daniel and Mike agreed to replace the readback's output resistors R9 & R10 with ~100 [ohm] resistors. This has been done already, and the electrometer has been reinstalled.

Lesson 4: Final setup of readback / monitor system, in the "ready to discharge into chamber" configuration. Once again, Rai demonstrates the control of the HV VARIAC and the desire to keep the ion generator current readback free from any rattiness in its waveform, which is indicative of ionic breakdown discharge against the TMDS chamber walls.

I attach a ton more pictures of the setup as well. Hopefully the filenames are a good enough caption.

Desired gas flow rate 30 - 50 Liters per Minute (LPM)
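Since the units of the flow rate were in question in the Lesson 3 note, it may help to see what 30 - 50 LPM corresponds to in mL/s. This is plain unit arithmetic (1 L = 1000 mL, 1 min = 60 s); the function name is hypothetical.

```python
def lpm_to_ml_per_s(lpm):
    """Convert liters per minute to milliliters per second."""
    return lpm * 1000.0 / 60.0


low = lpm_to_ml_per_s(30)
high = lpm_to_ml_per_s(50)
print(f"30 LPM = {low:.0f} mL/s, 50 LPM = {high:.0f} mL/s")
```

So the desired range is about 500 to 833 mL/s, well over ten times larger than 30-50 mL/s would be.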

J. Kissel

I've processed the data from LHO aLOG 38232, in which I was looking to see if the complex, high frequency dynamics found in the UIM (L1) stage of H1 SUSETMY (see LHO aLOG 31603) also appear in the other QUADs. Plots are attached; note that I've used the former 2016-11-17 data for ETMY. To guide the eye, I show the calibration group's dynamical model used during O2. Data is shown with a coherence threshold of 0.75 or greater. I've not yet compared this against the unphysical, fitted update to the QUAD model that Brett developed for H1 SUS ETMY in CSWG aLOG 11197.

It looks like:

- All suspensions see the "unknown" ~165 Hz resonance, though interestingly ITMY's mode appears to be split. ETMX and ITMY's are *strikingly* similar.

- Only ETMY and ITMX show what Norna suspects is the "UIM blade bending mode" at ~110 Hz. ETMY's is indeed the ugliest.

- All but ETMX show evidence for resonances between 290 and 340 Hz, which are likely the (Suspension Point to Top Mass) wire violin mode fundamentals weakly excited by the UIM excitation (see LHO aLOG 24917, T1300876).

- All suspensions show their (UIM to PUM) wire violin mode fundamentals between 410 and 470 Hz (see LHO aLOG 24917, T1300876).

- ETMY and ITMY's wire violin modes are *strikingly* similar; ITMX's modes are surprisingly low Q.

- We don't see the TOP to UIM wire violin modes, likely because I didn't excite up to high enough a frequency; they're between ~500-510 Hz for ETMY (modeled at 495 Hz).

So now the question becomes -- what's the physical mechanism for this huge mystery resonance that's seen virtually identically in all QUADs? It seems quite a fundamental feature. Why is it split on ITMX? It would be nice to have a similarly detailed measurement of L1's QUADs, but all evidence from calibration measurements on L1's ETMY UIM stage points to this same feature (see the discrepancy between model and measurement for the UIM stage, e.g. in 2016-11-25_L1_SUSETMY_L1_actuation_stages.pdf, LHO aLOG 29899). Further, it's *still there* before and after their Bounce/Roll mode Dampers (BRDs) have been installed (i.e. the same feature is seen in O1 and O2 CAL measurements; the above linked measurement was after BRD install).
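For readers reproducing the plots, the coherence cut mentioned above (keep only points with coherence of 0.75 or greater) can be sketched as follows. This is not the actual analysis script; the function name and the sample numbers are made up for illustration.

```python
def select_coherent(freqs, tf, coh, threshold=0.75):
    """Keep (frequency, transfer function) pairs whose coherence >= threshold."""
    return [(f, h) for f, h, c in zip(freqs, tf, coh) if c >= threshold]


# Made-up sample points: frequency [Hz], complex TF value, coherence.
freqs = [100.0, 165.0, 300.0, 450.0]
tf = [1.0 + 0.5j, 0.2 - 0.1j, 0.05 + 0.0j, 0.01 - 0.02j]
coh = [0.90, 0.60, 0.75, 0.99]

kept = select_coherent(freqs, tf, coh)
print([f for f, _ in kept])
```

Points failing the cut are dropped rather than interpolated, so gaps in a plotted trace mark frequencies where the measurement was not trustworthy.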

If anyone needs to fit this data to try out their FDIDENT skills, the data from the above concatenated UIM to TST transfer functions is attached, in the same format as before for ETMY. The analysis script lives here:
/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/O2/H1/Scripts/FullIFOActuatorTFs/process_H1SUSQUAD_L1_HFDynamicsTest_20170814.m

I'm attaching the l2l st2 gs13 measurements for ITMX that we used for closeout after install. The 160 Hz feature doesn't seem to show up on the ISI. The 300 Hz feature maybe does? I don't think it's very conclusive.

J. Kissel I've checked the last suspensions for any sign of rubbing. Preliminary results look like "Nope." The data has been committed to SUS repo here: /ligo/svncommon/SusSVN/sus/trunk/HAUX/H1/IM1/SAGM1/Data/ 2017-08-08_1629_H1SUSIM1_M1_WhiteNoise_L_0p01to50Hz.xml 2017-08-08_1629_H1SUSIM1_M1_WhiteNoise_P_0p01to50Hz.xml 2017-08-08_1629_H1SUSIM1_M1_WhiteNoise_Y_0p01to50Hz.xml /ligo/svncommon/SusSVN/sus/trunk/HAUX/H1/IM2/SAGM1/Data/ 2017-08-08_1714_H1SUSIM2_M1_WhiteNoise_L_0p01to50Hz.xml 2017-08-08_1714_H1SUSIM2_M1_WhiteNoise_P_0p01to50Hz.xml 2017-08-08_1714_H1SUSIM2_M1_WhiteNoise_Y_0p01to50Hz.xml /ligo/svncommon/SusSVN/sus/trunk/HAUX/H1/IM3/SAGM1/Data/ 2017-08-08_1719_H1SUSIM3_M1_WhiteNoise_L_0p01to50Hz.xml 2017-08-08_1719_H1SUSIM3_M1_WhiteNoise_P_0p01to50Hz.xml 2017-08-08_1719_H1SUSIM3_M1_WhiteNoise_Y_0p01to50Hz.xml /ligo/svncommon/SusSVN/sus/trunk/HAUX/H1/IM4/SAGM1/Data/ 2017-08-08_1741_H1SUSIM4_M1_WhiteNoise_L_0p01to50Hz.xml 2017-08-08_1741_H1SUSIM4_M1_WhiteNoise_P_0p01to50Hz.xml 2017-08-08_1741_H1SUSIM4_M1_WhiteNoise_Y_0p01to50Hz.xml /ligo/svncommon/SusSVN/sus/trunk/HTTS/H1/OM1/SAGM1/Data/ 2017-08-08_1544_H1SUSOM1_M1_WhiteNoise_L_0p01to50Hz.xml 2017-08-08_1544_H1SUSOM1_M1_WhiteNoise_P_0p01to50Hz.xml 2017-08-08_1544_H1SUSOM1_M1_WhiteNoise_Y_0p01to50Hz.xml /ligo/svncommon/SusSVN/sus/trunk/HTTS/H1/OM2/SAGM1/Data/ 2017-08-08_1546_H1SUSOM2_M1_WhiteNoise_L_0p01to50Hz.xml 2017-08-08_1546_H1SUSOM2_M1_WhiteNoise_P_0p01to50Hz.xml 2017-08-08_1546_H1SUSOM2_M1_WhiteNoise_Y_0p01to50Hz.xml /ligo/svncommon/SusSVN/sus/trunk/HTTS/H1/OM3/SAGM1/Data/ 2017-08-08_1625_H1SUSOM3_M1_WhiteNoise_L_0p01to50Hz.xml 2017-08-08_1625_H1SUSOM3_M1_WhiteNoise_P_0p01to50Hz.xml 2017-08-08_1625_H1SUSOM3_M1_WhiteNoise_Y_0p01to50Hz.xml /ligo/svncommon/SusSVN/sus/trunk/HTTS/H1/RM1/SAGM1/Data/ 2017-08-08_1516_H1SUSRM1_M1_WhiteNoise_L_0p01to50Hz.xml 2017-08-08_1516_H1SUSRM1_M1_WhiteNoise_P_0p01to50Hz.xml 2017-08-08_1516_H1SUSRM1_M1_WhiteNoise_Y_0p01to50Hz.xml 
/ligo/svncommon/SusSVN/sus/trunk/HTTS/H1/RM2/SAGM1/Data/ 2017-08-08_1520_H1SUSRM2_M1_WhiteNoise_L_0p01to50Hz.xml 2017-08-08_1520_H1SUSRM2_M1_WhiteNoise_P_0p01to50Hz.xml 2017-08-08_1520_H1SUSRM2_M1_WhiteNoise_Y_0p01to50Hz.xml Will post results in due time, but my measurement processing / analysis / aLOGging queue is severely backed up.

J. Kissel

Processed the IM1, IM2, and IM3 data from above. Unfortunately, it looks like I didn't actually save an IM4 Yaw transfer function, so I don't have plots for that suspension. I can confirm that IM1, IM2, and IM3 do not look abnormal compared with their past measurements, other than a scale factor gain. Recall that the IMs had their coil driver range reduced in Nov 2013 (see LHO aLOG 8758), but otherwise I can't explain the electronics gain drift, other than to suspect OSEM LED current decay, as has been seen to a much smaller degree in other, larger suspension types. Will try to get the last DOF of IM4 soon.
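One simple way to put a number on an overall scale factor between a new and a reference transfer function is the median of the point-by-point magnitude ratios. The sketch below is an assumption about how one might do this, not the method used for the attached plots; the function name and the sample magnitudes are made up.

```python
from statistics import median


def scale_factor(mag_new, mag_ref):
    """Median ratio of new to reference TF magnitudes at matching frequencies.

    The median is used so a few outlying points (e.g. near a resonance)
    do not dominate the estimate. Reference points equal to zero are skipped.
    """
    ratios = [n / r for n, r in zip(mag_new, mag_ref) if r != 0]
    return median(ratios)


# Made-up magnitudes sampled at the same frequencies.
mag_ref = [1.0, 2.0, 4.0, 0.5]
mag_new = [0.8, 1.6, 3.2, 0.4]

k = scale_factor(mag_new, mag_ref)
print(f"estimated scale factor: {k:.2f}")
```

If the ratios are flat across frequency, the difference is consistent with a pure gain change; a frequency-dependent ratio would point to a change in the dynamics instead.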

All HTTSs are clear of rubbing. Attached are

- the individual measurements, to show OSEM basis transfer function results,

- each suspension's transfer functions as a function of time,

- all suspensions' (plus an L1 RM) latest TFs, just to show how they're all nicely the same (now).

Strangely, and positively, though RM2 has always shown an extra resonance in YAW (the last measurement was in 2014, after the HAM1 vent work described in LHO aLOG 9211), that extra resonance has now disappeared, and RM2 looks like every other HTTS. Weird, but at least a good weird!

J. Kissel Still playing catch up -- I was finally able to retake IM4 Y. Processed data is attached. Still confused about scale factors, but the SUS is definitely not rubbing, and its frequency dependence looks exactly as it did 3 years ago.