model restarts logged for Wed 23/Aug/2017 - Wed 16/Aug/2017 No restarts reported

[Aidan]

I've been investigating the apparent increase in range that occurred this morning when CO2X was turned off. This would seem to indicate that either (a) the CO2 laser is somehow misaligned or deformed and is producing a very poor lens, or (b) the present level of lensing (SELF heating + CO2) is too much. It's worth noting that the requested (and delivered) levels of CO2X laser power haven't changed significantly over the last four or five months.

If we assume (b) and also that the better range in the past is partially due to a better thermal state, then the conclusion is that either the effective CO2 lens or the SELF heating lens has increased.

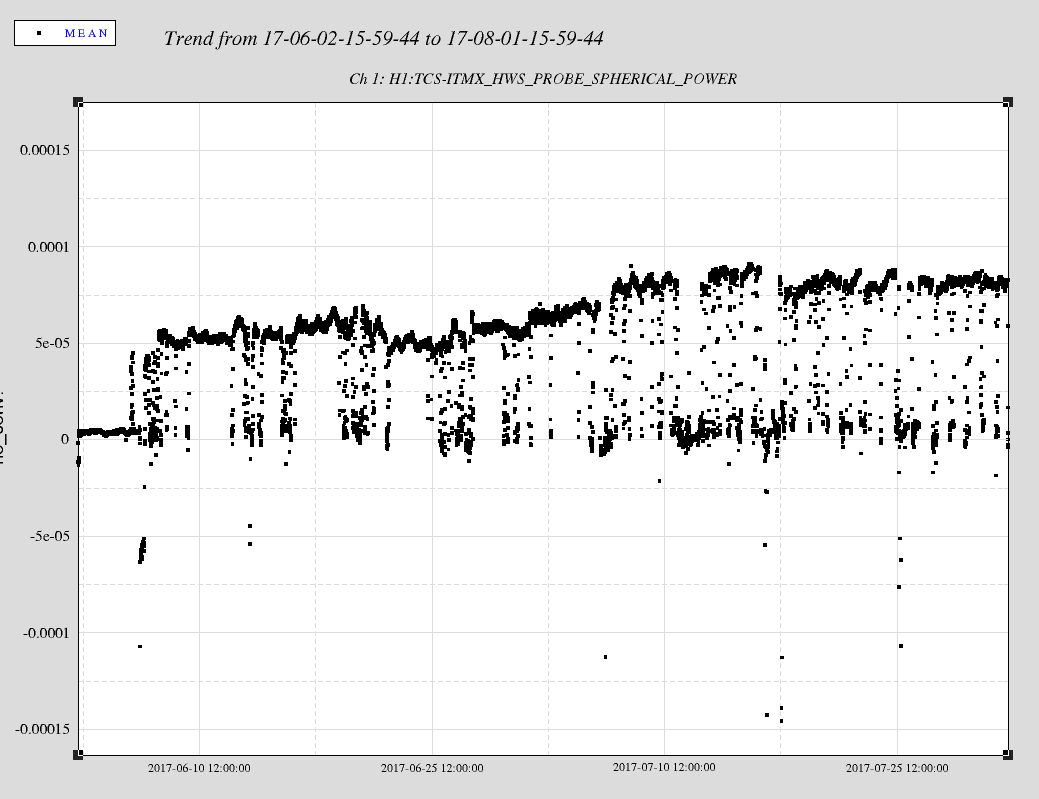

I've gone back and looked at the HWSX spherical power data (H1:TCS-ITMX_HWS_PROBE_SPHERICAL_POWER) from two months around the period of the earthquake. The relevant value here is the spherical power change per lock: the difference between the upper and lower levels that are obvious in the time series, which shows the transient lens from SELF heating and CO2 laser changes. There is an interesting period from 22-June to 7-July where this trends upwards by approximately 25 micro-diopters (or about 50%). This indicates that the apparent lens change per lock seen by the HWS is increasing significantly.
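The per-lock spherical power change described above could be estimated along the following lines. This is only a minimal sketch, not the analysis that was actually run: the function name `spherical_power_steps` is hypothetical, it assumes the spherical power samples and a matching per-sample lock flag have already been retrieved by some other means, and it takes the median of each segment as the "level", which ignores the thermal transient at the start of each lock.

```python
from itertools import groupby
from statistics import median


def spherical_power_steps(power, locked):
    """Return one step per lock: locked level minus the preceding unlocked level.

    power  -- sequence of spherical power samples (e.g. in micro-diopters)
    locked -- sequence of booleans, True where the IFO was locked
    Both names and the median-based level estimate are illustrative assumptions.
    """
    # Split the series into consecutive runs that share the same lock state.
    runs = []
    for state, group in groupby(zip(locked, power), key=lambda pair: pair[0]):
        runs.append((state, [p for _, p in group]))

    # For every unlocked -> locked transition, difference the two levels.
    steps = []
    for prev, cur in zip(runs, runs[1:]):
        if not prev[0] and cur[0]:
            steps.append(median(cur[1]) - median(prev[1]))
    return steps


if __name__ == "__main__":
    # Synthetic numbers for illustration only; these are not HWS data.
    locked = [False] * 3 + [True] * 3 + [False] * 2 + [True] * 3
    power = [0.0, 0.0, 0.0, 50.0, 50.0, 50.0, 0.0, 0.0, 75.0, 75.0, 75.0]
    print(spherical_power_steps(power, locked))
```

Trending the returned list against time would show whether the step per lock is growing, which is the quantity of interest here.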

Two factors also play into this. (1) the CO2 laser is being turned on and off during this time (to keep the thermal state warm when the IFO has lost lock). However there is no significant change in the CO2 power level behaviour over the corresponding time range. (2) we know there is already a point absorber on ITMX as well as uniform absorption. The spherical power value is fitted to the low spatial-frequency lens. The presence of a known high spatial frequency point in the wavefront complicates this. For example, it's possible that the HWS alignment may be drifting and the point absorber is contributing more to the total spherical power value. This obviously needs to be investigated further by looking at the stored gradient fields.

However, it does seem that there is a real effect going on as the range got much better (~15% better) when the CO2 laser turned off.

One thing to note is that if the IFO beam is moving across the surface of the optic (specifically, across the point absorber), then we would see a 50% increase in the amount of absorbed power if the IFO beam moved by dr = 7-10mm. This is based upon the estimate of the point absorber at a radius (r_point) of something like 36-44mm from the center of the optic. (see aLOG 35071 and aLOG 34868)

Specifically: dP_abs = exp(-2*[(r_point - dr)/w0]^2) - exp(-2*[(r_point)/w0]^2)

relative_change = dP_abs/exp(-2*[(r_point)/w0]^2)
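The expression above can be evaluated numerically with a short script. This is a sketch under stated assumptions: the beam radius w0 is not given in this entry, so the value of 53 mm used below is an assumed, approximate figure for the ITM beam size, and the function name `relative_absorption_change` is hypothetical. The r_point and dr values are the ranges quoted above.

```python
import math


def relative_absorption_change(r_point_mm, dr_mm, w0_mm):
    """Fractional change in power absorbed by a point absorber at radius
    r_point when a Gaussian beam of radius w0 moves dr towards it."""
    before = math.exp(-2.0 * (r_point_mm / w0_mm) ** 2)
    after = math.exp(-2.0 * ((r_point_mm - dr_mm) / w0_mm) ** 2)
    return (after - before) / before


W0_MM = 53.0  # ASSUMED beam radius on the ITM; not stated in this entry

if __name__ == "__main__":
    for r_point in (36.0, 40.0, 44.0):   # range quoted for r_point
        for dr in (7.0, 10.0):           # range quoted for dr
            change = relative_absorption_change(r_point, dr, W0_MM)
            print(f"r_point={r_point:.0f} mm, dr={dr:.0f} mm: {100 * change:.0f}% change")
```

Algebraically the ratio reduces to relative_change = exp(2*(2*r_point*dr - dr^2)/w0^2) - 1, so the result depends on w0 only through the combination in the exponent and is fairly sensitive to the assumed beam radius.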

Laser Status:

SysStat is good

Front End Power is 33.8W (should be around 30 W)

HPO Output Power is 154.8W

Front End Watch is GREEN

HPO Watch is GREEN

PMC:

It has been locked 9 days, 0 hr 11 minutes (should be days/weeks)

Reflected power = 16.77Watts

Transmitted power = 57.41Watts

PowerSum = 74.17Watts.

FSS:

It has been locked for 0 days 0 hr and 3 min (should be days/weeks)

TPD[V] = 0.9646V (min 0.9V)

ISS:

The diffracted power is around 3.1% (should be 3-5%)

Last saturation event was 0 days 0 hours and 34 minutes ago (should be days/weeks)

Possible Issues: None

Ran through the daily svn check. The ISC_LOCK.py file differs by the addition of two log messages. The file is owned by Sheila. The h1tcscs_OBSERVE.snap file differs only by the date in the header (diff below). Not sure if I can revert while in observing mode.

-Time: Tue Apr 4 18:11:09 2017

+Time: Thu Aug 24 03:19:07 2017

TITLE: 08/24 Day Shift: 15:00-23:00 UTC (08:00-16:00 PST), all times posted in UTC

STATE of H1: Observing at 47Mpc

OUTGOING OPERATOR: Ed

CURRENT ENVIRONMENT:

Wind: 19mph Gusts, 17mph 5min avg

Primary useism: 0.05 μm/s

Secondary useism: 0.09 μm/s

QUICK SUMMARY:

Range is trending back up.

TITLE: 08/24 Owl Shift: 07:00-15:00 UTC (00:00-08:00 PST), all times posted in UTC

STATE of H1: Observing at 46Mpc

INCOMING OPERATOR: Patrick (subbing for Cheryl)

SHIFT SUMMARY:

Shift mostly quiet until TCSX acted up. Aidan is making an aLOG that includes the latest power adjustment made to recover the original range. I'm not feeling well this morning so Patrick has generously granted me early leave.

LOG:

Verbal Alarm at 13:25

Not sure if the features seen in ETMX and ETMY are of significance.

09:29UTC "TCSX CO2 LASER output off" verbal happened. After a trip into the LVEA, where I found the FLOW light tripped on the controller, I went into the mezzanine to check the chiller, which was NOT tripped. I went back down and reset the controller, and everything looked ok except for some SDF diffs in the TCS_ITMX_CO2_PWR, TCS_ITMX_CO2, and DIAG_SDF nodes. I called Jason on the phone and he didn't have too much to say about some new Guardian stuff that has been added. I accepted the diffs that were showing and took a couple of screenshots.

10:32UTC LASER won't stay locked. It keeps dropping/searching/locking...rinse, lather, repeat. When it locks, the ready bit goes GREEN and I set the Intention bit but it just keeps falling out over and over again.

10:35UTC maybe it will hold this time?

10:46UTC nope

We should look at the TCSX controller, as it would seem that the trip point is set differently from the TCSY controller. It might be that there are two resistor values that have to be changed.

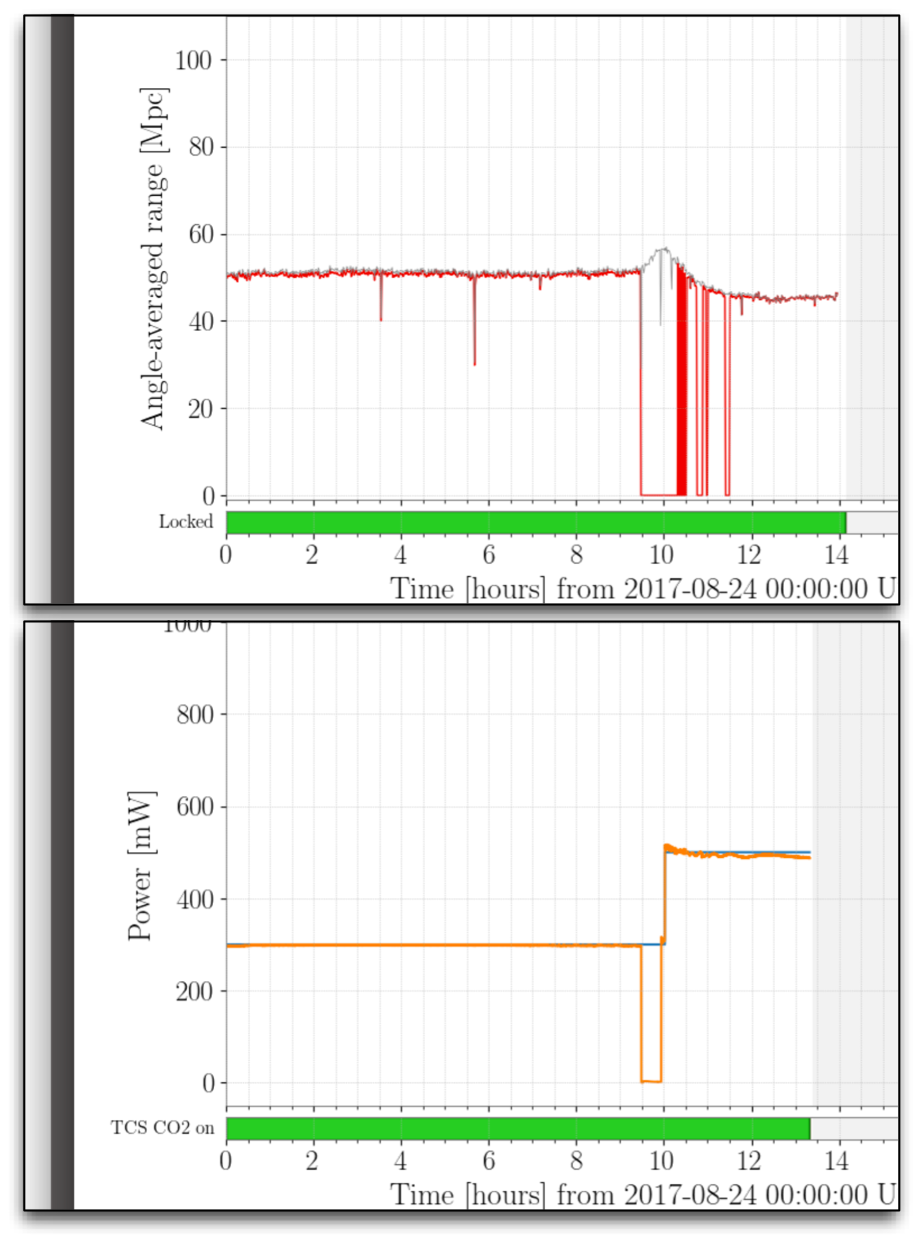

Here's what the BNS Range plot and the HIFO_RANGE look like since the TCS issue began. There's an uptick in BNS range and then a drop into the mid-40Mpc range. DARM is also a mess.

[Aidan (remotely), Ed]

I just got off the phone with Ed. It seems that after the TCS CO2X laser reacquired lock, the requested power went to a much higher level (500mW) than that required during steady-state operations (300mW). Given the long recovery time, I advised Ed to drop the requested power manually back down to 300mW and then re-enter observing mode if we got kicked out.

Interestingly, the range went UP when the CO2X delivered power was 0.0W. We might try dropping the requested power for the remaining time in O2.

I agree with Peter - we should look at the laser controller first, and the flow meter second. We have a spare chassis that can be swapped in very quickly. At the same time it would be useful to double check the settings on the yellow electronic flow meter that's under the beam tube. If you pop the top off it there is a screen and the current/voltage output range can be checked so we know its output calibration.

Once a laser trips and is turned back on it needs at least 30mins before we try to lock it. Not giving it this time will cause the guardian script to mess around with its thermal state and it will take longer to lock. We should think about whether we should be losing detector time to wait to be able to lock this laser if it does not improve sensitivity. Maybe we aren't gaining a lot from that.

This improved range was due to a reduction in the broadband jitter coupling, which can be seen in the coherence of DARM with either the PSL bullseye PIT signal or IMC WFS B DC PIT, shown in the attached screenshot. (For IMC WFS B there are some peaks from acoustics on the table which are not changed with the TCS settings.)

It is not surprising that this can be improved. It doesn't seem worth changing settings at this point in the run. This noise is subtracted by Jenne's Wiener filter cleaning of the data; we know that the spot positions can also improve this noise, and after the long vent we will have both a different PSL laser and hopefully less ITMX absorption.

Opened corresponding FRS Ticket 8846.

TITLE: 08/24 Owl Shift: 07:00-15:00 UTC (00:00-08:00 PST), all times posted in UTC

STATE of H1: Observing at 50Mpc

OUTGOING OPERATOR: Jeff

CURRENT ENVIRONMENT:

Wind: 1mph Gusts, 0mph 5min avg

Primary useism: 0.01 μm/s

Secondary useism: 0.08 μm/s

QUICK SUMMARY:

Continued triple coincident observing during first half of shift. No issues or problems to report.

TITLE: 08/23 Day Shift: 15:00-23:00 UTC (08:00-16:00 PST), all times posted in UTC

STATE of H1: Observing at 50Mpc

INCOMING OPERATOR: Jeff

SHIFT SUMMARY:

Nice quiet shift with H1 being locked for about 23hrs (current Triple Coincidence is over 9hrs). Winds picked up a little for the 2nd half of the shift and there was an EQ from Mexico that we rode through (as did L1).

LOG: