J. Kissel, D. Sigg

ECR E1700228

WP 12370

The last piece of today's puzzle: now that I understand that PR3's optical lever (oplev) whitening chassis is NOT in the newly labeled SUS-R1 (see LHO:83156) but in SUS-R2 (see LHO:83165, and LHO:63849, where I first realized this), I can comfortably identify the physical cables that Daniel "moved" in the future SUS HAM 1 2 wiring diagram to make room for PM1.

In short:

We need to move the D9F end of cable "H1:OP LEV_PR3_AA" (a.k.a. "H1:SUS-HAM2_88"):

- from SUS-C4 U11 AA chassis spigot "IN25-28," which is CH24-27 via ADC0 in/on the sush2b IO chassis / front-end computer (and shipped over to PR3 via IPC in the top level of h1susim.mdl)

- to SUS-C4 U32 AA chassis spigot "IN29-32," which shall be CH28-31 via ADC1 in/on the sush2a IO chassis / front-end computer (and be added to the top level of h1suspr3.mdl as a direct read of ADC1)

and then make model changes accordingly.
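As a sanity check on the spigot-to-channel bookkeeping above, here is a minimal Python sketch. It assumes, based only on the pairing of "IN25-28" with CH24-27 stated above, that AA chassis spigot inputs are numbered from 1 while ADC channels are numbered from 0, so input N lands on channel N-1 of the same ADC. The helper name `spigot_to_adc_channels` is hypothetical and not part of any LIGO CDS tool.

```python
import re


def spigot_to_adc_channels(spigot: str) -> list[int]:
    """Map an AA chassis spigot label like "IN25-28" to 0-indexed ADC channels.

    Assumption (hypothetical helper): spigot inputs are 1-indexed and feed
    ADC channels 0-indexed, i.e. input N -> channel N-1 on the same ADC.
    """
    match = re.fullmatch(r"IN(\d+)-(\d+)", spigot)
    if match is None:
        raise ValueError(f"unrecognized spigot label: {spigot!r}")
    first, last = int(match.group(1)), int(match.group(2))
    if first < 1 or last < first:
        raise ValueError(f"bad input range in {spigot!r}")
    # Shift both ends down by one; range() excludes its stop value,
    # so stopping at `last` yields channels first-1 .. last-1 inclusive.
    return list(range(first - 1, last))


# The PR3 oplev cable move described above, before and after.
moves = {
    "before (sush2b ADC0, U11)": "IN25-28",
    "after (sush2a ADC1, U32)": "IN29-32",
}
for where, spigot in moves.items():
    chans = spigot_to_adc_channels(spigot)
    print(f"{where}: {spigot} -> CH{chans[0]}-{chans[-1]}")
# before (sush2b ADC0, U11): IN25-28 -> CH24-27
# after (sush2a ADC1, U32): IN29-32 -> CH28-31
```

The same helper gives CH16-19 for the "IN17-20" spigot mentioned for SR3 further down, under the same 1-indexed/0-indexed assumption.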

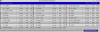

Pictured are

- Front view of SUS-R2 U12 ("U31" in iLIGO rack terms, as it's labeled) oplev whitening chassis, showing that this is indeed the PR3 optical lever, as stated in LHO:63849.

- Back view of SUS-R2 U12 oplev whitening chassis showing the field end of the SUS-R2 to SUS-C4 cable, labeled "H1:OP LEV_PR3_AA" or "H1:SUS-HAM2_88"

- SUS-C4 U11 AA chassis where the remote end of the cable is connected to "IN25-28"

- SUS-C4 U32 AA chassis, where we want to use "IN29-32."

Why was this so hard? The history of drawing optical levers and SUS in HAM1 in wiring diagrams is sordid.

(1) The PR3 optical lever was originally drawn in the SUS HAM 2 wiring diagram in the aLIGO era; see page 26 of D0902810-v7. However, field racks weren't defined in these aLIGO-era drawings, so each site put its optical lever whitening chassis wherever it liked and connected them up in whatever way made sense locally. Complicating the allocation were the never-used, now-decommissioned HAM-ISI optical levers: as drawn in D0902810-v7, the HAM2 ISI optical lever was to pair with this PR3 optical lever.

(2) In the effort to, among other things, define field racks for the first time (circa early 2023; see 5097), Luis and I cleaned up the PR3 optical lever chain, arguably incorrectly, and included PR3 and SR3 in the SUS HAM 3 4 wiring diagram D1000599-v8; see pages 5 and 7 (PR3 ADC) for PR3, and page 6 for SR3.

(3) The PR3 optical lever stayed "missing" when, as a result of the plan to integrate SUS in O5 (G2301306), we created a new BSC 2 HAM 3 4 wiring diagram, D2300383, and left the PR3 optical lever chain out of it. (See the discussion below about the SR3 optical lever chain.)

In parallel,

(4) the HAM 2 wiring diagram, D0902810, became a very quick-and-dirty D0902810-v8 circa 2017 in order to find space for the then-upcoming O3 SQZ system in HAM6; see, e.g., LHO:38883, or other alogs where the "h1sushtts" model changes are mentioned.

(5) Then, circa 2023, with these as the last field racks left to draw, Luis and I converted the drawing to the "modern" wiring diagram format as D0902810-v9. Since it included the RMs but had no changes for O5, we renamed it the HAM 1 2 wiring diagram. Again, because we'd put the PR3 optical lever in D1000599-v8, none of the PR3 optical lever chain was shown in D0902810-v9.

So, when Daniel came along perusing for spare channels to make room for the incoming PM1 (and, further out, JM1-3), he implicitly recommended moving PR3's AA / ADC input from sush2b to sush2a. So now it appears again in D0902810-v10, on page 10, going into the U32 AA chassis "IN29-32" spigot!

This is "necessary" and "natural," because after this change,

- everything on sush2a is related to the triples in HAM2

- the PR3 optical lever can be read out directly from ADC1 of sush2a, rather than having to be received via IPC from another IO chassis (sush2b)

- sush2b drives only single suspensions, even though they're split between HAM2 (the IMs) and HAM1 (RMs, PM1, and the future JMs).

- it leaves the door open so that eventually, if we mass-produce LIGO 32-channel DACs and can claw back lots of 8-channel card slots, sush2a and sush2b could naturally evolve into simply sush1 and sush2, much like sush56 is to evolve into sush5 and sush6.

So -- a pretty dang simple change, but it was just tough to follow with the wiring diagrams alone.

FUTURE DRAWING ACTIONS:

(I) Need to update the HAM 1 2 wiring diagram D0902810-v10 to include a page for the "sensor and field" wiring for PR3 that's currently on page 5 of D1000599-v8, so that the ADC input (on current page 10) doesn't appear out of "thin air" in D0902810-v10.

(II) (For now, we have to leave SR3 on sush34, since there are no spare ADC spigots in the sush56 ADCs' AA chassis; see page 6 of D1002740-v10.)

(III) When we split the sush56 computer into two, sush5 and sush6, for O5, we should include in the sush5 drawing (currently D2300380-v3) a page for the "sensor and field" wiring for SR3 that's currently in D1000599-v8 page 5. In doing so, we can move the AA chassis end of SUS_OPLEV_SR3_AA

:: from SUS-C2 sush34 HAM 34 IO chassis's AA U33 of SUS-C1 "IN17-20" spigot

:: to the SUS-C8 sush5 HAM 5 IO chassis's AA U33 of SUS-C7 "IN17-20" spigot that's currently spare.

Then there'll be some semblance of symmetry and a "final home" for the SR3 optical lever.

(IV) Need to update D1000599-v8 to remove all evidence of PR3 and SR3. PR3 will "already" be represented in the HAM 1 2 wiring diagram D0902810, SR3 will be represented in the HAM 5 wiring diagram D2300380, and the HAM 3 4 wiring diagram D1000599 will be deprecated anyway in favor of the BSC2 HAM 3 4 wiring diagram, D2300383, which "correctly" includes only the Beam Splitter optical lever.

BUT FOR NEXT WEEK: All we need to do is the single cable move summarized above, plus the corresponding changes to the h1sushtts and h1suspr3 front-end models.

We have been unable to make it past FIND_IR since the fully automatic initial alignment at ~7 PM. The wind is picking up and is now over 30 mph again. The last 6 locklosses have been from FIND_IR, caused by ALSY slowly becoming unstable and losing lock. I will go to IDLE and attempt locking if the wind improves, which the forecast says is unlikely before the end of the shift.

The ALSY instability was caused by DOF2 P and Y slowly drifting in opposite directions, causing ALS to lose lock at FIND_IR. I turned them off at this point and was able to get past this state after 7 locklosses.

In other news, Elenna and I do not think this issue is environmental, because we were able to hold PRMI lock for over 10 minutes but could not improve its counts past 60 by moving any of the relevant suspensions. DRMI is thus still failing to lock (and this is fresh after another initial alignment). The current hypothesis is that something is wrong with ASC.

The alignment on the AS AIR camera looks very poor in PRMI, and the buildups are lower than I expect (around 60 on the fast channel). We tried moving the beamsplitter, PRM, and PR2, and nothing seems to improve the buildup or the shape of the beam on the camera. I even tried stepping PR3 in all directions (I reverted my steps!) and nothing got better. DRMI locking isn't working: we see plenty of flashes but no triggers. My guess is that the alignment is still so poor that we are not meeting the threshold. However, we've done multiple initial alignments and even adjusted the alignment by hand with no luck. I am stumped about what the problem could be.