TITLE: 03/01 Day Shift: 1530-0030 UTC (0730-1630 PST), all times posted in UTC

STATE of H1: Aligning

INCOMING OPERATOR: Tony

SHIFT SUMMARY:

It's been a rough day on the locking front.

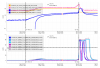

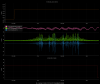

Microseism is high (during the 2 hrs H1 was OBSERVING, you could clearly see a lot of 0.12-0.15 Hz oscillations in many LSC, ASC, & SUS signals on ndscope).

DRMI has not looked great (even after fresh alignments). Not seeing many triggers for it and the flashes are as high as they have been. Hoping this is also microseism-related.

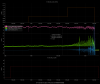

Also had a return of the SRC noise during OFFLOAD_DRMI_ASC earlier in the day, but then it went away.

LOG:

- ISC LOCK stalled; ran INIT on H1 Manager to clear it up

- 1547-1607 Initial Alignment

- DRMI & PRMI looked bad; tried CHECK MICH FRINGES (if this looked bad, would run another alignment).

- CHECK MICH FRINGES did the trick, with PRMI locking in seconds

- 1652 (& lockloss) & 1732: SRC noise returned during OFFLOAD_DRMI_ASC

- 1815 OBSERVING

- 2000 Lockloss

- 2003-2027 Initial Alignment

- No SRC noise seen at OFFLOAD_DRMI_ASC

- 2053 LOCKLOSS at ENGAGE ASC FOR FULL IFO :(

- 2155-2337 PCal sensor measurements in PCal lab (Tony)

- 2227 OBSERVING

- 2248 Lockloss

- 2250-2312 Alignment

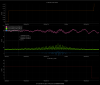

- DRMI looked bad (no triggers) even after recent alignment

- PRMI looked bad (although it had lots of triggers), but a pitch misalignment was visible. Eventually found that the BS was misaligned, so tweaked BS pitch a few clicks in the positive direction, which improved flashing and PRMI locking.

- Kept having trouble with a misaligned DRMI.

- 0009 2nd Alignment started