Took transfer functions for ITMY M0 and R0 now that we are at a good enough vacuum. The ones I had taken in air before the doors were put on are here: 83876.

M0

Data (/ligo/svncommon/SusSVN/sus/trunk/QUAD/H1/ITMY/SAGM0/Data/)

2025-04-21_1700_H1SUSITMY_M0_Mono_WhiteNoise_{L,T,V,R,P,Y}_0p01to50Hz.xml

Results (/ligo/svncommon/SusSVN/sus/trunk/QUAD/H1/ITMY/SAGM0/Results/)

2025-04-21_1700_H1SUSITMY_M0_ALL_TFs.pdf

2025-04-21_1700_H1SUSITMY_M0_DTTTF.mat

Committed to svn as r12261 for both Data and Results

R0

Data (/ligo/svncommon/SusSVN/sus/trunk/QUAD/H1/ITMY/SAGR0/Data/)

2025-04-21_1800_H1SUSITMY_R0_WhiteNoise_{L,T,V,R,P,Y}_0p01to50Hz.xml

Committed to svn as r12259

Results (/ligo/svncommon/SusSVN/sus/trunk/QUAD/H1/ITMY/SAGR0/Results/)

2025-04-21_1800_H1SUSITMY_R0_ALL_TFs.pdf

2025-04-21_1800_H1SUSITMY_R0_DTTTF.mat

Committed to svn as r12260

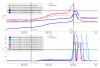

I wanted to compare these measurements with older ones. My first comparison was against the most recent in-vacuum ITMY measurements, the 2018-05-22_2119 (M0) and 2018-06-08_1608 (R0) sets. However, several of the cross-coupling traces differ between those sets and the ones I just took, so I also compared my measurements to the most recent full set, which was taken in air: 2021-08-10_2115 (M0) and 2021-08-11_2242 (R0). Those line up well with the current measurements, so ITMY is looking good!

Comparison of May/June 2018 In-Vac vs Aug 2021 In-Air vs April 2025 In-Vac (/ligo/svncommon/SusSVN/sus/trunk/QUAD/Common/Data/)

allquads_InVacComparison_MayJun2018vAug2021vApr2025_ALLM0_TFs.pdf

allquads_InVacComparison_MayJun2018vAug2021vApr2025_ALLM0_ZOOMED_TFs.pdf

allquads_InVacComparison_MayJun2018vAug2021vApr2025_ALLR0_TFs.pdf

allquads_InVacComparison_MayJun2018vAug2021vApr2025_ALLR0_ZOOMED_TFs.pdf

allquads_InVacComparison_MayJun2018vAug2021vApr2025_ALL_TFs.pdf

allquads_InVacComparison_MayJun2018vAug2021vApr2025_ALL_ZOOMED_TFs.pdf

Committed to svn as r12263

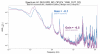

Adding a comment about the L2P coupling on page 20 of the plots in the original post above. It appears that we have a non-minimum-phase zero that appears and disappears between measurements.
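As a reminder of why a non-minimum-phase zero is easy to spot in these plots: a zero in the right half-plane has the same magnitude response as its left-half-plane mirror, but it contributes phase lag instead of phase lead. The Python sketch below shows this for a single real zero; the 1 Hz zero frequency and the function name `zero_response` are hypothetical choices for illustration, not values taken from the ITMY L2P fit.

```python
import cmath
import math

def zero_response(f_hz, f_zero_hz, rhp):
    """Frequency response of a single real zero at f_zero_hz (hypothetical example).

    rhp=False -> minimum-phase zero (left half-plane):      H(s) = 1 + s/w0
    rhp=True  -> non-minimum-phase zero (right half-plane): H(s) = 1 - s/w0
    """
    s = 1j * 2 * math.pi * f_hz      # evaluate on the j-omega axis
    w0 = 2 * math.pi * f_zero_hz     # zero location in rad/s
    return 1 - s / w0 if rhp else 1 + s / w0

# Same magnitude at every frequency, opposite sign of phase.
for f in (0.1, 1.0, 10.0):
    lhp = zero_response(f, 1.0, rhp=False)
    rhp = zero_response(f, 1.0, rhp=True)
    print(f"{f:5.1f} Hz  |H| = {abs(lhp):.3f} vs {abs(rhp):.3f}   "
          f"phase = {math.degrees(cmath.phase(lhp)):+.1f} vs "
          f"{math.degrees(cmath.phase(rhp)):+.1f} deg")
```

So in a Bode plot the magnitude trace alone cannot distinguish the two cases; the sign of the phase excursion around the zero frequency is what gives the non-minimum-phase zero away.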

While I don't have a full explanation for why this zero comes and goes, I remember seeing these shenanigans when I was testing the ISI feedforward many years ago. I was too young to make any coherent argument about it then, but the state of the ISI seemed to be correlated with the behavior: with the ISI ISOLATED we get the normal behavior, and with the ISI DAMPED we get the non-minimum-phase behavior.

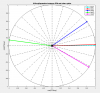

Here is a comparison of the successful ITMY M0-to-M0 transfer functions from the last few years, with the ISI states retrieved from plotallquad_dtttfs.m. The color coding separates measurements taken with the ISI in 'ISO' from those taken with the ISI in any other state. In pseudocode:

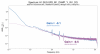
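For readers who cannot see the pseudocode image, here is a minimal Python sketch of the color-coding rule as described in the text (ISI in 'ISO' vs any other state). It is not taken from plotallquad_dtttfs.m, which is MATLAB; the function name `trace_color`, the substring test on "ISO", the matplotlib color names, and the measurement labels are all hypothetical.

```python
# Hypothetical colors (matplotlib named colors, used here only as strings).
ISO_COLOR = "tab:blue"
OTHER_COLOR = "tab:red"

def trace_color(isi_state: str) -> str:
    """Return a plot color based on the ISI state recorded for a measurement.

    Hypothetical rule: any state whose name contains "ISO" (e.g. "ISOLATED",
    "Fully Isolated") gets one color; every other state (e.g. "DAMPED")
    gets the other color.
    """
    return ISO_COLOR if "ISO" in isi_state.upper() else OTHER_COLOR

# Hypothetical measurement labels and ISI states, for illustration only.
measurements = [("meas_A", "ISOLATED"), ("meas_B", "DAMPED")]
for label, state in measurements:
    print(label, state, trace_color(state))
```

A substring test is used so that variants of the isolated state name fall into the same group; a stricter version would compare against an explicit set of state names.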

I did the same comparison for ITMX, and the ISI backreaction theory really does not seem to hold water.

There are two main regimes, as with ITMY. This time, though, the more recent ITMX TFs (after 2017-10-31) look more like the older (pre-2021) ITMY TFs.

I am at a loss as to what is causing the change. Brian suggested it might be related to the vertical position of the suspension; that may be the next thing to test.

To back up Edgard's conclusion, I took measurements with the ISI in Fully Isolated, and we did not get the extra zero back: 84083.