Summary: After baffle damping, 10-2000 Hz vibration injections in the input arm needed roughly 5 times greater amplitude than before damping to produce scattering shelves that reached into the 100 Hz band of DARM. It would be interesting for DetChar to check whether transients in the 60-200 Hz band have been reduced, and whether the efficacy of jitter subtraction has improved.

During the May vent we damped the MCA1 baffle in hopes of reducing the dominant vibration coupling to DARM in the 10-30 Hz region. This coupling was causing transients in DARM and almost daily reductions in range and, because of the constant background (dominated by the HVAC), might limit any jitter coupling improvements from cleaning ITMX (https://alog.ligo-wa.caltech.edu/aLOG/index.php?callRep=36147 ).

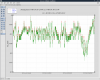

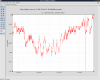

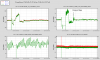

The figure shows the results: spectrograms for a reference accelerometer located on the input beam tube and for DARM during impulsive excitations made before and after damping. To produce a scattering shelf that reached the same maximum frequency in DARM, the impulse amplitude in the 10-20 Hz band, as measured at the reference accelerometer, needed to be several times higher after the damping than before. For similar injection amplitudes, the shelf reached frequencies that were a few times lower after damping than before. In addition, the plot shows that the decay time of the shelf was several times shorter, as expected from damping. I repeated this for 2 different injection locations and 2 different reference sensors, with similar results.
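As a rough illustration of why the shelf cutoff tracks the motion amplitude, here is a minimal sketch using the standard fringe-wrapping relation for scattered light, f_max = 2 v_max / λ. It is not the analysis used for the figure. It assumes a single-bounce scattering path, sinusoidal scatterer motion, and a 1064 nm laser wavelength; the function names are hypothetical.

```python
import math

# Assumption: 1064 nm main laser wavelength.
LASER_WAVELENGTH_M = 1064e-9


def shelf_cutoff_hz(amplitude_m, motion_freq_hz,
                    wavelength_m=LASER_WAVELENGTH_M, bounces=1):
    """Peak fringe frequency for a scatterer moving as x(t) = A sin(2 pi f t).

    The scattered-light path changes by 2 * bounces * x(t), so the peak
    fringe rate is 2 * bounces * v_max / wavelength, with v_max = 2 pi f A.
    """
    peak_velocity = 2.0 * math.pi * motion_freq_hz * amplitude_m
    return 2.0 * bounces * peak_velocity / wavelength_m


def amplitude_for_cutoff_m(cutoff_hz, motion_freq_hz,
                           wavelength_m=LASER_WAVELENGTH_M, bounces=1):
    """Inverse of shelf_cutoff_hz: motion amplitude needed to reach cutoff_hz."""
    return cutoff_hz * wavelength_m / (
        4.0 * math.pi * motion_freq_hz * bounces)


if __name__ == "__main__":
    # Scatterer amplitude at 12 Hz needed for the shelf to reach 100 Hz.
    a = amplitude_for_cutoff_m(100.0, 12.0)
    print(f"amplitude for a 100 Hz cutoff at 12 Hz: {a:.3e} m")
    # The cutoff is linear in amplitude: 5x less baffle motion, 5x lower shelf.
    print(shelf_cutoff_hz(a, 12.0) / shelf_cutoff_hz(a / 5.0, 12.0))
```

Under these assumptions the cutoff is linear in the scatterer's amplitude, so a baffle that moves several times less for the same beam tube motion yields a shelf that reaches several times lower in frequency.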

With the laser vibrometer, I found that the ~12.1 Hz baffle peak had broadened and moved up to ~12.4 Hz, overlapping with the resonance of the eye baffle. The improvement would have been greater if the resonances had not overlapped. This is because the resonances are coupled through beam tube motion, so for vibrations that affect both baffles, the velocity at the new Swiss cheese baffle resonant frequency is now increased by the eye baffle resonance (by about a factor of 2, I estimate). Nevertheless, the factor of roughly five improvement exceeds the minimum factor of two that I had hoped for.
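The broadened peak and the shorter decay time are two views of the same added damping. For a lightly damped resonance, Q = f0 / Δf (with Δf the full width at half maximum of the power spectrum) and the 1/e amplitude decay time is τ = Q / (π f0) = 1 / (π Δf). The sketch below shows the relation; the peak widths in it are hypothetical placeholders, not measured values, and the function names are my own.

```python
import math


def quality_factor(f0_hz, fwhm_hz):
    """Q of a lightly damped resonance from its power-spectrum FWHM."""
    return f0_hz / fwhm_hz


def amplitude_decay_time_s(f0_hz, q):
    """1/e amplitude decay time of a lightly damped resonance."""
    return q / (math.pi * f0_hz)


if __name__ == "__main__":
    # Hypothetical widths chosen only to illustrate the scaling.
    before = {"f0_hz": 12.1, "fwhm_hz": 0.05}
    after = {"f0_hz": 12.4, "fwhm_hz": 0.25}

    for label, peak in (("before", before), ("after", after)):
        q = quality_factor(peak["f0_hz"], peak["fwhm_hz"])
        tau = amplitude_decay_time_s(peak["f0_hz"], q)
        print(f"{label}: Q = {q:.0f}, decay time = {tau:.2f} s")
```

Because τ depends only on Δf, a peak that is several times wider rings down several times faster, regardless of the small shift in center frequency.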

The injections were local to the input arm beam tube. We have previously found that the input beam tube is the dominant coupling site for 10-20 Hz vibrations affecting the entire corner station (https://alog.ligo-wa.caltech.edu/aLOG/index.php?callRep=35166 ), and this should still be the case. Nevertheless, I wanted to demonstrate a coupling reduction for global corner station shaking, using the fire pump as a corner station shaker. However, John, Bubba and I found that the fire pump now produces much smaller vibration levels in the 10-20 Hz band; we tried a couple of things but couldn’t reproduce the vibration levels from the spring. With the improvement in range on Friday, though, we shouldn’t have to wait too long to see whether there were improvements for global excitations from off-site. I would also like to do a new round of HVAC shutdowns. In addition, it might be interesting to see whether transients in the 60-200 Hz region of DARM have decreased because scattering shelves (such as the shelf characterized by 12 Hz harmonics) don’t reach up as high, and whether the efficacy of Jenne’s jitter subtraction has increased.