CP4 log file DOES NOT exist!

After getting the official OK from the LHO Run Coordinator (Keita Kawabe), and with help from others to address a few tasks Keita wanted done, as of June 8th 18:40:06 UTC, H1 is now back to OBSERVING (H1 had been out of OBSERVING for a month & has now rejoined L1 in O2!).

LHO Operator Team: Ops Observatory Mode Returns

This had been in PLANNED ENGINEERING for most of the last month. Let's return to the habit of marking H1's Observatory Mode.

H1:SUS-ITMY_R0_OPTICALIGN_P_OFFSET (from -150 to -400), this is for HWS and ghost beam (alog 36264).

H1:LSC-PD_DOF_MTRX_SETTING_4_1 and 4_7 (from [0.0091, -849] to [0.035, 0]). These are for PRC input matrix (alog 36473).

H1:ALS-C_COMM_A_DEMOD_LONOM (from 19 to 12). Don't know why it was 19dBm, 12dBm sounds good to me.

H1:ALS-X_LASER_HEAD_NOISEEATERTOLERANCE (from 0.5 to 0.6), H1:ALS-X_REFL_SERVO_COMOFS (from 0.375 to 0.25). These were all changed during the X end investigation.

H1:ALS-Y_REFL_A_DEMOD_LONOM (from 20 to -100) and B_DEMOD_LONOM (from -100 to -9). We used to use channel A, but at some point A was broken and we switched to B. Since then the board was swapped and both work, but we still use channel B.

H1:ALS-Y_VCO_REFERENCENOM (from 21 to 20).

H1:ALS-X_QPD_A_YAW_OFFSET, H1:ASC-X_TR_A_PIT_OFFSET, H1:ALS-Y_QPD_A_PIT_OFFSET, H1:ALS-Y_QPD_A_YAW_OFFSET, H1:ASC-Y_TR_B_PIT_OFFSET and H1:ASC-Y_TR_B_YAW_OFFSET, no real change, just the numerical error on the order of 1E-17.

H1:ALS-X_FIBR_LOCK_TEMPERATURECONTROLS_HIGH and LOW were temporarily set to ±1300 during the X end investigation. It doesn't look like we need to keep that, so I reverted them back to ±600.

H1:ALS-Y_REFL_SERVO_IN2GAIN was set to 1, don't know why but inconsequential, reverted to 0.
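The offsets above that differed only at the 1E-17 level are floating-point round-off rather than real changes. A minimal sketch of how such diffs could be filtered with an absolute tolerance follows. This is not the SDF tool itself: the function name, the tolerance, and the QPD offset value are assumptions for illustration; only the VCO reference change (21 to 20) comes from the list above.

```python
import math

def real_sdf_diffs(setpoints, live, abs_tol=1e-12):
    """Return channels whose live value differs from the setpoint by more than abs_tol."""
    return {
        name: (setpoints[name], live[name])
        for name in setpoints
        if not math.isclose(setpoints[name], live[name], rel_tol=0.0, abs_tol=abs_tol)
    }

setpoints = {
    "H1:ALS-Y_VCO_REFERENCENOM": 21.0,   # change reported in the log
    "H1:ALS-Y_QPD_A_PIT_OFFSET": 0.3,    # hypothetical value
}
live = {
    "H1:ALS-Y_VCO_REFERENCENOM": 20.0,
    "H1:ALS-Y_QPD_A_PIT_OFFSET": 0.1 + 0.2,  # differs from 0.3 only by round-off
}

diffs = real_sdf_diffs(setpoints, live)
for name, (old, new) in diffs.items():
    print(f"{name}: {old} -> {new}")
```

With these inputs only the VCO reference is reported; the QPD offset is treated as unchanged.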

Measured the tuning coefficient of the laser crystal temperature for Prometheus S/N 2011B (the one that was removed from EndX a week or so ago).

The tuning coefficient is ~2.72 GHz/V for the green light and is fairly constant for laser crystal temperatures from 24 degC to 34 degC.

Increasing the laser crystal temperature decreases the laser frequency. Increasing the diode current also decreases the laser frequency.
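As a rough illustration of what the measured coefficient means, the sketch below converts a change in the temperature-control voltage into a frequency shift. It assumes the green light is the second harmonic of the infrared output, so the infrared shift is half the green shift. The function names are made up here, and only the magnitude is computed since the log does not give the sign convention of the control voltage.

```python
GREEN_TUNING_GHZ_PER_V = 2.72  # measured value quoted above, for the green light

def green_shift_ghz(delta_v):
    """Magnitude of the green frequency shift for a control-voltage change delta_v (in volts)."""
    return GREEN_TUNING_GHZ_PER_V * abs(delta_v)

def ir_shift_ghz(delta_v):
    """Magnitude of the infrared shift, assuming green = 2 x infrared frequency."""
    return green_shift_ghz(delta_v) / 2.0

print(green_shift_ghz(0.5))  # shift in GHz for a 0.5 V change
print(ir_shift_ghz(1.0))     # corresponding infrared shift for a 1 V change
```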

On the Ops white board, there is a note that the VEAs were last swept on May 2nd.

Jeff mentioned needing to unplug unneeded items at the End Stations, and I unplugged unused extension cords & phones. For the H1 PSL, I went through the checklist to confirm the room is in "Science Mode". I assume we do not do this for H2 PSL because it is running at 100%.

The primary and redundant DMT processes at LHO were restarted at 1180972740. It appears there was a raw data dropout starting at 2017-06-08 8:36 UTC that lasted almost 7 hours. After this, the calibration pipeline was running but producing no output. A simple restart seems to have gotten data flowing again.
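For reference, the GPS restart time above can be converted to UTC with a few lines of Python. This is a sketch that hard-codes the GPS-UTC offset of 18 s, which applies to dates from 2017-01-01 onward; a general converter would need a leap-second table. The function name is an assumption.

```python
from datetime import datetime, timedelta, timezone

GPS_EPOCH = datetime(1980, 1, 6, tzinfo=timezone.utc)
GPS_MINUS_UTC_S = 18  # leap-second offset, assumed constant (valid from 2017-01-01)

def gps_to_utc(gps_seconds):
    """Convert GPS seconds to a UTC datetime using a fixed leap-second offset."""
    return GPS_EPOCH + timedelta(seconds=gps_seconds - GPS_MINUS_UTC_S)

restart = gps_to_utc(1180972740)
print(restart.isoformat())
```

This gives 2017-06-08 15:58:42 UTC, which fits a dropout that started at 8:36 UTC and lasted almost 7 hours.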

This restart also picked up the new version of the calibration code, gstlal-calibration-1.1.7. This was automatically (unintentionally) installed during Tuesday maintenance.

After noticing that our range plot on the wall was down this morning, it appears it is now back (posting time is now current & we can see that there is roughly 6-7hrs of no H1 data on this plot). H1 range is currently being listed as ~64.7Mpc.

TITLE: 06/08 Day Shift: 15:00-23:00 UTC (08:00-16:00 PDT), all times posted in UTC

STATE of H1: Planned Engineering

OUTGOING OPERATOR: Patrick

CURRENT ENVIRONMENT:

Wind: 14mph Gusts, 9mph 5min avg

Primary useism: 0.02 μm/s

Secondary useism: 0.13 μm/s

QUICK SUMMARY:

We currently do not have the GWI.stat or our DMT view showing the H1/L1 BNS ranges (Cheryl has emailed local CDS staff); we can see that we've been at NOMINAL LOW NOISE for just under 6hrs. Don't have a range to report, but the DARM spectrum on the front wall looks close to the reference.

At some point this morning we would like to do sweeps of the LVEA & End Stations.

I was not the outgoing operator.

Kyle, Gerardo, Chandra, John

CP4 liquid level control appears to be controlling normally now. The large dip in the red trace is likely due to warm gas in the liquid transfer line being pushed into the pump as the transfer line cools to liquid temperature. This means that the 20% setting was not enough to keep the transfer line cold.

Blue is the control valve position.

The current setpoint is 96%.

It would be nice if DVWEB could provide up-to-the-minute data.

Here is the MY chamber pressure for the last 28 hours as well as the liquid level for the same period. Pressure spike at 5 hours was due to the loss of the ion pump.

The large drops in liquid level are not real - they are due to human actions. The smaller drops to the 80 and 90% range are real and normal as the PID control takes over from manual settings.

Amazing!

This is the result of applying GN2 pressure (40-100 psi) to the clogged sensing line for many days (vs hours that we had tried in the past). We think we melted the blockage with the relatively warm gas. Went through two bottles of GN2 with a few small leaks in fittings.

Happy to close out FRS 6875!

I lowered the %full set point to 92% and LLCV lower limit to 30% open since we think 20% warms the transfer line.

TITLE: 06/08 Eve Shift: 23:00-07:00 UTC (16:00-00:00 PDT), all times posted in UTC

STATE of H1: Planned Engineering

INCOMING OPERATOR: Cheryl

SHIFT SUMMARY: Getting back into the swing

LOG:

Jeff & Evan did calibration measurements at the start of the shift. Recovery after lockloss was pretty simple after doing initial alignment, except that Jenne checked the soft error points before the soft loops were engaged. The biggest was about 0.1 and we survived engaging the loops, but it sounds like it may be necessary to stop and evaluate when re-locking, for now. PI were also more aggravating than before the pump down. It seems like more modes ring up than before, and mode 28 in particular seems sensitive to phase. The usual sign flip didn't work, but a 10 degree bump and patience were enough.

There are SDF diffs that haven't been addressed, as some need more evaluation. The LVEA lights are still on, and Corey says the last logged VEA sweep was May 2nd, so we're not going to Observe.

Around 2 UTC, I ran A2L.

It's been otherwise quiet.

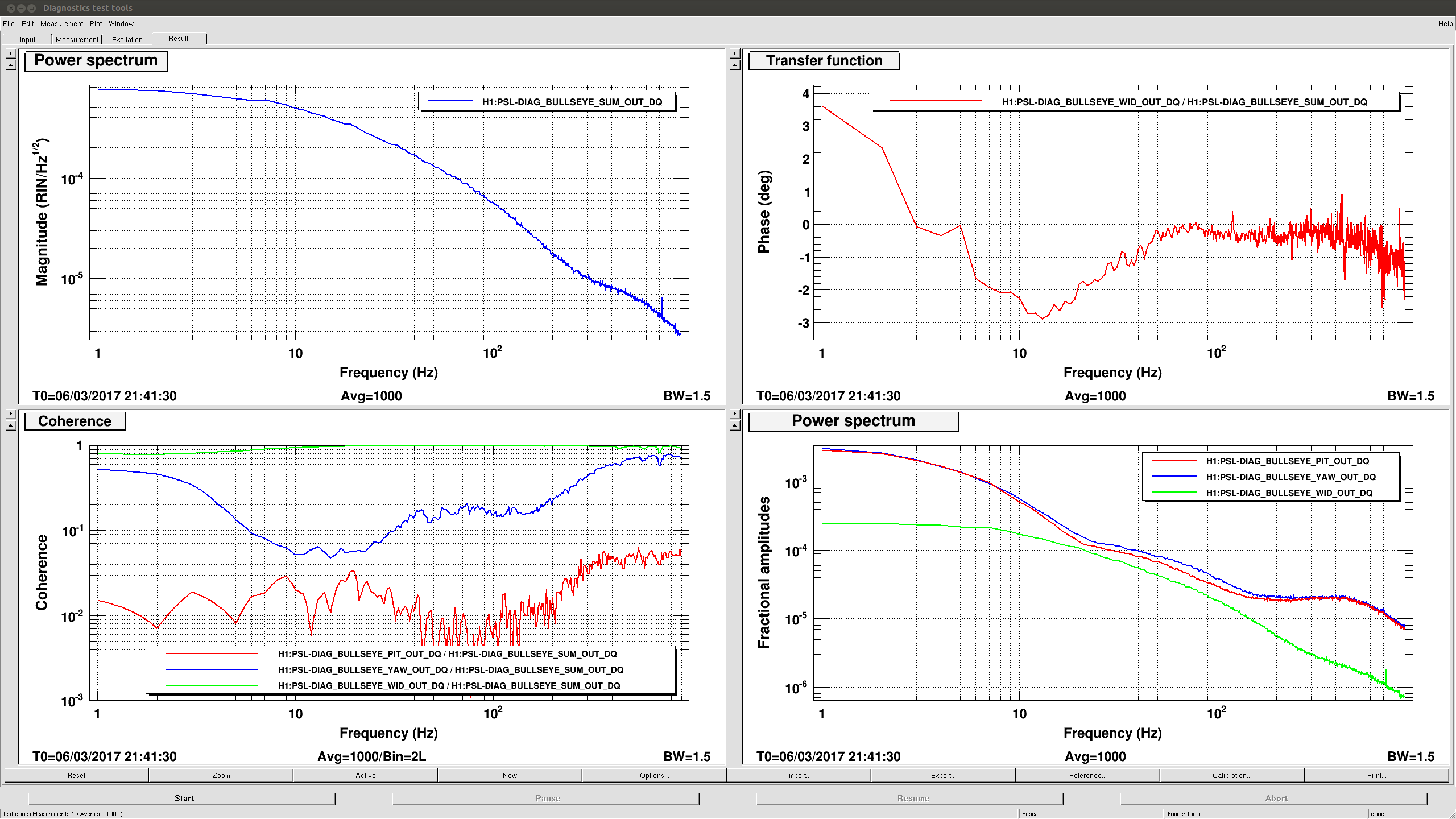

I took over the measurement time from Jenne and took the coherence measurements between DARM and Bullseye signals / IMC WFS RF/DC signals just to confirm the situation of the jitter coupling.

Attachment 1 shows the coherence measurement between DARM and the bullseye signals. They show broadband (30~900 Hz) coherence as high as 0.5.

Attachment 2 shows the coherence between DARM and the IMC WFS RF signals. They show high coherence, but the features look like mechanical peaks. The IMC WFS DC signals (Attachment 3) also show qualitatively similar features.

[Jenne,Sheila,Corey,Vaishali]

Timelapse of the locking sequence :

1. Problem : IFO losing lock at Reduce modulation depth.

Solution : We started tackling the problem by first undoing the offsets on the POP_WFS_A to try to help our recycling gain, which had tanked from its normal value of about 30 to 26. We could only bring this up to 28 by undoing the offsets that Sheila added yesterday (see alog 36686).

2. Problem : Jenne then noticed that the spots had moved a rather large amount (~2 cm on the ETMs in Yaw and ~1 cm in Pitch). See attached figure (SpotPos_O1O2) which shows the average spot position on the ITMs and ETMs for O1 in pink and O2 in green.

Solution : We changed the L2A decoupling coefficients and set them to what they were before the vent, using the dither line frequencies that were in the a2L script. The logic behind doing this was to try to improve the beam pointing into the IFO and bring up the PRC gain (which is now sitting at ~30).

Logic/Methodology : In this 'reverse a2L' we moved PR3 in Pitch and Yaw (separately, keeping an eye on the recycling gain and using that as a figure of merit) to affect the spot position on the ITMs, and added an offset to CSOFT and DSOFT in combination to move the spot on the ETMs. It is basically us trying to move the beam spot to the actuation node (whereas a2L moves the actuation node to the beam spot). We compared the dither lines showing up in a current calibrated DARM spectrum to an old calibrated DARM reference spectrum and slowly changed the L2A decoupling coefficients in both pitch and yaw. All of this was done in the guardian state PR3 spot move.

3. We reset the POP_WFS_A PIT and YAW again.

4. Problem : SRCL Feedforward filters different than before

Attempted solution : reset all the filters on the SRC Feedforward to what they were at the beginning of O2.

Consequence : This, however, didn't work too well, and we went back to the filters that Sheila had chosen in alog 36686.

5. Jenne has also reset the green initial alignment set points, so hopefully the next initial alignment will come back to the place we left it thus making relocking easier.

Observations/Notes : Locked in NLN at 62 Mpc. Jitter noise is back and the super high frequency noise has gone away, i.e. the DARM spectrum looks almost like it did before the vent.

Warning : If I have forgotten something or said something wrong, Jenne will correct me in comments :)

The following pressures were recorded for the following Dewars:

8514371 = 50 microns
8514372 = 25 microns
8514374 = 225 microns <-- also known as CP3
8514375 = 19 microns
8514376 = 25 microns
8514377 = 30 microns
8514379 = 26 microns
8514380 = 27 microns

1 micron of Hg = 0.001 Torr

225 microns = 0.225 Torr (about 0.2 Torr)
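The conversion above can be applied to the whole set of readings at once. The sketch below uses the Dewar readings from this entry; the variable names are illustrative only.

```python
TORR_PER_MICRON = 0.001  # 1 micron of Hg = 0.001 Torr

# Readings from this entry, in microns of Hg, keyed by Dewar number.
dewar_pressures_microns = {
    "8514371": 50,
    "8514372": 25,
    "8514374": 225,  # also known as CP3
    "8514375": 19,
    "8514376": 25,
    "8514377": 30,
    "8514379": 26,
    "8514380": 27,
}

dewar_pressures_torr = {
    dewar: microns * TORR_PER_MICRON
    for dewar, microns in dewar_pressures_microns.items()
}

# Dewar with the highest reading.
highest = max(dewar_pressures_microns, key=dewar_pressures_microns.get)
print(highest, dewar_pressures_torr[highest], "Torr")
```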

Vaishali, Kiwamu,

We have implemented the calibration coefficients for the bullseye sensor in the front end today. The PIT, YAW and WID signals are now calibrated in fractional amplitudes. They are defined as

(fractional amplitude) = | HOM amplitude (e.g. E_{10} )| / | E_{00} |

Using this calibration, we virtually propagated pointing jitter (a.k.a PIT and YAW) from the bullseye to the IMC WFSs. We were able to get somewhat quantitative agreement with the measured jitter spectra there for the PIT and YAW degrees of freedom.

Next things to do:

[New calibration settings]

Since Vaishali is writing up a document describing the calibration method, we skip the explanation here and just show the results.

Once they were set, we then accepted the SDF, although they are NOT monitored.

[Some noise spectra]

Below, the calibrated spectra are shown in the lower right panel.

The jitter level was about 3x10^-5 Hz^-1/2 for PIT, YAW and WID at around 100 Hz. If these fields go through the PMC, their amplitude should be suppressed by a factor of 63 (T0900649-v4). So their level should be something like 5x10^-7 Hz^-1/2 when they arrive at the IMC. By the way, for some reason the intensity fluctuation and beam size jitter are positively correlated: as the laser power becomes larger, the beam size becomes larger at the same time.

Here are plots showing the noise projection for the IMC WFS, which qualitatively agree with what Daniel has measured in the past using the DBB QPDs instead (31631) -- the IMC WFS noise is a superposition of acoustic peaks and HPO jitter.

Finally, if one lets these jitter fields propagate through the IMC, they should get an attenuation of about 3x10^-3 in their amplitudes. Therefore the amplitudes of the HOMs after the IMC should be roughly 1.5x10^-9 Hz^-1/2. This number is consistent with what Sheila has estimated for pointing jitter at IM4 (34112). However, a funny thing is that, in order to explain a high coherence of 0.1 for pitch when the interferometer is locked in low noise (see for example, blue curves in the first attachment in 34502), the coupling must currently be about 1x10^-11 m / fractional amplitude, which is more than 10 times larger than what Sheila measured back in February (34112). What is going on?
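The propagation estimate above is just a chain of two amplitude factors. It can be sketched as follows, using only the numbers quoted in this entry; the variable names are illustrative.

```python
# Jitter measured at the bullseye sensor, fractional amplitude per sqrt(Hz), around 100 Hz.
jitter_at_bullseye = 3e-5

# Amplitude suppression factors quoted in the entry.
pmc_suppression = 63.0   # PMC suppresses the HOM amplitude by this factor
imc_attenuation = 3e-3   # IMC multiplies the HOM amplitude by this factor

after_pmc = jitter_at_bullseye / pmc_suppression   # level arriving at the IMC
after_imc = after_pmc * imc_attenuation            # level after the IMC

print(f"after PMC: {after_pmc:.2e} /rtHz")
print(f"after IMC: {after_imc:.2e} /rtHz")
```

The results round to the ~5x10^-7 and ~1.5x10^-9 Hz^-1/2 figures quoted in the text.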

DCC Document link describing the Calibration : LIGO-T1700126