Wind has calmed down a bit and the range has come up some. We just rolled over to 70 hours locked.

L1 alog #32936 describing an alignment shift of the L1 IM2 in pitch on March 27th, 2017.

TITLE: 04/11 Eve Shift: 23:00-07:00 UTC (16:00-00:00 PST), all times posted in UTC

STATE of H1: Observing at 51Mpc

OUTGOING OPERATOR: Patrick

CURRENT ENVIRONMENT:

Wind: 33mph Gusts, 27mph 5min avg

Primary useism: 0.18 μm/s

Secondary useism: 0.33 μm/s

QUICK SUMMARY: No issues handed off. Lock going on 67 hours.

TITLE: 04/10 Day Shift: 15:00-23:00 UTC (08:00-16:00 PST), all times posted in UTC

STATE of H1: Observing at 59Mpc

INCOMING OPERATOR: Travis

SHIFT SUMMARY: Abnormal noise in 3-10 and 10-30 Hz seismic BLRMS correlated with noise in range for a few hours before noon. Also heard an airplane at 20:08 UTC that may have correlated with noise in DARM.

LOG:

15:25 UTC Restarted video2.

16:00 UTC Karen driving car to warehouse.

16:45 UTC PI mode 23 came up coincident with large glitch in DARM. Spike in 3-10 and 10-30 Hz CS Z axis seismic BLRMS. Chris driving green forklift? Christina at shipping and receiving?

17:14 UTC Marc and Filiberto to mid X.

18:24 UTC Marc and Filiberto back. Heading to vault.

19:53 UTC Marc and Filiberto back.

20:03 UTC Started darmBLRMS striptool and restarted range integrand on video0.

20:08 UTC Airplane heard in control room. Possible correlated noise in DARM.

20:41 UTC Gerardo to mid Y for scroll pump maintenance work. Nothing noisy.

21:43 UTC Chandra to CP3.

21:59 UTC Chandra back.

22:54 UTC Gerardo back.

Starting CP3 fill. LLCV enabled. LLCV set to manual control. LLCV set to 50% open. Fill completed in 1072 seconds. TC B did not register fill. LLCV set back to 19.0% open. Starting CP4 fill. LLCV enabled. LLCV set to manual control. LLCV set to 70% open. Fill completed in 128 seconds. LLCV set back to 37.0% open.

Raised CP3 to 20% open.

Verified that both thermocouples are inserted into CP3 and CP4 exhaust pipes, since TC B of CP3 didn't register fill for the last couple of times.

The range has been degraded for the last few hours coincident with abnormal noise in the 3-10 and 10-30 Hz seismic BLRMS. The noise appears to have subsided for now and the range has recovered. PI mode 23 was ringing up coincident with glitches in DARM during this period.

It appears that the noise subsided for the lunch hour and is now on the way back up.

A. Urban, on behalf of the calibration group

The full suite of summary pages comparing C01 data against the online C00 frames is available here (requires LIGO.ORG credentials). Note that these pages currently cover data between 30 November 2016 and 10 March 2017, inclusive.

Notes:

The scripts I used to generate some of these plots live in the calibration SVN repo and use GWPy (which can be set up on any of the LDAS clusters by sourcing the gwpy.env script in that repo). The rest was done using gwsumm, the same infrastructure used to make Duncan's DetChar summary pages. (To see how a specific page was made, you can always hit "How was this page generated?" at the bottom of the page.)

Front End Watch is GREEN

HPO Watch is GREEN

PMC:

It has been locked 2 days, 17 hr 40 minutes (should be days/weeks)

Reflected power = 18.02Watts

Transmitted power = 60.42Watts

PowerSum = 78.45Watts.

FSS:

It has been locked for 2 days 12 hr and 7 min (should be days/weeks)

TPD[V] = 3.072V (min 0.9V)

ISS:

The diffracted power is around 2.1% (should be 3-5%)

Last saturation event was 2 days 12 hours and 7 minutes ago (should be days/weeks)

Possible Issues:

PMC reflected power is high

TITLE: 04/10 Day Shift: 15:00-23:00 UTC (08:00-16:00 PST), all times posted in UTC

STATE of H1: Observing at 56Mpc

OUTGOING OPERATOR: Nutsinee

CURRENT ENVIRONMENT:

Wind: 15mph Gusts, 12mph 5min avg

Primary useism: 0.03 μm/s

Secondary useism: 0.26 μm/s

QUICK SUMMARY:

No issues to report.

TITLE: 04/10 Owl Shift: 07:00-15:00 UTC (00:00-08:00 PST), all times posted in UTC

STATE of H1: Observing at 62Mpc

INCOMING OPERATOR: Patrick

SHIFT SUMMARY: Been locked 57 hours and counting.

LOG:

14:02 Balers on X arm clearing path by pitchforks.

14:17 Bubba driving to meet the balers, beginning of X-arm.

Been locked for 53 hrs and counting.

TITLE: 04/10 Owl Shift: 07:00-15:00 UTC (00:00-08:00 PST), all times posted in UTC

STATE of H1: Observing at 63Mpc

OUTGOING OPERATOR: Ed

CURRENT ENVIRONMENT:

Wind: 7mph Gusts, 6mph 5min avg

Primary useism: 0.03 μm/s

Secondary useism: 0.25 μm/s

QUICK SUMMARY: Been locked for 49 hr 50 mins and counting.

TITLE: 04/10 Eve Shift: 23:00-07:00 UTC (16:00-00:00 PST), all times posted in UTC

STATE of H1: Observing at 65Mpc

INCOMING OPERATOR: Nutsinee

SHIFT SUMMARY:

Strange looking comb(?) in DARM (see my aLog). Otherwise, quiet shift. We broke our old O2 record by 30 minutes (so far). Handing off to Nutsinee.

LOG:

There seems to be some foolishness going on in the 55-65 Hz frequency range. Attached are some screenshots of the DARM spectrum to illustrate. I don't see anything correlating with this noise in any of the FOMs. I don't see any visible glitching in the BNS Inspiral graph or the h(t) DMT Omega spectra. Environment is sound, save for some rising microseism. PI mode 27 is riding high and "porpoising", but not high enough to set off a verbal alarm. a2l alignment looks good (I just ran the dither not so long ago). I dunno.

Starting CP3 fill. LLCV enabled. LLCV set to manual control. LLCV set to 50% open. Fill completed in 1984 seconds. TC B did not register fill. LLCV set back to 18.0% open. Starting CP4 fill. LLCV enabled. LLCV set to manual control. LLCV set to 70% open. Fill completed in 3264 seconds. LLCV set back to 35.0% open.

Raised CP3 to 19% open and CP4 to 38% open.

This is the first run of an actual overfill using the new virtual strip tool system. This completes the CP3, CP4 portion of FRS7782. This ticket has been extended to cover the vacuum pressure strip tool.

Reduced CP4 valve setting to 37% open - looked to be overfilling.

WP 6562; Nutsinee, Kiwamu,

As a follow-up to Aidan's analysis (35336), we did a simple measurement this morning to determine the HWS coordinates.

- Preliminary result (currently being double checked with Aidan):

[Measurement]

[Verification measurement]

I've independently checked my analysis and disagree with the above aLOG. I get the same orientation that I initially calculated in aLOG 35336.

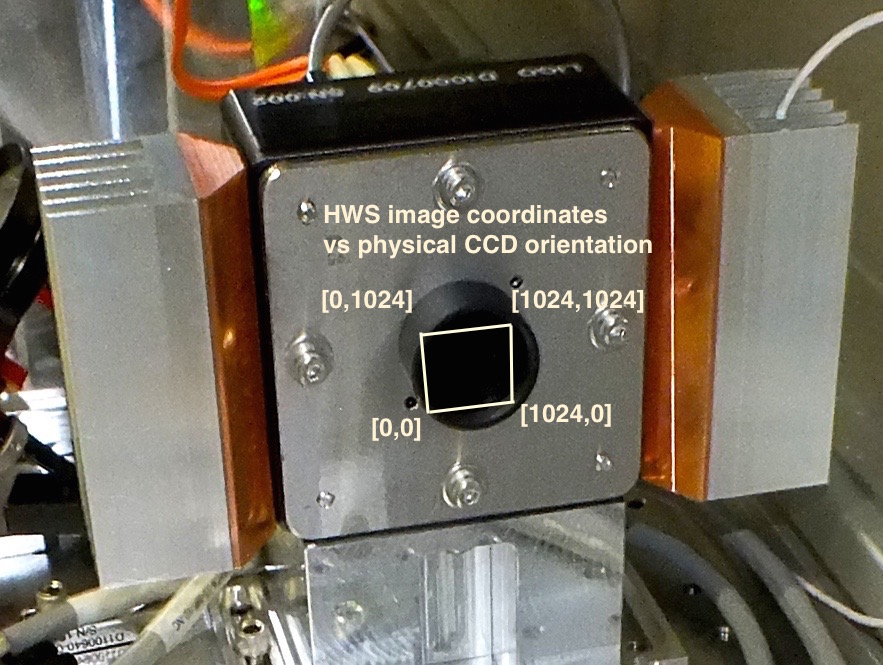

After discussing the matter with Kiwamu, it turned out there was some confusion over the orientation of the CCD. The following analysis should clear this up.

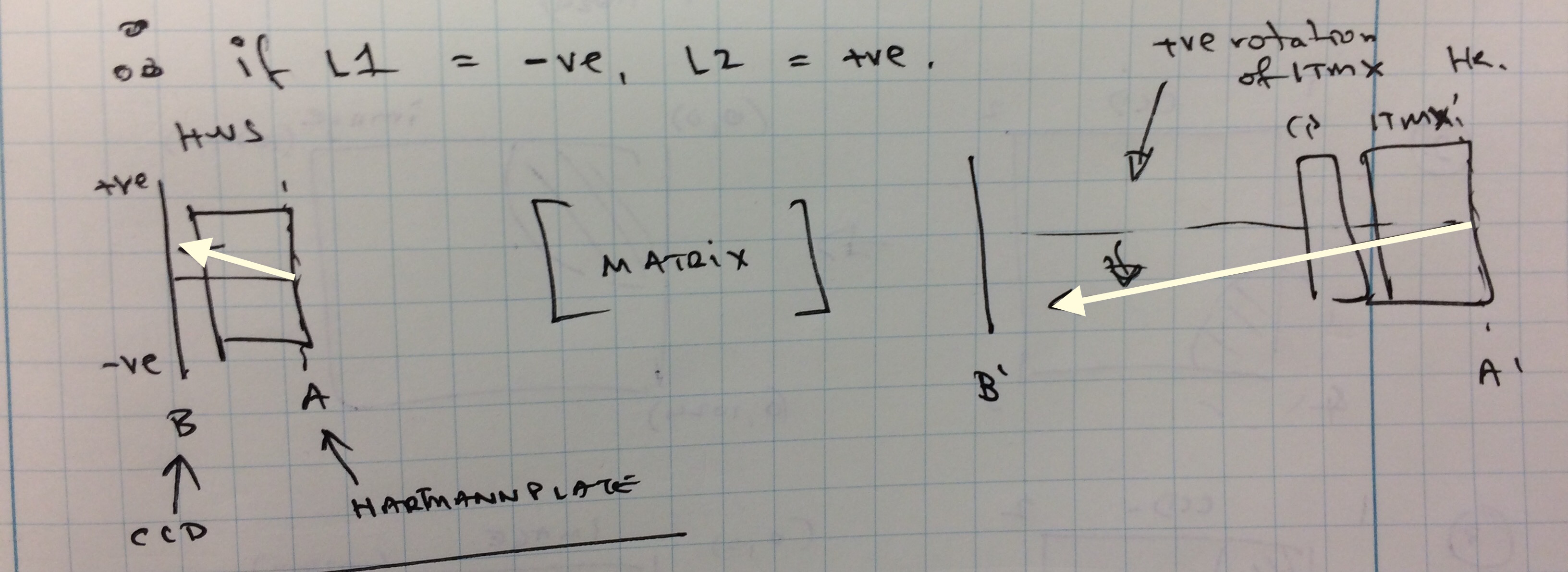

1. ABCD matrix for ITMX to HWSX (T1000179):

| -0.0572   | -0.000647 |
| 0.0035809 | -17.4852  |

So, nominally the X and Y coordinates are inverted by this matrix. However, the X coordinates will also be inverted by each horizontal reflection off a mirror. Fortunately, there is an even number of horizontal reflections (plus the periscope, but the upper and lower mirrors cancel each other).

Therefore, we can illustrate the optical system of the HWS as below:

As viewed from above, the return beam propagates from ITMX back toward the HWSX (from right to left in this image). A positive rotation of ITMX in YAW is a counter-clockwise rotation of ITMX when viewed from above. So the return beam rotates down in the image as illustrated. The conjugate plane of the HWS Hartmann plate (plane A) is at the ITMX HR surface (plane A'). The conjugate plane of the HWS CCD (plane B) is approximately 3m from the ITMX HR surface (going into the PRC - plane B').

The even mirror reflections cancel each other out. The only thing left is the inversion from the ABCD matrix. Hence, the ray that rotates counter-clockwise at ITMX rotates clockwise at the HWS - as illustrated here. In this case, towards the right of the HWS CCD.

Lastly, the HWS CCD coordinate system is defined as shown here (with the origin in the lower-left). I verified this in the lab this morning.

Therefore: the orientation in aLOG 35336 is correct.
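The sign argument from the ABCD matrix above can be checked numerically. The following is only a minimal sketch: the matrix values are the ones quoted in this entry, while the function name `propagate` and the unit-valued test rays are illustrative assumptions, not part of the original analysis.

```python
import numpy as np

# ABCD matrix for ITMX to HWSX, values as quoted in this entry (T1000179).
M = np.array([[-0.0572, -0.000647],
              [0.0035809, -17.4852]])

def propagate(x, theta):
    """Map a ray (position x, angle theta) at ITMX to the HWS.

    Hypothetical helper for illustration; units follow whatever
    convention the matrix in T1000179 uses.
    """
    return M @ np.array([x, theta])

# A ray transfer matrix between planes in the same medium should have
# determinant close to 1.
det = np.linalg.det(M)

# Pure position offset at ITMX: the output position has the opposite sign.
x_from_offset, _ = propagate(1.0, 0.0)

# Pure tilt at ITMX: both output position and output angle have the opposite sign.
x_from_tilt, theta_from_tilt = propagate(0.0, 1.0)

print(f"det(M) = {det:.5f}")
print(f"offset -> x_out = {x_from_offset:+.4f}")
print(f"tilt   -> x_out = {x_from_tilt:+.6f}, theta_out = {theta_from_tilt:+.4f}")
```

All four matrix-driven outputs for positive inputs come out negative except the C term, which is consistent with the statement that the matrix alone inverts the image, leaving the mirror reflections as the only other sign changes to count.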

J. Kissel

Gathered regular bi-weekly calibration / sensing function measurements. Preliminary results (screenshots) attached; analysis to come.

The data have been saved and committed to:

/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/O2/H1/Measurements/SensingFunctionTFs

2017-03-21_H1DARM_OLGTF_4to1200Hz_25min.xml

2017-03-21_H1_PCAL2DARMTF_4to1200Hz_8min.xml

2017-03-06_H1_PCAL2DARMTF_BB_5to1000Hz_0p25BW_250avgs_5min.xml

J. Kissel

After processing the above measurement, the fit optical plant parameters are as follows:

| Parameter | Units | Value | Uncertainty (+/-) |
| --- | --- | --- | --- |
| DARM_IN1/OMC_DCPD_SUM | ct/mA | 2.925e-7 | |
| Optical Gain | ct/m | 1.110e6 | 1.6e3 |
| Optical Gain | mA/pm | 3.795 | 0.0053 |
| Coupled Cavity Pole Freq | Hz | 355.1 | 2.6 |
| Residual Sensing Delay | us | 1.189 | 1.7 |
| SRC Detuning Spring Freq | Hz | 6.49 | 0.06 |
| SRC Detuning Quality Factor | (dimensionless) | 25.9336 | 6.39 |
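The two optical gain figures above are related through the DARM_IN1/OMC_DCPD_SUM factor. A minimal sketch of that unit conversion follows, assuming the [mA/pm] value is the same optical gain expressed in different units; the constant and function names are illustrative only.

```python
# Values quoted in the fit results above.
CT_PER_MA = 2.925e-7          # DARM_IN1/OMC_DCPD_SUM [ct/mA]
GAIN_MA_PER_PM = 3.795        # optical gain [mA/pm]
GAIN_UNC_MA_PER_PM = 0.0053   # its quoted uncertainty [mA/pm]

PM_PER_M = 1e12               # picometers per meter

def ma_per_pm_to_ct_per_m(value_ma_per_pm):
    """Convert an optical gain from mA/pm to ct/m (hypothetical helper)."""
    return value_ma_per_pm * PM_PER_M * CT_PER_MA

gain_ct_per_m = ma_per_pm_to_ct_per_m(GAIN_MA_PER_PM)
unc_ct_per_m = ma_per_pm_to_ct_per_m(GAIN_UNC_MA_PER_PM)

print(f"Optical gain: {gain_ct_per_m:.4g} ct/m (+/- {unc_ct_per_m:.2g})")
```

The result agrees with the quoted 1.110e6 ct/m and its uncertainty to within rounding.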

Attached are plots of the fit, and of how these parameters fit within the context of all measurements from O2.

In addition, given that the detuning spring frequency has ranged between, say, 6.5 Hz and 9 Hz over the course of these measurements, I show the magnitude ratio of two toy transfer functions, where the only difference is the spring frequency. One can see that, if not compensated for, this means a systematic magnitude error of 5%, 10%, and 27% at 30, 20, and 10 Hz, respectively.

Bad news for black holes! We definitely need to track this time dependence, as was prototyped in LHO aLOG 35041.
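The toy comparison described above can be sketched as follows. This is not the script used for the attached plot: the functional form f^2 / (f^2 + f_s^2 - i f f_s / Q) for the detuning term, the choice Q = 25.9 (taken from the fit above), and all function names are assumptions made for illustration.

```python
import numpy as np

def detuning_factor(f, f_s, Q):
    """Assumed toy SRC detuning term of the sensing function.

    f   : frequency [Hz] (scalar or array)
    f_s : detuning spring frequency [Hz]
    Q   : detuning quality factor
    """
    f = np.asarray(f, dtype=float)
    return f**2 / (f**2 + f_s**2 - 1j * f * f_s / Q)

def magnitude_ratio(f, f_s_a, f_s_b, Q):
    """Magnitude ratio of two toy terms differing only in spring frequency."""
    return np.abs(detuning_factor(f, f_s_a, Q)) / np.abs(detuning_factor(f, f_s_b, Q))

freqs = np.array([10.0, 20.0, 30.0])
ratio = magnitude_ratio(freqs, 6.5, 9.0, Q=25.9)
percent_error = 100.0 * (ratio - 1.0)

for f, p in zip(freqs, percent_error):
    print(f"{f:4.0f} Hz: {p:.1f}% magnitude difference")
```

With these assumed values the sketch gives roughly 27% at 10 Hz, matching the quoted number, and roughly 9% and 4% at 20 and 30 Hz, a little below the quoted 10% and 5%, which is plausible if the original toy model used different parameter values.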

Attached are plots comparing the sensing and response functions with and without the detuning frequency. Compared to LLO (a-log 32930), at LHO the detuning frequency of ~7 Hz has a significant effect on the calibration around 20 Hz (see response function plot). The code used to make this plot has been added to the SVN:

/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/O2/H1/Scripts/SRCDetuning/springFreqEffect.m

Attached are plots showing differences in sensing functions and response functions for spring frequencies of 6 Hz and 9 Hz. Coincidentally they are very similar to the plots in the previous comment which show differences when the spring frequencies are 0 Hz and 6.91 Hz.