GariLynn Billingsley, Travis Sadecki, Betsy Weaver, Calum Torrie, Nicki Washington (and a BIG support crew)

ITMx inspection with green light and a portable microscope revealed several scattering sites. The centermost sites were located high and right of center. (Camera images will be attached soon.) One of these (the brightest and closest to center) is shown in the attached picture. It measures roughly 0.5 mm across the halo.

There were many scatter sites of the same character all the way out to the edge of the optic. At the edge we attempted to clean one of these sites with each of the following: IPA, acetone, red FC and clear FC. None of them had any effect.

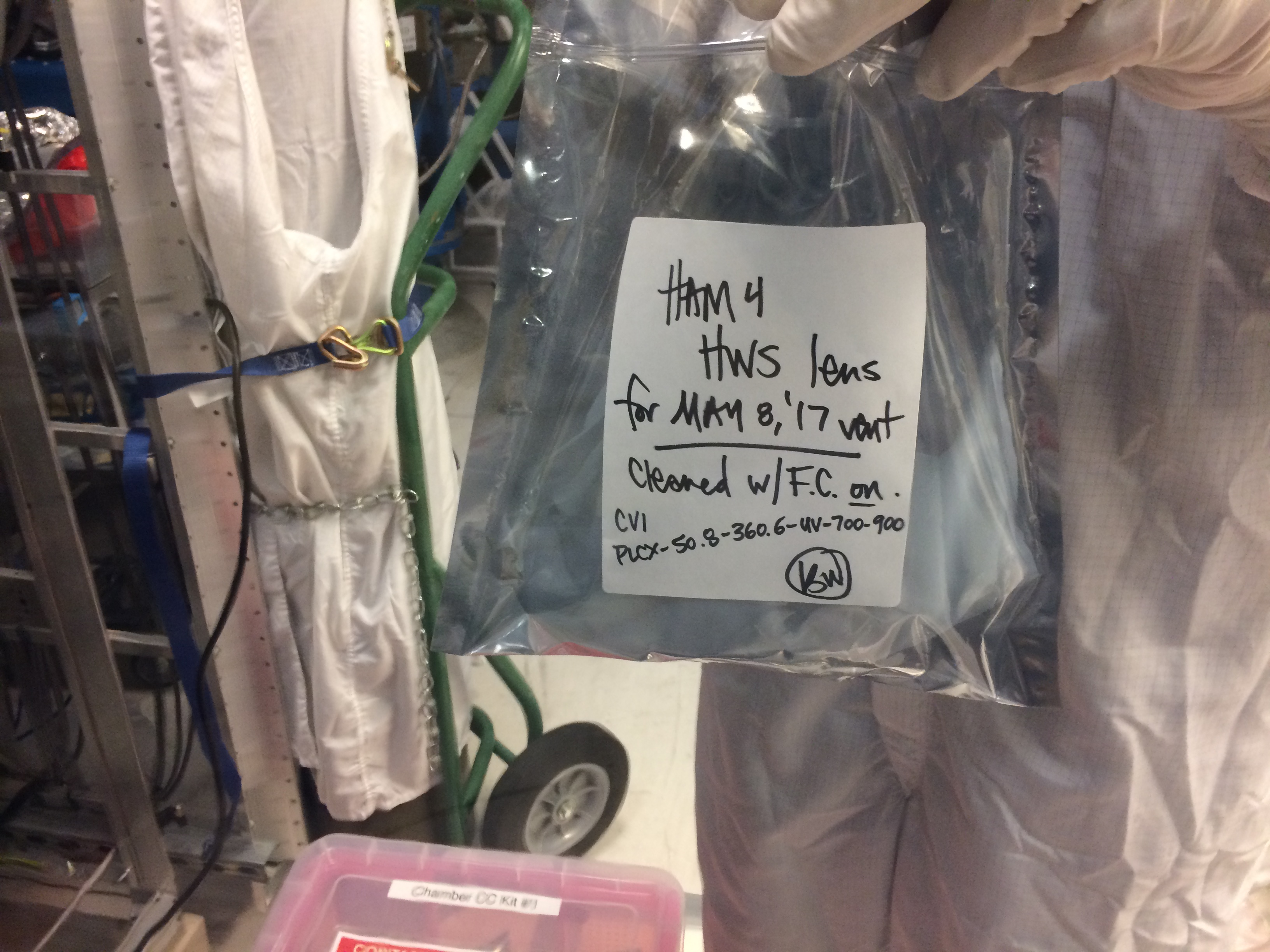

Following this local cleaning and the overall inspection, we cleaned the entire HR face per the normal procedure, i.e. with red First Contact (FC) spray along with the cone, etc.

Subsequent inspection showed no change to these scattering sites.

For the full chamber report, see the alog by Betsy.

GariLynn and team.

The RF source (24 MHz) is now reinstalled at EY. Both the timing and readback signal errors have cleared.

1. Timing error for RF source EY was reported.

2. Unit was power cycled and the timing fiber reseated. Unit locked according to the front-panel LEDs.

3. The readback frequency, error, and control signal all displayed incorrect values in MEDM.

4. Looked at RF levels and frequency outputs. All within spec.

5. Unit was brought back to EE lab.

6. The input IC buffer on the timing interface 1 PPS locking board was replaced. We later convinced ourselves that the IC buffer never had an issue.

7. The FPGA on the timing slave was reprogrammed. We used the same Version 4 subversion 118 code.

8. Unit was reinstalled at EY. Unit locked within 5 minutes and all readback signals now show expected values.

F. Clara, R. McCarthy, M. Pirello