(Aidan B, Corey G, TJ S, Jim W, PLUS much assistance from the Vacuum Team, Betsy W for prep work, & Jeff B. for staging of Contamination Control supplies)

All times in PDT

On Tuesday (May 9th), multiple in-vacuum tasks were on the docket for H1; this log covers the work at/inside HAM4: replacement of the H1 HWSY VAC LENS. For this work we followed the Vent Plan (E1700124), STEPS #32 - 39, and also used the Chamber Entry & Exit Procedure (E1201035, sheet 4). Attached are some photo highlights from this job (all photos are on ResourceSpace [links below]).

HAM4 was open from roughly 10am to 2pm, with only minimal entry into the chamber (access was from the North side, and only arms/upper body entered the chamber for this task).

NOTE: During this activity there was an Alert/Site Area Emergency event on the Hanford site (the first time this has happened in the ~20 years LHO has been here).

- ~8:30: Alert issued; within the next hour or so, LIGO (& other areas) were asked to shelter in place (i.e. not leave buildings).

- ~12:00: Non-essential employees were released from work. This was also the case here at LHO. Essential LHO staff working on in-vacuum tasks were asked to remain until activities were complete.

- We continued our work throughout this time.

Summary of HAM4 Activities:

- ~10:00am: HAM4 door removed by Vacuum Team [STEP 31]

- Particle Counter Readings Taken: [STEP 32]

| | 0.3 µm (particles/L) | 0.5 µm (particles/L) |
|---|---|---|
| Before Door Removed | 68 | 12 |
| After Door Removed | 5 | 1 |
| In-Chamber | 11 | 4 |

- 10:23: Entering Cleanroom for Lens Swap Task

- 10:49: Cloth Cover Removed

- 10:52: No Contamination Control wafers were on the HAM4 table. [STEP 32]

- HAM4 ISI's NW & NE Lockers were locked by Corey (Jim was still at BSC3). [STEP 34]

- 10:56: PEEK Lens Retaining Ring removed (it was torqued on fairly hard, but we were eventually able to remove it with a small allen key). [STEP 34]

- 10:57: Lens removed (barrel marking indicated this was a PLCC [concave] lens). [STEP 34]

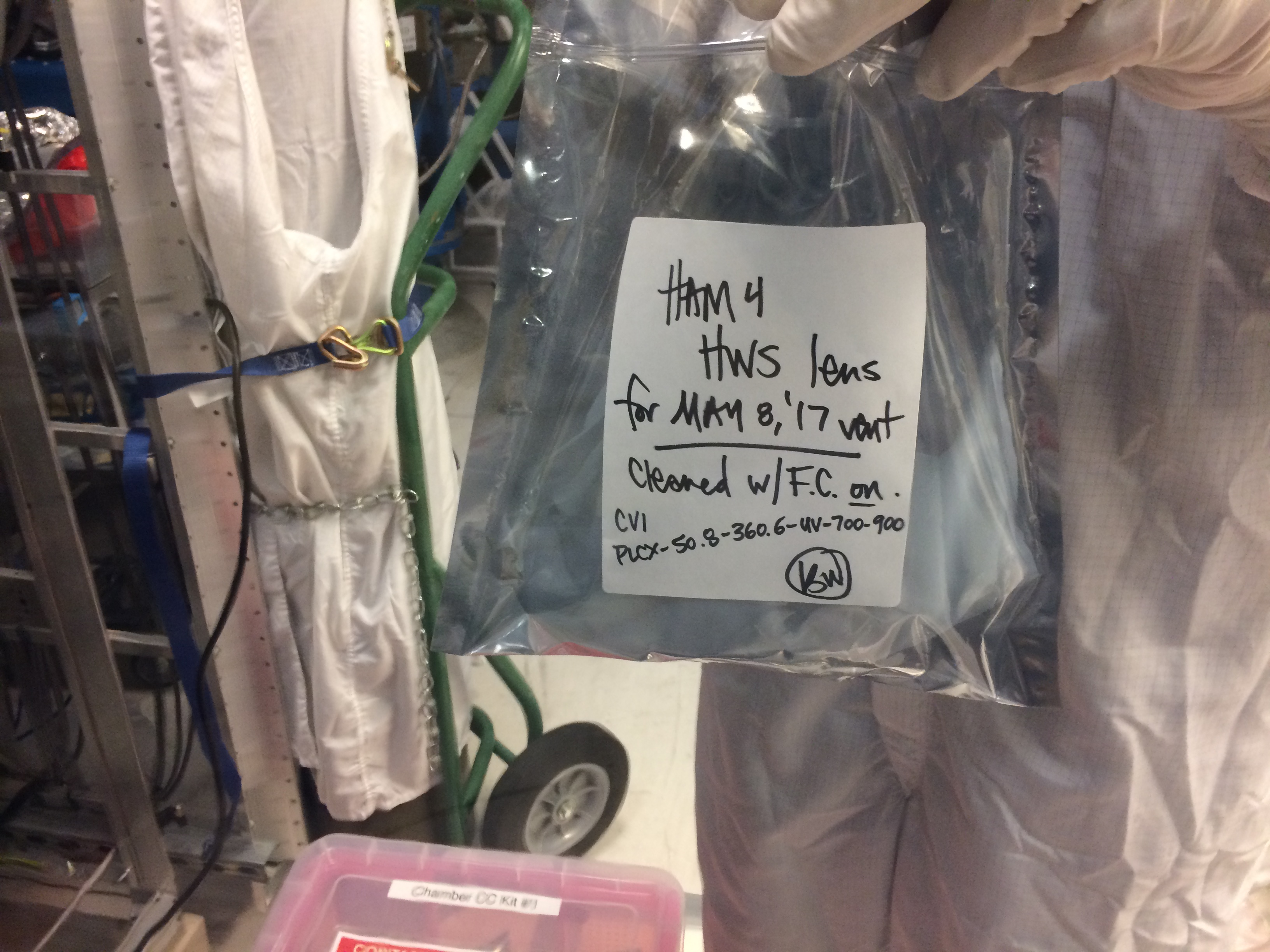

- 11:00-11:05: Installed the H1 HWSY VAC LENS (see T1000179). This lens had First Contact (FC) on it. Aidan's instruction was "curved surface facing inside chamber". It was hard to identify the curved surface with FC on the lens, so TJ & I made a best guess and installed the lens (because FC was on both lens faces, we could handle the lens by its faces to install it in the mount). Retaining ring torqued down by hand with a small allen key. [STEP 35]

- 11:05-11:08: TJ adjusted the Ion Gun to 20-30 psi & we worked on removing the FC from the curved surface (i.e. the lens surface facing inside the chamber). With TJ holding the gun's air on it and my hand holding the PEEK mesh tab, this FC came off easily. The other FC was a little tougher to remove because it is recessed within the mount, but it eventually came off, and no residual FC was observed on the lens. [STEP 35]

- 11:10-11:15: Robert took photos down the tube & then I began taking many photos of HAM4. [STEP 36]

- I'm a little disappointed with the photos. Because of the low light, the difficulty seeing images on the camera screen, and trying to work fast, many photos are a bit blurry. HAM4 photos are on ResourceSpace (good ones & blurry ones).

- 11:15-11:18: Aidan asked TJ & me to look through the new lens to see what images looked like: "Objects looked bigger". Aidan approved.

- By comparison, looking through the lens we pulled out made objects look "smaller".

- 11:25: Cloth Cover on Chamber

- 11:30-1:10pm: Transfer Function measurements made by Jim, Betsy, & Kiwamu. There were issues running measurements from the laptop near HAM4, so the measurements were completed in the Control Room.

- During this time Jim briefly opened cloth cover to UNLOCK HAM4 ISI. [STEP 37]

- SR2 & HAM4ISI transfer functions ACCEPTABLE.

- 1:00-1:15: Obtained Door/Chamber Closure signatures (E1700161). [STEP 38]

- 1:20-1:24: Installed a new Contamination Control wafer. [STEP 38]

- Took another round of photos

- Performed Chamber Exit steps

- 1:30: HAM4 door installed by Vacuum Team [STEP 39]

- 2:00: HAM4 sealed & in-vacuum task COMPLETE

The new lens is from the bag (and box) labeled: CVI PLCX-50.8-360.6-UV-700-900, per Table 2 of T1000179-v20

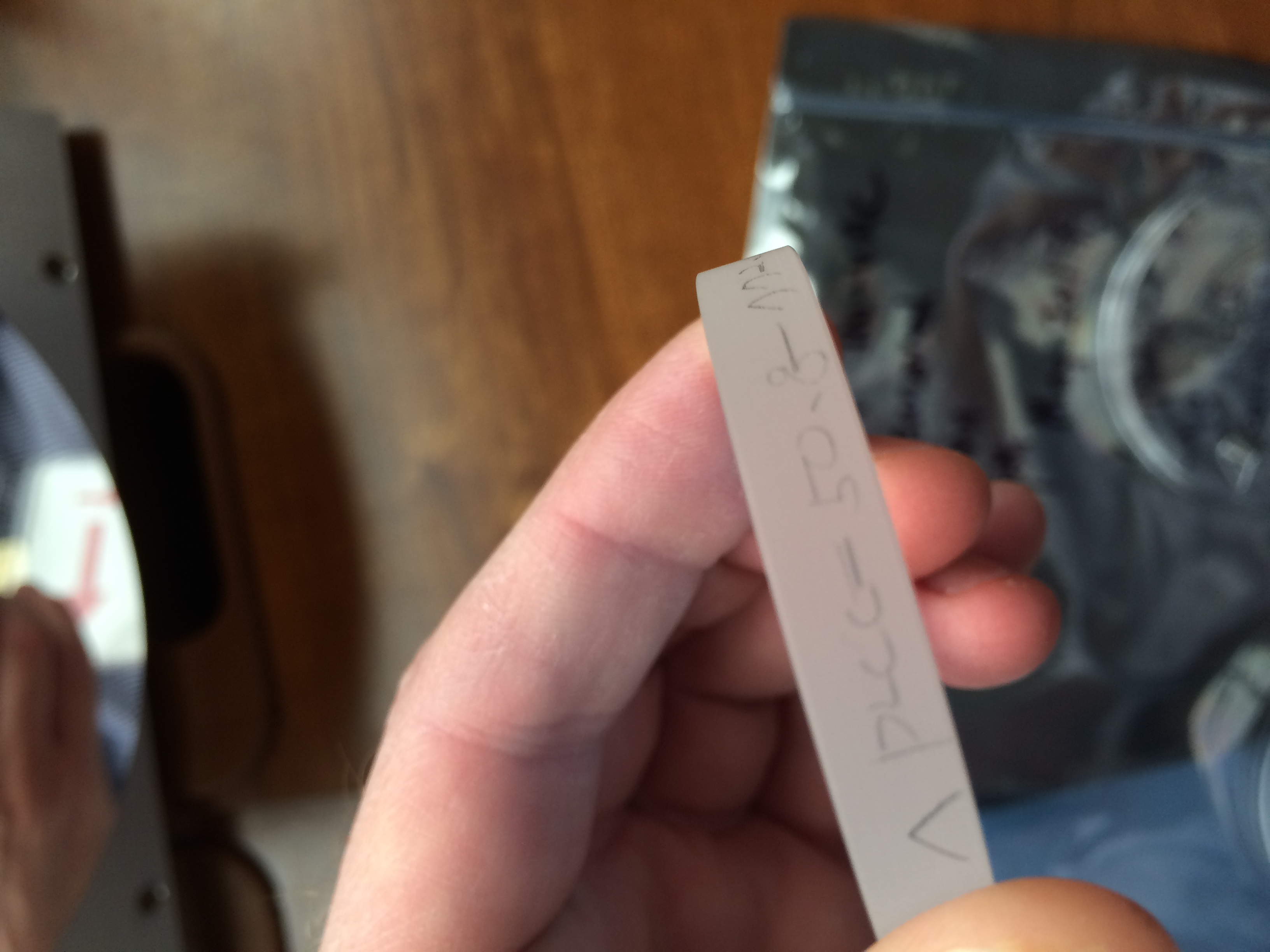

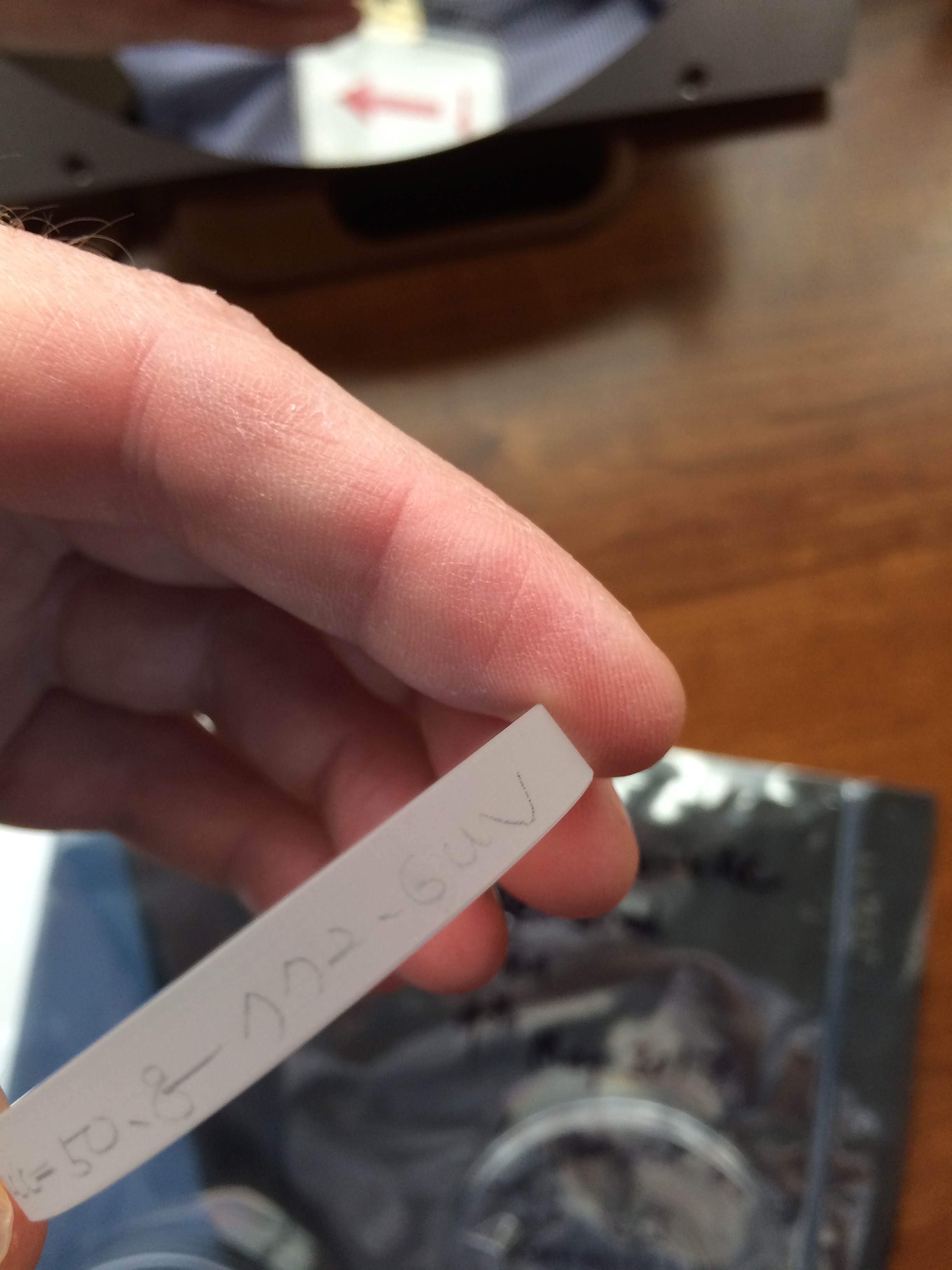

The old lens had a label penciled onto its side, which read: PLCC-50.8-772.6-UV

This confirms our educated guess from October 2014 (T1400686, page 2).
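For a quick sanity check of the two labels, here is a minimal sketch. It assumes the CVI fields are type prefix (PLCX = plano-convex, PLCC = plano-concave), diameter in mm, radius of curvature in mm, then material/coating, and it uses the thin plano-lens relation f = ±R/(n - 1) with an assumed fused-silica index of about 1.453 near 800 nm. The function name `decode_cvi_label` is hypothetical, and the focal lengths it gives are rough estimates, not catalogue values. A positive (converging) result for the new lens and a negative (diverging) one for the old lens is consistent with objects looking "bigger" through the new lens and "smaller" through the old one.

```python
from dataclasses import dataclass

# Assumed meaning of the CVI type prefixes (plano-convex vs plano-concave).
LENS_TYPES = {"PLCX": "plano-convex", "PLCC": "plano-concave"}


@dataclass
class Lens:
    kind: str
    diameter_mm: float
    radius_mm: float
    focal_length_mm: float


def decode_cvi_label(label: str, n: float = 1.453) -> Lens:
    """Hypothetical decoder for labels like 'PLCX-50.8-360.6-UV-700-900'.

    Assumes the field order PREFIX-DIAMETER-RADIUS-MATERIAL[-COATING...],
    lengths in mm, and a thin plano lens so that f = +/- R / (n - 1).
    The default n is an assumed fused-silica index near 800 nm.
    """
    prefix, diameter, radius, *_rest = label.split("-")
    if prefix not in LENS_TYPES:
        raise ValueError(f"unknown lens prefix: {prefix}")
    r = float(radius)
    # Convex surface -> converging (positive f); concave -> diverging (negative f).
    sign = 1.0 if prefix == "PLCX" else -1.0
    return Lens(LENS_TYPES[prefix], float(diameter), r, sign * r / (n - 1.0))


# New lens (from the bag/box label) and old lens (penciled label).
for label in ("PLCX-50.8-360.6-UV-700-900", "PLCC-50.8-772.6-UV"):
    lens = decode_cvi_label(label)
    print(f"{label}: {lens.kind}, estimated f = {lens.focal_length_mm:.0f} mm")
```

Only the sign and rough magnitude matter here; the exact focal length depends on the true refractive index at the operating wavelength.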