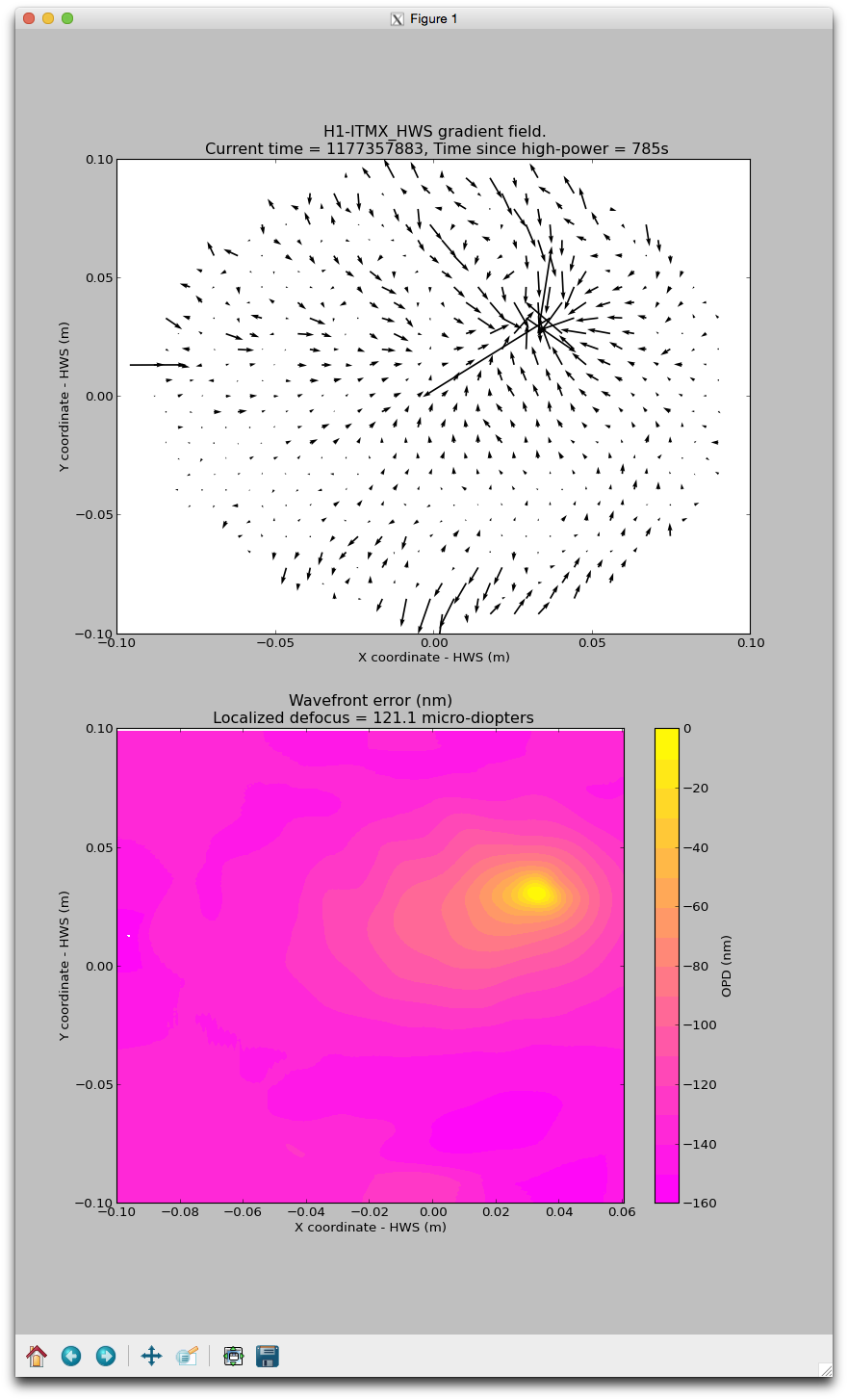

Summary: no apparent change in the induced wavefront from the point absorber.

After a request from Sheila and Kiwamu, I checked the status of the ITMX point absorber with the HWS.

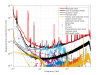

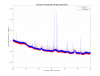

If I look at the wavefront approximately 13 minutes after lock acquisition, I see the same magnitude of optical path distortion (OPD) across the wavefront (approximately 60 nm change over 20 mm). This is the same scale of OPD that was seen around 17-March-2017.
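For scale, the roughly 60 nm over 20 mm figure can be turned into an average wavefront slope. The sketch below assumes the OPD changes linearly over that distance, which is a simplification rather than something read off the HWS data; the function name `mean_opd_slope` is made up for illustration.

```python
def mean_opd_slope(delta_opd_m: float, distance_m: float) -> float:
    """Average wavefront slope in radians for an OPD change over a distance.

    Assumes a linear change in OPD across the distance (an illustrative
    simplification, not a fit to the measured wavefront).
    """
    if distance_m <= 0:
        raise ValueError("distance_m must be positive")
    return delta_opd_m / distance_m


# Values quoted in the entry: ~60 nm of OPD change over ~20 mm.
slope_rad = mean_opd_slope(60e-9, 20e-3)
print(f"mean OPD slope: {slope_rad * 1e6:.1f} urad")
```

Under that assumption the average slope comes out at about 3 microradians.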

Note that the whole pattern has shifted slightly because of some on-table work in which a pick-off beam-splitter was placed in front of the HWS.

Thanks Aidan.

We were wondering about this because of the reappearance of the broad noise lump from 300-800 Hz in the last week, which is clearly visible on the summary pages (links in this alog). We also now have broad coherence between DARM and IMC WFS B pit DC, which I do not think we have had before today. We didn't see any obvious alignment shift that could have caused this. It also seems to be getting better or going away if you look at today's summary page.

Here is a bruco for the time when the jitter noise was high: https://ldas-jobs.ligo-wa.caltech.edu/~sheila.dwyer/bruco_April27/