Brett, Jeff and I have been looking a little at Brett's suspension model to assess how well our damping design (both top mass and oplev) performs at high power, following some conversations at the LLO commissioning meeting. Today I turned off oplev damping on all test masses.

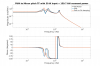

Top mass damping:

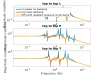

The first attached plot shows the magnitudes of the top-to-top transfer functions for L, P, and Y in 3 different states: no damping and no power in the arms; top mass damping only and no power in the arms; and top mass damping only with 30 Watts of input power. While it would be possible to design better damping loops to handle the radiation pressure changes in the plant, nothing problematic happens at 30 Watts. I also looked at 50 Watts input power, and there aren't any large undamped features there either. I'm not plotting T, V, and R because, according to the model, radiation pressure has no impact on them.

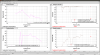

Oplev damping:

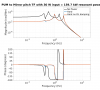

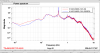

The next three plots show the transfer functions from PUM to test mass pitch in the optic basis, the hard basis, and the soft basis. It is clear that we should not try to damp the modes above 1 Hz in the optic basis, so if we really want to use oplev damping we should do it in the radiation pressure basis, probably with a power-dependent damping filter. The next two plots show the impact on the hard and soft modes. The impact of the oplev damping on the hard modes (and therefore on the hard ASC loops) is minimal, but there is an impact on the soft loops around 0.5 Hz. DSOFT pitch has a low bandwidth, but we use a bandwidth of roughly 0.7 Hz for CSOFT P to suppress the radiation pressure instability.
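For reference, the hard/soft (radiation pressure) basis comes from diagonalizing the radiation-pressure pitch stiffness of the two arm mirrors. The sketch below uses the standard Sidles-Sigg form of that 2x2 stiffness matrix. It is a static, single-arm calculation that ignores the suspension dynamics; the arm length and radii of curvature are nominal aLIGO design values assumed here, the 100 kW arm power is hypothetical, and the helper names are made up for illustration. Tilt and torque sign conventions differ between references; they flip the relative sign of the eigenvector components but not the eigenvalues.

```python
import numpy as np

C = 299_792_458.0  # speed of light [m/s]


def rp_stiffness(p_arm, length, g_itm, g_etm):
    """Radiation-pressure pitch stiffness matrix [N m/rad] for the (ITM, ETM) pair.

    Sidles-Sigg form: K = -(2 P L / (c (1 - g1 g2))) * [[g2, 1], [1, g1]],
    with g1 = g_itm and g2 = g_etm. A positive eigenvalue is a restoring
    (stiffening) spring; a negative one is anti-restoring (softening).
    """
    pref = 2.0 * p_arm * length / (C * (1.0 - g_itm * g_etm))
    return -pref * np.array([[g_etm, 1.0], [1.0, g_itm]])


def hard_soft_modes(p_arm, length, g_itm, g_etm):
    """Return {'soft': (k, v), 'hard': (k, v)}: stiffness k and unit (ITM, ETM) vector v."""
    k = rp_stiffness(p_arm, length, g_itm, g_etm)
    vals, vecs = np.linalg.eigh(k)  # symmetric matrix; eigenvalues come back ascending
    return {"soft": (vals[0], vecs[:, 0]), "hard": (vals[1], vecs[:, 1])}


# Nominal aLIGO arm geometry (assumed) and a hypothetical 100 kW arm power.
L_ARM = 3994.5                # arm length [m]
G_ITM = 1.0 - L_ARM / 1934.0  # ITM radius of curvature 1934 m
G_ETM = 1.0 - L_ARM / 2245.0  # ETM radius of curvature 2245 m

modes = hard_soft_modes(100e3, L_ARM, G_ITM, G_ETM)
for name, (k, v) in modes.items():
    print(f"{name}: k = {k:+.2f} N m/rad, (ITM, ETM) weights = {np.round(v, 2)}")
```

The soft eigenvalue comes out negative and much smaller in magnitude than the hard one, which is consistent with the soft modes being the ones that need loop gain (CSOFT P) to stay stable at high power. Both stiffnesses scale linearly with arm power, which is one way to motivate a power-dependent damping filter in this basis.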

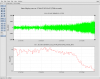

Krishna had noted that the ITMY oplev seems to have been causing several problems recently (alog 34351), and this afternoon we think that it caused a lockloss. I tried simply turning the oplev damping off, which was fine, except that we saw signs of the radiation pressure instability returning after a few minutes at 30 Watts. I increased the CSOFT pitch gain by a factor of 2 (from 0.2 to 0.4), and the instability went away. The last attached plot is a measurement of the CSOFT pitch loop at 2 Watts with no oplev damping, taken before I increased the gain to 0.4.
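A rough way to estimate how much bandwidth a gain change buys: if the open-loop gain falls as a single power law f^-n near crossover, multiplying the gain by r moves the unity-gain frequency by a factor of r^(1/n). The sketch below assumes exactly that single-slope model and ignores the power-dependent change in the soft-mode plant; the 0.7 Hz starting point is the approximate CSOFT P bandwidth quoted above, used only for illustration, and the function name is hypothetical.

```python
def ugf_after_gain_change(f_ugf, gain_ratio, slope):
    """Estimate the unity-gain frequency after multiplying a loop's gain.

    Assumes |G(f)| = (f_ugf / f) ** slope near crossover, so scaling the
    gain by gain_ratio moves the crossover to f_ugf * gain_ratio ** (1 / slope).
    """
    if slope <= 0:
        raise ValueError("slope must be positive (open-loop gain falling with frequency)")
    if gain_ratio <= 0:
        raise ValueError("gain_ratio must be positive")
    return f_ugf * gain_ratio ** (1.0 / slope)


# Hypothetical example: gain 0.2 -> 0.4 (ratio 2), starting from a 0.7 Hz crossover.
for n in (1.0, 2.0):
    print(f"slope f^-{n:g}: UGF ~ {ugf_after_gain_change(0.7, 0.4 / 0.2, n):.2f} Hz")
```

With a 1/f slope the crossover doubles; with a steeper 1/f^2 slope it moves by only about 40%. A measured open-loop transfer function, like the CSOFT pitch measurement above, is the better guide for the real loop.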

The guardian is now set not to turn on the oplev damping, and this is accepted in SDF. Hopefully this saves us some problems, but it is probably still a good idea to fix the ITMY oplev on Tuesday.