Jim, Dave:

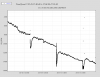

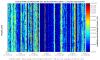

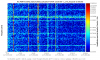

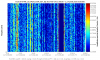

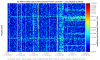

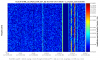

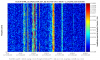

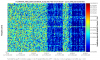

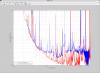

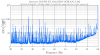

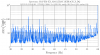

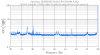

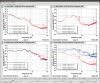

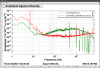

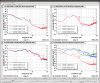

At 15:44:58 UTC (08:44:58 PDT) we received a timing error that lasted only one second. The error was reported by the independent CNS-II GPS receivers at both end stations: both went into the 'Waiting for GPS lock' error state at 15:44:58, stayed there for one second, and then returned to normal. The IRIG-B signals from these receivers are acquired by the DAQ (and monitored by GDS). The IRIG-B signals for the second before the error, the second of the error, and the following two seconds (4 seconds in total) are shown below.

As can be seen, even though EX and EY both reported the error, only EX's IRIG-B is missing during the bad second.

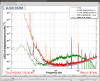

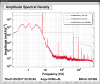

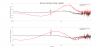
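To make it concrete what "encoded seconds" means here, the sketch below reads the seconds field out of one IRIG-B frame. It assumes unmodulated IRIG-B (100 pulses per second, 10 ms per bit) whose pulse high-times have already been measured from the DAQ samples, with nominal widths of 2 ms for a binary 0, 5 ms for a binary 1 and 8 ms for a marker. The frame starts at the second of two consecutive markers; seconds units sit in bits 1-4 and seconds tens in bits 6-8 (BCD). The function names and the midpoint thresholds are hypothetical illustrations, not the DAQ or GDS implementation.

```python
from typing import List, Optional

# Bit symbols for unmodulated IRIG-B (assumed 2 ms / 5 ms / 8 ms nominal high-times).
ZERO, ONE, MARKER = 0, 1, 2


def classify(width_ms: float) -> int:
    """Map a measured pulse high-time to a symbol using hypothetical midpoint thresholds."""
    if width_ms < 3.5:   # nominal 2 ms -> binary 0
        return ZERO
    if width_ms < 6.5:   # nominal 5 ms -> binary 1
        return ONE
    return MARKER        # nominal 8 ms -> position/reference marker


def decode_seconds(widths_ms: List[float]) -> Optional[int]:
    """Return the seconds field of the first complete frame, or None if none is found."""
    symbols = [classify(w) for w in widths_ms]
    for i in range(1, len(symbols)):
        # Two consecutive markers (P0 then Pr) mark the start of a frame at index i.
        if symbols[i - 1] == MARKER and symbols[i] == MARKER:
            start = i
            if start + 9 >= len(symbols):
                return None  # not enough bits to hold the seconds field
            bits = symbols[start:start + 10]
            if bits[9] != MARKER:
                return None  # P1 should close the seconds field
            units = sum(w for b, w in zip(bits[1:5], (1, 2, 4, 8)) if b == ONE)
            tens = sum(w for b, w in zip(bits[6:9], (10, 20, 40)) if b == ONE)
            return tens + units
    return None  # no frame start: e.g. a flat, missing signal


# Pulse widths (ms) for P0, Pr and the seconds field of a frame encoding second 16.
widths = [8.0, 8.0, 2.0, 5.0, 5.0, 2.0, 2.0, 5.0, 2.0, 2.0, 8.0]
print(decode_seconds(widths))  # 16
```

A flat line with no pulses, as in EX's bad second, yields no frame start, so the sketch reports `None` ("missing") rather than guessing a value.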

The seconds encoded in the IRIG-B signals are shown in the table below. Note that the encoded time is GPS time, which does not have leap seconds applied, so GPS = UTC + 18 s; the error second (15:44:58 UTC) therefore appears as second 16.

| Actual seconds | EX IRIG-B seconds | EY IRIG-B seconds |
|---|---|---|
| 15 | 15 | 15 |
| 16 | missing | 16 |
| 17 | 16 | 17 |
| 18 | 18 | 18 |
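As a check on the "Actual seconds" column, the sketch below shifts the UTC times of the four-second window by the 18 s GPS - UTC offset quoted above. It works on time of day only (the date is not given here, so day rollover is simply wrapped), and the helper name is hypothetical.

```python
GPS_MINUS_UTC_S = 18      # GPS - UTC offset quoted in this entry
SECONDS_PER_DAY = 86400


def utc_hms_to_gps_hms(h: int, m: int, s: int) -> tuple:
    """Shift a UTC time of day to GPS time of day (date rollover is not tracked)."""
    total = (h * 3600 + m * 60 + s + GPS_MINUS_UTC_S) % SECONDS_PER_DAY
    return total // 3600, (total % 3600) // 60, total % 60


# One second before the error, the error second, and the two seconds after it (UTC).
window_utc = [(15, 44, 57), (15, 44, 58), (15, 44, 59), (15, 45, 0)]
window_gps_seconds = [utc_hms_to_gps_hms(*t)[2] for t in window_utc]
print(utc_hms_to_gps_hms(15, 44, 58))  # (15, 45, 16)
print(window_gps_seconds)              # [15, 16, 17, 18]
```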

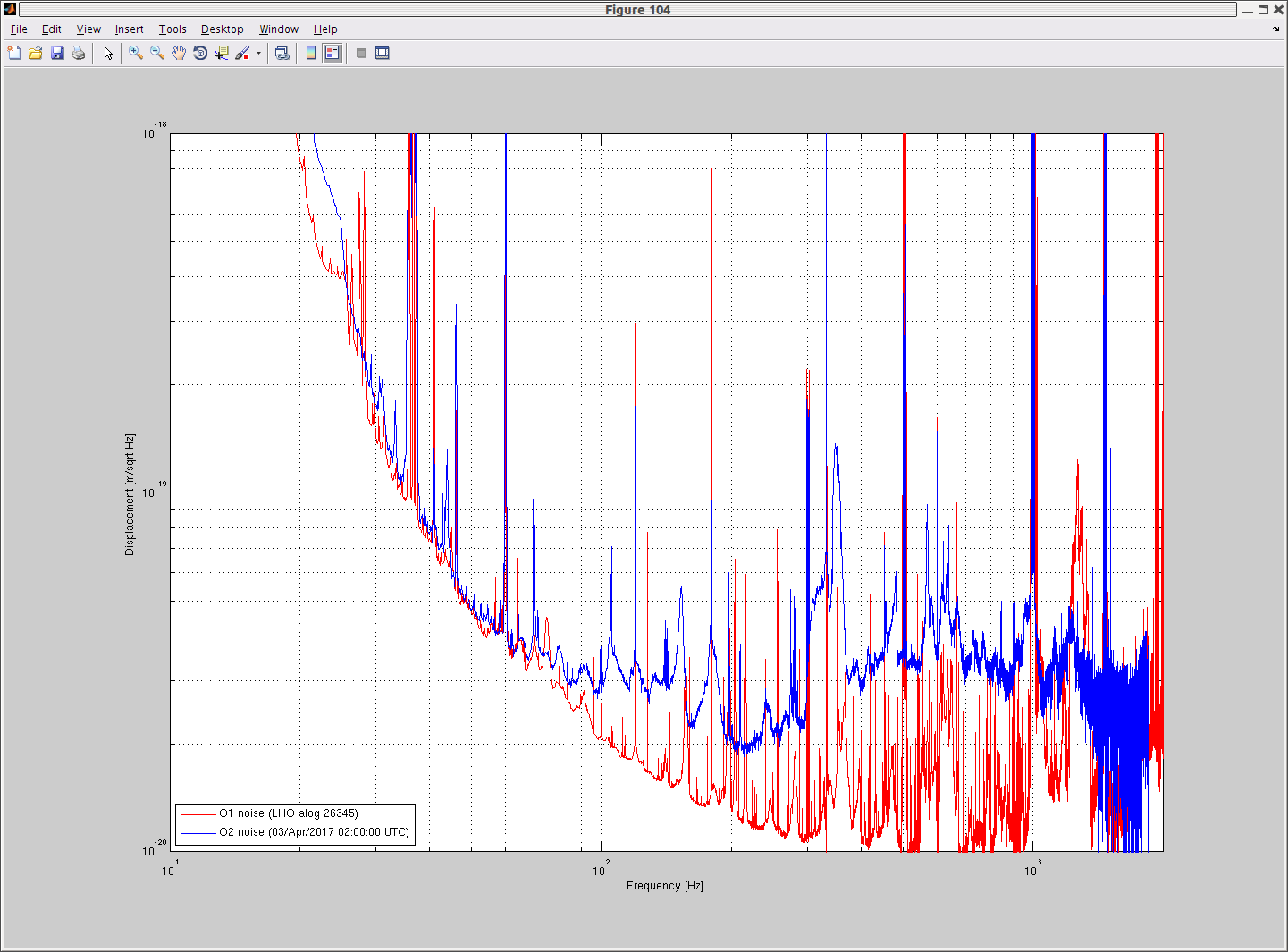

So EY's encoded seconds were sequential throughout this period. EX's IRIG-B was missing during second 16, then encoded 16 one second late (during second 17), skipped 17 entirely, and resynced at 18.
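The comparison above can be expressed as a small check over the table values. In this sketch the encoded seconds are transcribed from the table, with `None` standing for "missing". The helpers `label_seconds` and `skipped_seconds` are hypothetical: the first labels each second as ok, missing, late or early, and the second lists actual seconds that never appear in the encoded stream.

```python
from typing import List, Optional


def label_seconds(actual: List[int], encoded: List[Optional[int]]) -> List[str]:
    """Compare each encoded IRIG-B second with the actual second it was received in."""
    labels = []
    for a, e in zip(actual, encoded):
        if e is None:
            labels.append("missing")
        elif e == a:
            labels.append("ok")
        elif e < a:
            labels.append(f"late by {a - e} s")
        else:
            labels.append(f"early by {e - a} s")
    return labels


def skipped_seconds(actual: List[int], encoded: List[Optional[int]]) -> List[int]:
    """Return actual seconds that are never encoded anywhere in the window."""
    seen = {e for e in encoded if e is not None}
    return [a for a in actual if a not in seen]


actual = [15, 16, 17, 18]
ex = [15, None, 16, 18]   # EX column of the table
ey = [15, 16, 17, 18]     # EY column of the table

print(label_seconds(actual, ex))    # ['ok', 'missing', 'late by 1 s', 'ok']
print(skipped_seconds(actual, ex))  # [17]
print(label_seconds(actual, ey))    # ['ok', 'ok', 'ok', 'ok']
```

Second 16 is reported as missing for EX but not as skipped, because its value does appear one second later; only 17 never shows up.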