Summary: Injections suggest that vibration of the input beam tube in the 8-18 Hz band strongly couples to DARM and is the dominant source of noise in the 70-200 Hz band of DARM for transient truck and fire pump signals, and likely also for the continuous signal from the HVAC. Identification of the coupling site is based on the observation that local shaking of the input beam tube produces noise levels in DARM similar to those produced by global corner station vibrations from the fire pump and other sources, for similar RMS at an accelerometer under the Swiss cheese baffle by HAM2. The local shaker injections were insignificant on nearby accelerometers or HEPI L4Cs, and HEPI excitations cannot account for the noise, supporting a local, off-table coupling site in the IMC beam tube. In addition, local vibration injections occasionally produce a 12 Hz broad-band comb, which is also produced by trucks and the fire pump, possibly indicating a 12 Hz baffle resonance. While the Swiss cheese baffle seems the most likely coupling site, we have not yet eliminated the eye baffle by HAM3.

Several recent observations have suggested that we are limited by noise in the 100 Hz region that is produced by vibrations in the 10-30 Hz region. Our range increased by a couple of Mpc when the HVAC was shut down ( https://alog.ligo-wa.caltech.edu/aLOG/index.php?callRep=32886 ), and we have also observed noise in DARM from the fire pump and from trucks.

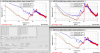

I noticed that the strongest signals in DARM produced by the fire pump and trucks had peaks that were harmonics of about 12 Hz. I injected manually all around the LVEA and found that the input beam tube was the one place where I could produce a 12 Hz comb in the 100 Hz region (injections were sub-50 Hz). Figure 1 shows that a truck, the fire pump, and my manual injections at the input beam tube produced similar upconverted noise in DARM.
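For anyone who wants to check a list of DARM peak frequencies against a 12 Hz comb, here is a minimal sketch of one way to do it. It is not the procedure used for the figures in this entry. The function name `comb_members`, the 0.5 Hz tolerance, and the example peak list are all made up for illustration; only the roughly 12 Hz spacing comes from the observations above.

```python
def comb_members(peaks_hz, spacing_hz=12.0, tol_hz=0.5):
    """Return (frequency, harmonic number) for peaks near multiples of spacing_hz.

    spacing_hz defaults to the ~12 Hz spacing described in this entry;
    tol_hz is an assumed matching tolerance, not a measured linewidth.
    """
    members = []
    for f in peaks_hz:
        n = round(f / spacing_hz)  # nearest harmonic number
        if n >= 1 and abs(f - n * spacing_hz) <= tol_hz:
            members.append((f, n))
    return members


# Hypothetical peak list for illustration only, not measured data.
example_peaks = [84.1, 96.2, 100.0, 107.8, 120.3]
harmonics = [n for _, n in comb_members(example_peaks)]
```

With this made-up list, the 100.0 Hz entry is rejected because it sits 4 Hz from the nearest multiple of 12 Hz, while the other four peaks are tagged as harmonics 7 through 10.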

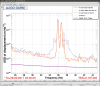

I also used an electromagnetic shaker on the input beam tube. Figure 2 is a spectrogram showing a slow shaker sweep and the strong coupling in the 8-18 Hz band. I wasn’t able to reproduce the broad 12 Hz comb with the shaker, possibly because, as mounted, it didn’t couple well to the 12 Hz mode. But the broad-band noise produced in DARM by the shaker is more typical of trucks and the fire pump: only occasionally does the 12 Hz comb appear. One possibility is that the bounce mode of the baffle is about 12 Hz.
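As an illustration of what a slow sweep drive signal looks like, the sketch below generates a unit-amplitude linear sine sweep across the 8-18 Hz band. The sweep law, duration, and sample rate actually used for the shaker are not recorded in this entry, so the linear ramp, the 100 s duration, and the 256 Hz sample rate here are assumptions, and `linear_sweep` is a hypothetical helper name.

```python
import math


def linear_sweep(f_start, f_stop, duration_s, sample_rate):
    """Return (samples, inst_freqs) for a unit-amplitude linear sine sweep.

    The instantaneous frequency ramps linearly from f_start toward f_stop
    over duration_s seconds.
    """
    k = (f_stop - f_start) / duration_s  # sweep rate in Hz per second
    n = int(duration_s * sample_rate)
    samples, inst_freqs = [], []
    for i in range(n):
        t = i / sample_rate
        # Phase is 2*pi times the integral of the instantaneous frequency.
        phase = 2.0 * math.pi * (f_start * t + 0.5 * k * t * t)
        samples.append(math.sin(phase))
        inst_freqs.append(f_start + k * t)
    return samples, inst_freqs


# Assumed parameters: 8-18 Hz over 100 s, sampled at 256 Hz.
samples, inst_freqs = linear_sweep(8.0, 18.0, 100.0, 256)
```

A slow ramp keeps the drive in each part of the band long enough for the response in DARM to show up clearly in a spectrogram.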

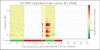

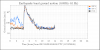

Figure 3 shows that, for equivalent noise in DARM, the RMS displacement from the shaker and from the fire pump was about the same at an accelerometer mounted under the Swiss cheese baffle by HAM2. Figure 4 shows that the shaker vibration is local to the beam tube. While the shaker signal is large on the beam tube accelerometer, it is almost lost in the background at the HAM2 and HAM3 accelerometers and the HAM2 and HAM3 L4Cs. Finally, the failure to reproduce the noise with HEPI injections, both during PEM injections at the beginning of the run and in a recent round by Sheila, further supports an off-table source of the noise.
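A band-limited RMS comparison of this kind can be sketched as follows, assuming the accelerometer data are available as a one-sided power spectral density. This is not the code behind Figure 3. The helper name `band_rms`, the 8-18 Hz integration limits (taken from the sensitive band quoted in the summary), and the flat synthetic spectra are assumptions for illustration.

```python
import math


def band_rms(freqs, psd, f_lo, f_hi):
    """RMS within [f_lo, f_hi] from a one-sided PSD, by trapezoidal integration.

    freqs: ascending frequencies in Hz.
    psd:   power spectral density in units^2/Hz (e.g. m^2/Hz for displacement).
    Only frequency bins lying fully inside the band are included.
    """
    total = 0.0
    for i in range(len(freqs) - 1):
        f0, f1 = freqs[i], freqs[i + 1]
        if f0 < f_lo or f1 > f_hi:
            continue
        total += 0.5 * (psd[i] + psd[i + 1]) * (f1 - f0)
    return math.sqrt(total)


# Synthetic flat spectra for illustration only, not measured data.
freqs = [float(i) for i in range(51)]      # 0 to 50 Hz in 1 Hz steps
shaker_psd = [4.0] * len(freqs)            # hypothetical shaker injection
fire_pump_psd = [4.0] * len(freqs)         # hypothetical fire pump period

shaker_rms = band_rms(freqs, shaker_psd, 8.0, 18.0)
fire_pump_rms = band_rms(freqs, fire_pump_psd, 8.0, 18.0)
rms_ratio = shaker_rms / fire_pump_rms     # near 1 means comparable vibration
```

A ratio near 1 for periods with equal noise in DARM is what the local-coupling argument rests on: a local injection and a global source need the same vibration level at the baffle to produce the same noise.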

While everything is consistent with coupling at the Swiss cheese baffle near HAM2, we haven’t eliminated the eye baffle near HAM3. This might be done by comparing a second accelerometer under the eye baffle to the one under the Swiss cheese baffle, but I didn’t have another spare PEM channel.

If it is the Swiss cheese baffle, it might be worth laying it down during a vent. Two concerns are the blocking of any beams that are dumped on the baffle, and the shiny reducing flange at the end of the input beam tube that would be exposed.

An immediate mitigation option is to try moving beams relative to the Swiss cheese baffle while monitoring the noise from an injection. Sheila and I started this but ran out of commissioning time, and LLO was up for most of the weekend, so I didn’t get back to it. If someone else wants to try this, either turn on the fire pump or, for even more noise in DARM, run the shaker by HAM3. The cable goes across the floor to the driver by the wall; enter 17 Hz on the signal generator and turn on the amp, which should still be set.

Shaker injections have shown the input beam tube to be sensitive for some time ( https://alog.ligo-wa.caltech.edu/aLOG/index.php?callRep=31016 ). During pre-run PEM injections, an 8 to 100 Hz broad-band shaker injection on the input beam tube showed strong coupling. However, the broad-band injection was smaller in the sensitive 10-18 Hz band than in other sub-bands, so the magnitude of the upconverted coupling from this narrow sub-band was not evident. When we have detected upconversion during PEM injections in the past, we have narrowed down the sensitive frequency band with a shaker sweep, but, for the input beam tube, we didn’t get to this until last week.

Figure 5 shows fire pump, trucks and the HVAC on input beam tube accelerometers and DARM.

Sheila, Anamaria, Robert