Ops Shift Log: 03/21/2017, Evening Shift 23:00 – 07:00 (16:00 - 00:00) Time - UTC (PT)

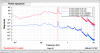

State of H1: Locked at NLN for 2.25 hours at 29.0W and 67.0 Mpc.

Intent Bit: Observing

Support: Dave B., Sheila, Jonathan

Incoming Operator: Jim

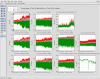

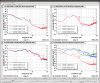

Shift Summary: Ran A2L DTT check – Pitch and Yaw are both somewhat elevated. Tumbleweed baling has finished for the day. Work will continue on the X-Arm tomorrow.

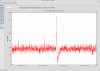

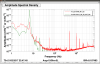

Problem #1 – Started getting OMC DCPD saturations (due to Violin Mode 508.2 ringing up), but lost lock before the mode could be damped. Contacted Sheila and tried to give her remote access.

Problem #2 – Could not give Sheila remote access due to a problem with the authentication server. Contacted Jonathan, who corrected the problem (FRS 7677, aLOG #43993).

Problem #3 – There was a problem with the Foton filter files generated between last Tuesday’s maintenance window and 13:00 today, when Jim B. fixed it. See aLOG #43992 for a description. Contacted Dave B., who rebuilt the Foton files for the SUSs and H1OMCPI. When I reloaded the filter files, the WDs for ITMX & ETMY tripped. By the time this was all squared away, the violin modes had calmed down a bit.

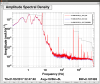

Relocked the IFO without the ALS_DIFF problem encountered during the day shift. I had to touch up BS Yaw a little bit, but no other tweaks were required.

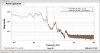

We were back to Observing in time to receive a GRB alert.

Activity Log: Time - UTC (PT)

23:00 (16:00) Take over from TJ

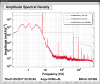

23:20 (16:20) Ran A2L check script

00:13 (17:13) Bubba – Tumbleweed baling finished for the day

01:01 (18:01) Damp PI Mode-27

02:28 (19:28) OMC DCPD Saturation

02:38 (19:38) Lockloss – Violin mode ringing up

04:36 (21:36) Relocked at NLN and back to Observing

04:45 (21:45) Damp PI Mode-27

04:59 (21:59) Damp PI Mode-27

05:06 (22:06) GRB Alert – One hour stand-down

05:10 (22:10) Damp PI Mode-27

06:06 (23:06) End stand-down

07:00 (00:00) Turn over to Jim
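
For anyone cross-checking the two time columns or tallying time out of lock, the arithmetic can be sketched as below. This is only an illustrative helper, not a site tool: the function names are hypothetical, and the UTC-7 offset is an assumption inferred from the log's own UTC (PT) pairs.

```python
from datetime import timedelta

# Assumption: PT is UTC-7 on this date, as the log's paired columns imply.
PT_OFFSET = timedelta(hours=-7)
ONE_DAY = timedelta(days=1)


def to_delta(hhmm):
    """Turn an 'HH:MM' clock string into a timedelta since midnight."""
    hours, minutes = hhmm.split(":")
    return timedelta(hours=int(hours), minutes=int(minutes))


def utc_to_pt(hhmm):
    """Convert a UTC clock time to PT, wrapping around midnight."""
    total = (to_delta(hhmm) + PT_OFFSET) % ONE_DAY
    minutes = total // timedelta(minutes=1)
    return f"{minutes // 60:02d}:{minutes % 60:02d}"


def elapsed(start, end):
    """Time between two clock readings less than 24 hours apart."""
    return (to_delta(end) - to_delta(start)) % ONE_DAY


# Entries taken from the activity log above (UTC column).
lockloss, relock = "02:38", "04:36"
grb_alert, stand_down_end = "05:06", "06:06"

print("Lockloss in PT:", utc_to_pt(lockloss))
print("Time out of lock:", elapsed(lockloss, relock))
print("Stand-down length:", elapsed(grb_alert, stand_down_end))
```

Working with clock times modulo one day keeps the sketch independent of the calendar date, which is sufficient here because the shift spans less than 24 hours.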