R. Crouch, J. Oberling, M. Robinson, T. Guidry, T. O'Hanlon

Incoming wall of text, but here's the short, short version: Over the past ~15 months, during available Tuesday maintenance windows, we have been working the FARO around the LVEA, setting alignment nests so we have the ability to drop the FARO almost anywhere in the LVEA and have it aligned to the LVEA local coordinate system. I'm happy to report that, after working through and around unavoidable delays (mostly the emergency vent for the OFI repair and the PSL frequency noise issues in the latter half of 2024, as well as several maintenance periods where IAS personnel were unavailable due to other required maintenance work), this effort is finally complete. There are now 53 bright red Spherically Mounted Retroreflector (SMR) nests around the LVEA, as well as a number around the frame of the high-bay rollup door and the nearby wall; these nests have been aligned to the LVEA local coordinate system. The naming scheme is F-CSXXX: F for FARO, CS for the building (Corner Station in this case), and XXX for the monument number.

As a brief reminder of where things were left before we started moving the FARO around the LVEA: we had found what appeared to be a good alignment to our West Bay alignment monuments (BTVE-1, PSI-1, PSI-2, PSI-6, and height marks 901, 902, and 903, the height marks being used for z-axis alignment only). We had found multiple errors in the z-axis coordinates for the 4 alignment monuments and eventually had to perform a water tube level survey of the BSC2 door flanges to re-establish our Z=0, then propagate that measurement to our alignment monuments to establish accurate z-axis coordinates w.r.t. WBSC2. Alogs from that work, for some "light" reading: 75669, 75771, 75974, 76889, and 77216.

Jumping the X-arm Beam Tube

Our main challenge with this effort was moving the FARO to the other side of the X-arm beam tube (BT). Our most readily accessible alignment monuments for the FARO are all in the West Bay, and we have no access back to these monuments once we have moved to the outside of the BT. This simple fact makes an accurate device move for the FARO very difficult (a device move is most accurate when we can shoot a mixture of nests at the new position and at the previous position(s)). We had set several nests along the wall near the X-arm BT to assist in making the move, and also used existing nests along the frame of the High-Bay rollup door, but our position errors for the device move were still >0.5 mm. TJ O'Hanlon was visiting in June 2024, and we brainstormed several methods to tighten up this positional uncertainty. Ultimately we decided to place temporary nests on the BTs themselves to aid in the initial and final device moves (temporary because as soon as we vent, the nests move with the vacuum system and don't necessarily return to the same spot once the vertex is back under vacuum). We set 4 nests along the top of the X-arm BT, 6 nests along the top of the Y-arm BT, and a single lonely nest on the bottom of the Y-arm BT; the Y-arm BT nests were there to allow us to close the circle once we moved from the input arm area back into the West Bay. At this point we were interrupted by some PSL work (PMC swap, early July), the vertex vent for the OFI repair (July - August), and then the PSL frequency noise issues (late September to December). We were finally able to restart this effort in mid-December 2024 and test our "new" method for jumping the BT. And it worked! The positional uncertainty of our first device move was <0.1 mm, so we began setting alignment nests and moving the FARO around the LVEA.
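For readers less familiar with what a device move computes: at its core it is a best fit of a rigid-body transform (rotation plus translation) between the same nests measured from the new and previous tracker positions. The sketch below shows one standard way to do that, the Kabsch/SVD least-squares fit, in Python with NumPy. It is an illustration under that assumption, not PolyWorks' actual algorithm (which also folds in instrument measurement uncertainty), and the helper names `best_fit_rigid` and `fit_residuals` are hypothetical.

```python
import numpy as np


def best_fit_rigid(source, target):
    """Least-squares rotation R and translation t such that target ~= R @ p + t.

    source, target: (N, 3) arrays holding the same nests in matching order,
    e.g. nests measured from the new position (source) and the coordinates
    of those nests established from the previous position(s) (target).
    Kabsch/SVD method; needs at least 3 non-collinear nests.
    """
    src = np.asarray(source, dtype=float)
    tgt = np.asarray(target, dtype=float)
    src_c = src.mean(axis=0)
    tgt_c = tgt.mean(axis=0)
    # Cross-covariance matrix of the two centered point sets
    H = (src - src_c).T @ (tgt - tgt_c)
    U, _, Vt = np.linalg.svd(H)
    # Force a proper rotation (det = +1) rather than a reflection
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = tgt_c - R @ src_c
    return R, t


def fit_residuals(source, target, R, t):
    """Per-nest 3D distance between transformed source and target (input units)."""
    moved = np.asarray(source, dtype=float) @ R.T + t
    return np.linalg.norm(moved - np.asarray(target, dtype=float), axis=1)
```

The per-nest residuals give a rough feel for fit quality. When the common nests are clustered in a small volume, small measurement errors turn into larger rotation errors, which is consistent with why spreading nests along the BTs helped.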

Moving Around the LVEA

We started in the High-Bay area, setting nests as needed and moving to the next position to set more nests, then moving to the next position to set more... You get the idea. Our route took us around the outside of the LVEA: starting in the High-Bay area, we proceeded down the +X side of the output arm (WHAM 5/6) and back up the -X side towards WHAM3; then down the -Y side of the input arm, around the PSL enclosure, and back up the +Y side; and finally back into the West Bay to tie back into our original nests and apply the alignment to the LVEA local coordinate system. The issue here is that the FARO really likes a large volume for accurate device moves, and this is something we definitely do not have along the outside edge of the LVEA (which is also where most IAS work takes place). We are essentially moving down a series of hallways, the hallways being the narrow walking areas between the vacuum chambers and the LVEA walls. These narrow corridors restrict the volume we can use for setting nests, so device position errors begin stacking up as we continue to move around the LVEA. In addition, the vacuum equipment blocks line of sight, so we quickly lose sight of nests from previous device positions, which compounds the error further; for most of our trip around the LVEA we only had sight of nests from the device position immediately preceding the current one, and nothing further back. This is, unfortunately, unavoidable due to the physical layout of the LVEA. In an ideal world we would be able to do all of this with an empty LVEA, but that's not possible, so we deal with what we have.

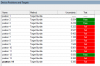

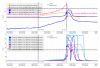

PolyWorks can estimate the device position error; the 1st attached picture shows the results after we completed our circuit around the LVEA and tied back into our initial monuments in the West Bay. Ignore the Pass/Fail under the Test column: I didn't change PolyWorks' default threshold of 0.25 mm, and we don't really have a metric for this right now. The important thing here is the value in the Uncertainty column. PolyWorks uses a Monte Carlo simulation to calculate device position uncertainty and defaults to 100 simulations per device position; the results are given as 2 standard deviations (PolyWorks default) for each position, relative to the instrument origin. This means that ~95% of the device's calculated positions from the simulations fall within the listed uncertainty. Position 1 is our starting point by WBSC4 (old H2 BS chamber), with a view of our temporary nests on the X and Y BTs, as well as the nests on the wall across the X BT and on the High-Bay rollup door frame; position 2 is our jump over the X-arm BT; positions 3 through 6 are down the output arm and back up the other side to WHAM3; positions 7 through 10 are down the input arm, around the PSL enclosure, and back up the +Y side; position 11 is the final position we used before moving back into the West Bay, near the TCSy table (with a view of the monuments we placed on the Y-arm BT); position 12 is back in the West Bay close to the biergarten; and positions 13 and 14 are near the mechanical test stand by the West wall of the LVEA (14 is a repeat of 13 to account for device drift after sitting overnight). As can be seen, the estimated positional uncertainty grows as we move down the "hallways" of the output and input arms, with the worst uncertainty at the positions furthest from our starting point (positions 7-10, around the input arm).
At this time I'm not sure we can do much better, given the constraints of the physical layout of the LVEA; we spoke with LLO at the last IAS meeting and they reported seeing similar numbers in their LVEA circuit. With the LVEA circuit complete, we moved back into the West Bay to grab the last nests there that we couldn't see from our initial position and to align to the LVEA local coordinate system.
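To illustrate why the uncertainty grows as we go down the "hallways", here is a toy Monte Carlo in the same spirit as (but much simpler than) PolyWorks' simulation: each device move adds an independent Gaussian error with a hypothetical per-axis sigma, every later position inherits all earlier errors, and for each position the sketch reports the radius containing ~95% of the simulated positions. The per-move sigmas are made-up inputs, and the model ignores loop closure (tying back into the West Bay nests), which is presumably why the last positions come back down in the real results.

```python
import numpy as np


def simulate_chain_uncertainty(step_sigma_mm, n_sims=100, seed=0):
    """Toy Monte Carlo of error stacking along a chain of device moves.

    step_sigma_mm[k] is a hypothetical per-axis 1-sigma error (mm) added by
    move k. Errors are independent Gaussians and each position carries the
    sum of all earlier errors (no loop closure is modeled).
    Returns, per position, the radius (mm) enclosing ~95% of the simulations.
    """
    rng = np.random.default_rng(seed)
    sig = np.asarray(step_sigma_mm, dtype=float)
    # Shape (n_sims, n_moves, 3): an independent error per simulation, move, axis
    steps = rng.normal(size=(n_sims, sig.size, 3)) * sig[None, :, None]
    # Position k accumulates the errors of moves 0..k
    positions = np.cumsum(steps, axis=1)
    radial = np.linalg.norm(positions, axis=2)
    return np.percentile(radial, 95, axis=0)
```

For example, `simulate_chain_uncertainty([0.03] * 10)` models ten moves of equal quality; with equal per-move errors the 95% radius grows roughly as the square root of the number of moves, so the 10th position comes out about 3 times worse than the 1st.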

Aligning to the LVEA Local Coordinate System

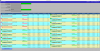

Back in the West Bay we collected the last of the red nests for FARO alignment, as well as our alignment monuments (BTVE-1, PSI-1, PSI-2, PSI-6, and height marks 901, 902, and 903). We used the 5" sphere fit rod to grab BTVE-1 and the PSI monuments. We replaced the magnetic nests we had been using for the 3 height marks with glue nests and glued them down. Using an autolevel and a scale, we measured the height difference between where each nest ended up and its height mark (since the glue sets fast, we couldn't sight the nests in directly in line with the height marks):

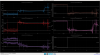

Nest z-axis position w.r.t. height mark:

- 901: +0.5 mm
- 902: -1.7 mm
- 903: -0.5 mm
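These offsets are simple to apply when assigning nominal z-coordinates to the glued nests. A minimal Python sketch, assuming the autolevel readings were taken on the same point the FARO reports for each nest and that a positive value means the nest sits above its mark; the function name is hypothetical, and the nominal height-mark elevations are inputs, not values from this alog.

```python
# Autolevel readings from this alog: nest z minus height-mark z, in mm.
# Assumed sign convention: positive means the nest sits above the mark.
NEST_OFFSET_MM = {"901": +0.5, "902": -1.7, "903": -0.5}


def nest_nominal_z(mark_z_mm, offsets_mm=NEST_OFFSET_MM):
    """Return the nominal z (mm) to assign to each glued nest.

    mark_z_mm: dict of height-mark name -> nominal mark elevation (mm) in the
    LVEA local frame. Each nest's nominal z is its mark's z plus the measured
    nest-to-mark offset.
    """
    return {mark: z + offsets_mm[mark] for mark, z in mark_z_mm.items()}
```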

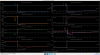

As done back in April 2024 (alog 77216), we used z-axis coordinates for the BTVE and PSI monuments that had been corrected for the depth of the punch in the monument (since the sphere fit rod measures to the point at the base of the rod, which sits in the punch, and not to the monument surface) and corrected by our water tube level survey of WBSC2. We used BTVE-1 and the 3 PSI monuments to get a rough X,Y,Z alignment (again, not using PSI-6 for z-axis alignment since we do not trust its z-axis coordinate). This rough alignment was then used to capture X and Y coordinates for the nests at the height marks, and the height marks were then used to refine the z-axis alignment (they were not used for X and Y). The results of this alignment are shown in the 2nd attached picture; for ease of comparison I've included the alignment results from April 2024, see the 3rd attached picture. This alignment is similar to the one we had back in April 2024, with a couple of differences that look large at first glance. The X and Y coordinates for height marks 901, 902, and 903 deviate by large amounts from what we had measured with our rough, BTVE/PSI-only alignment, which was not the case in our April 2024 alignment. But if you look closely, you see that the measured components for the height marks in both alignments (March 2025 and April 2024) are very similar, and this is what is important. We don't really care about the X and Y coordinates for the 3 height marks, as we aren't using them in the alignment and they were only captured using a rough alignment to begin with. The fact that the measured components match fairly well lends some confidence to this alignment. The difference between the two alignments with the most potential impact is in the z-axis deviations of the BTVE/PSI monuments, which are all roughly a factor of 2 larger in the March 2025 alignment than in the April 2024 one.
In addition, the alignment statistics on the left side of the screenshot are all better in the April 2024 alignment. The only explanation I have for this is that in April 2024 we were working in the West Bay and only the West Bay, while this time we moved the tracker all over the LVEA, thereby carrying around some positional error in the device that wasn't present in the April 2024 alignment. The only way to really test this alignment is to measure some monuments/features in the LVEA with known coordinates and see how well they match. The upcoming pre-deinstall FARO shots of the WHAM1 passive stack will be a good test of this alignment. Another good monument to check would be BTVE-5, over by the X-arm termination slab (by the cryopump/OpLev area).
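Using the height marks for z only, after a rough BTVE/PSI alignment, can be pictured as a small one-parameter fit. The sketch below assumes the simplest case, a pure z translation, where the least-squares answer is just the mean of (nominal - measured) over the height-mark nests. PolyWorks' constrained best fit may also allow small tilts, so treat this as an illustration only; `refine_z_offset` is a hypothetical name.

```python
import numpy as np


def refine_z_offset(measured_z_mm, nominal_z_mm):
    """Least-squares z-only translation from the height-mark nests.

    measured_z_mm: z of each nest in the rough (BTVE/PSI) alignment, mm.
    nominal_z_mm:  nominal z of the same nests, mm.
    For a pure translation the least-squares shift is the mean difference.
    Returns (dz, residuals), with residual = nominal - (measured + dz).
    """
    m = np.asarray(measured_z_mm, dtype=float)
    n = np.asarray(nominal_z_mm, dtype=float)
    dz = np.mean(n - m)
    return dz, n - (m + dz)
```

The residuals left after the shift are a rough, single-axis analogue of the per-monument deviations PolyWorks reports for the z-axis.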

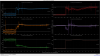

As another test, since we haven't exactly trusted the repeatability of the sphere fit rod in the past, we tried using points for PSI-1 and PSI-2 instead of the sphere fit rod and applying the alignment using those; we still had to use the sphere fit rod on BTVE-1 due to its dome shape, and on PSI-6 because it sits inside an electronics rack in the biergarten (the bottom rail of the rack blocks direct line of sight to the monument, but the 5" sphere fit rod clears the top of it). The results of this are shown in the 4th attached picture. Right away it's clear this alignment is not as good as the one shown in the 2nd picture: the statistics are all worse, and the match between measured coordinates is worse as well. This one gets discarded, and we move ahead with the previous alignment using the sphere fit rod for the BTVE/PSI monuments. Next we tested importing our move device targets into a blank PolyWorks file and aligning to them.

Global Target Test

PolyWorks allows you to import global targets from a text file. In PolyWorks, a global target is one whose position is precisely known and does not change. Now that we've applied our alignment to our list of device position targets, we have [X,Y,Z] coordinates in the LVEA local coordinate system for all of them. These coordinates can be exported into an Excel spreadsheet and formatted into a text file for import into a future PolyWorks project. We did a test of this, using a duplicate of our working PolyWorks project to keep from accidentally modifying our working project file. The text file has 4 columns: X position, Y position, Z position, monument name. The file cannot have headers (PolyWorks gets mad if there are headers), and the units must be consistent (we use mm). When importing the text file you have to specify the units used in the Import Global Target window (again, we use mm). Once the targets have been imported into the new PolyWorks project, you set the tracker somewhere in the workspace with a good view of global target nests and measure them. The tracker then automatically aligns to the coordinates of the global targets, thereby aligning itself to the LVEA local coordinate system. We did this using a blank project, and the results are shown in the 5th attached picture. For the height marks we used the same nominal coordinates as in the alignment (2nd picture again). This is why they look so far off at first glance, but if you compare the measured coordinates they match really well. For the PSI monuments we used different tooling to take those measurements: instead of the sphere fit rod we used our Hubbs Center Punch Nest (CPN). This nest has a 2" vertical offset (the center of the SMR sits 2" above the bottom of the nest) and a built-in center punch for either making punch marks in monuments or accurately setting the nest in an existing punch mark.
Because of this we had to remove the z-axis punch-depth correction we needed for the sphere fit rod, as the Hubbs CPN measures to the monument surface, not the bottom of the punch. These also look really good, with the exception of the measured z-axis coordinate for PSI-2; at this time I don't really have an explanation for this discrepancy.
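Formatting the exported coordinates into the import file can also be scripted rather than done by hand in Excel. The sketch below writes one line per target (X, Y, Z, name, all in mm) with no header row. The tab delimiter and 4-decimal formatting are assumptions, not a documented PolyWorks requirement, so check the result in the Import Global Target window; `write_global_targets` is a hypothetical helper.

```python
from pathlib import Path


def write_global_targets(targets, path):
    """Write a headerless global-target file: X, Y, Z, name per line (mm).

    targets: iterable of (x_mm, y_mm, z_mm, name) in the LVEA local frame.
    Assumption: tab-delimited columns; names must not contain whitespace so
    the 4th column stays unambiguous. Returns the number of targets written.
    """
    lines = []
    for x, y, z, name in targets:
        if any(ch.isspace() for ch in name):
            raise ValueError(f"target name contains whitespace: {name!r}")
        lines.append(f"{x:.4f}\t{y:.4f}\t{z:.4f}\t{name}")
    Path(path).write_text("\n".join(lines) + "\n")
    return len(lines)
```

Rejecting names with spaces up front is a cheap guard against a misparsed 4th column; remember to select mm as the import unit as well.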

We also used these test coordinates to take some preliminary shots of the 2 accessible -X WHAM1 support tube ends; details of that in a future alog (this one is long enough already).

While pulling screenshots off of the FARO laptop for this alog, I also exported the device position targets from our main working file, as these are the global targets we are going to use going forward (FARO_CS_LVEA_Global_Targets_v1.xlsx). The only difference between the main working file and the test file used to generate the global target list for the test described above is a new device position in the main file, position 14. Position 14 was necessary in the main working file because the FARO sat in the same spot for a week between maintenance windows (March 18 to March 25) and had therefore drifted in that time (the FARO and tripod stand are heavy and slowly sink into the vinyl floor of the LVEA). The fix for this is to do a Move Device action without actually moving the device, which accounts for any drift from the previous position. The starting, unaligned coordinates of the red nests (which we turn into global targets) are the same, and the alignment results are the same; again, the only difference is that new position 14 in the main working file. While removing the BT nests from the target list (since we are not going to use these for placing the FARO), I noticed that the coordinates looked a little different than I remembered. So I threw together a quick and dirty comparison spreadsheet to calculate the differences (Global_Target_Coordinate_Differences.xlsx). The first 4 columns of data are from the main working file, the next 4 columns of data are from the test file, and the final 3 columns are the difference (test - main). Sure enough, the coordinates from the main file differ from the test file. I set conditional formatting to turn red for any differences outside of +/-0.1 mm. The largest differences are all in z-axis coordinates, and the largest of these are all for nests placed along the input arm of the IFO (WHAM1/2/3), where our device position uncertainties are largest.
These are small differences, the largest being on the order of 0.25 mm, spread out over 10s of meters of LVEA space, so I don't think this is a big issue, but interesting to note nonetheless.
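The comparison spreadsheet logic (difference = test - main, flag anything outside +/-0.1 mm) is easy to reproduce in a few lines of Python for future target list revisions. This is a sketch assuming each file has already been read into a dict mapping nest name to (X, Y, Z) in mm; the function name is hypothetical.

```python
import numpy as np


def coordinate_differences(main_coords, test_coords, tol_mm=0.1):
    """Compare target coordinates from two PolyWorks exports.

    main_coords, test_coords: dicts of nest name -> (x, y, z) in mm.
    Returns (diffs, flagged): diffs maps each nest present in both files to
    (dx, dy, dz) = test - main; flagged lists (sorted) the nests with any
    component outside +/-tol_mm, mirroring the red conditional formatting.
    """
    diffs = {}
    flagged = []
    for name in sorted(main_coords.keys() & test_coords.keys()):
        d = np.asarray(test_coords[name], dtype=float) - np.asarray(main_coords[name], dtype=float)
        diffs[name] = tuple(d)
        if np.any(np.abs(d) > tol_mm):
            flagged.append(name)
    return diffs, flagged
```

Only nests present in both files are compared, so targets removed from one list (such as the BT nests) simply drop out.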

And that's it! I'll write another alog detailing the preliminary shots of the WHAM1 -X support tube ends. The FARO is ready to go for the upcoming pre-deinstall shots of the WHAM1 passive stack (currently slated for Monday on the Trello). This finally closes LHO WP 11757.