I revisited my investigation into the IM alignment jumps which I originally posted in alog 27646.

In that alog I found evidence that, while the HAM2 ISI and the IMs were tripped (IMs undamped and rung up), the IM OSEM oscillations damped at different rates, suggesting mechanical interference.

That test data was from 19 May 2016 and covered only one ISI/IM trip, so I've looked at a number of other ISI/IM trips and, with the exception of IM4, find exactly the same results.

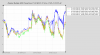

Attachment 1 is the original data and four additional ISI/IM trip events

- green boxes are the max. OSEM amplitude for each optic, and for all IMs these are consistent
- gold highlighted boxes show the OSEM with the lowest amplitude compared to the max. OSEM amplitude for each optic, and for IM1, IM2, and IM3 these are consistent
- for IM4 I've highlighted OSEM LL, which was at 93% of the max. OSEM amplitude but has fallen to less than 90%
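The comparison behind the gold boxes can be sketched as follows: each OSEM's ring-down amplitude is expressed as a fraction of the largest OSEM amplitude on the same optic. This is only a minimal illustration. The function names, the 0.90 cutoff used as a flag, the OSEM labels other than LL, and the amplitude numbers are all assumptions, and the numbers are placeholders, not values from Attachment 1.

```python
def fraction_of_max(amplitudes):
    """Return each OSEM amplitude as a fraction of the largest one on the optic."""
    peak = max(amplitudes.values())
    return {name: amp / peak for name, amp in amplitudes.items()}


def below_threshold(amplitudes, threshold=0.90):
    """List the OSEMs whose amplitude is below `threshold` of the max (assumed cutoff)."""
    fractions = fraction_of_max(amplitudes)
    return [name for name, frac in fractions.items() if frac < threshold]


# Placeholder amplitudes in arbitrary units, NOT measured values.
example = {"UL": 100.0, "LL": 88.0, "UR": 97.0, "LR": 95.0}
print(below_threshold(example))
```

With real data, one such dictionary per optic and per trip event would show whether the same OSEM is the low one each time.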

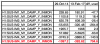

Attachment 2 is the alignment change of IM4 from May 2016 to Nov. 2016, the time frame in which OSEM LL dropped below 90% of the max. OSEM amplitude

- IM4 yaw changed by 217urad

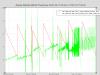

Attachment 3 is the alignment change of IM4 from Oct. 2014 to Feb. 2017

- IM4 yaw changed by a total of 705urad

Attachment 4 shows the angular change needed to move an IM by the total EQ stop gap, and how that compares to the change of IM4 yaw

- an angular change of 800urad is needed to move the optic by 0.2mm, the total EQ stop gap (0.1mm front + 0.1mm back)
- IM4 yaw changed by 705urad, which means it moved 0.18mm
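The 0.18mm figure follows from scaling the yaw change linearly against the 800urad / 0.2mm correspondence quoted above. A minimal sketch of that arithmetic is below. The constant and function names are my own, and the linear (small-angle) scaling is an assumption.

```python
# Values quoted in this entry: 800 urad of yaw corresponds to 0.2 mm of travel,
# the total EQ stop gap (0.1 mm front + 0.1 mm back).
FULL_GAP_MM = 0.2
FULL_GAP_ANGLE_URAD = 800.0


def yaw_to_displacement_mm(yaw_urad):
    """Scale a yaw change linearly to displacement (assumed small-angle behaviour)."""
    return FULL_GAP_MM * yaw_urad / FULL_GAP_ANGLE_URAD


im4_mm = yaw_to_displacement_mm(705.0)
gap_percent = 100.0 * im4_mm / FULL_GAP_MM
print(f"IM4: {im4_mm:.2f} mm, {gap_percent:.0f}% of the total EQ stop gap")
# prints "IM4: 0.18 mm, 88% of the total EQ stop gap"
```

The same function can be applied to the other IM yaw changes to see how much of the gap each one has used.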

Attachment 5 shows the total alignment changes for all IMs

- IM4 yaw is the largest at 705urad
- IM2 yaw is second, at 265urad
- all other IM alignment changes are 140urad or smaller

This data supports my original finding and, in the case of IM4, confirms that alignment changes matter. It's my belief that IM4 OSEM LL is now touching an EQ stop.