Tom Dent, Miriam Cabero

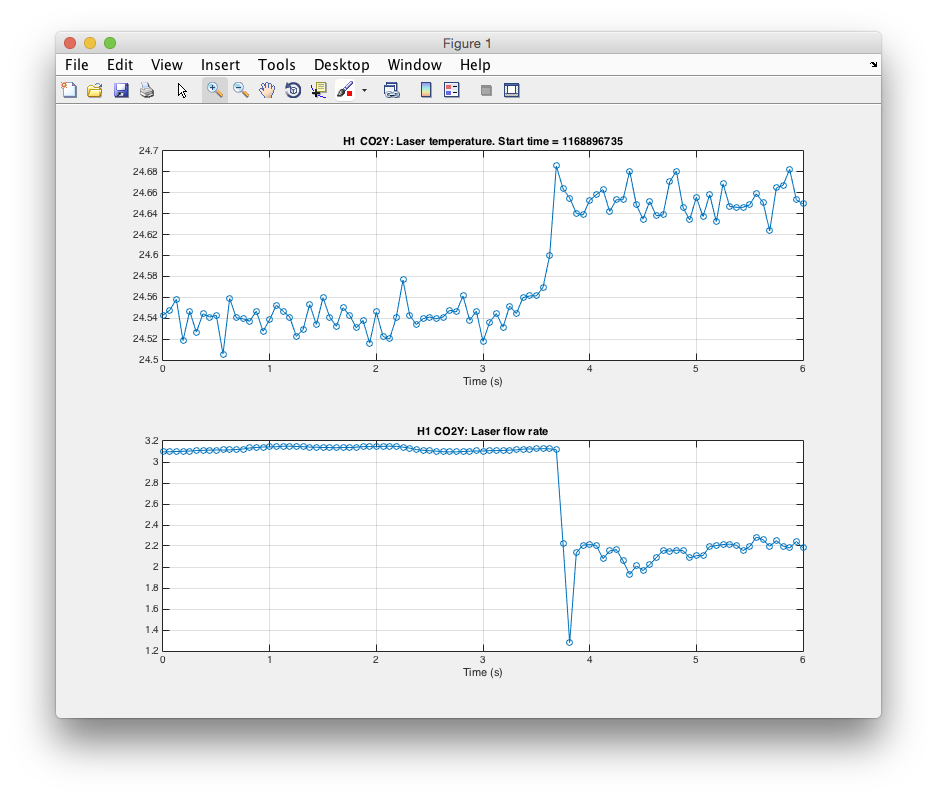

We have identified a sub-set of blip glitches that might originate from PSL glitches. A glitch with the same morphology as a blip glitch shows up in the PSL-ISS_PDA_REL_OUT_DQ channel at the same time as a blip glitch is seen in the GDS-CALIB_STRAIN channel.

We have started identifying times of these glitches using omicron triggers from the PSL-ISS_PDA_REL_OUT_DQ channel with 30 < SNR < 150 and central frequencies between ~90 Hz and a few hundred Hz. A preliminary list of these times (ongoing; so far covering only Nov 30 - Dec 6) can be found in the file

https://www.atlas.aei.uni-hannover.de/~miriam.cabero/LSC/blips/O2_PSLblips.txt

or, with omega scans of both channels (and with a few quieter glitches), in the wiki page

Only two of those times have full omega scans for now:

The whitened time-series of the PSL channel looks like a typical loud blip glitch, which could help to find times of this sub-set of blip glitches with methods more efficient than the omicron triggers:

The CBC wiki page has been moved to https://www.lsc-group.phys.uwm.edu/ligovirgo/cbcnote/PyCBC/O2SearchSchedule/O2Analysis2LoudTriggers/PSLblips
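The trigger selection described above (30 < SNR < 150, central frequency from ~90 Hz to a few hundred Hz) can be sketched as a simple filter. This is only an illustration, not the procedure actually used: the `Trigger` class, the function name, and the 500 Hz upper bound (standing in for "a few hundred Hz") are assumptions, and the GPS times are made-up toy values. As noted in the discussion, such cuts also pick up non-blip glitches, so the surviving times still need to be checked by eye.

```python
from dataclasses import dataclass


@dataclass
class Trigger:
    gps: float        # trigger time (GPS seconds)
    snr: float        # signal-to-noise ratio
    frequency: float  # central frequency in Hz


def select_psl_candidates(triggers, snr_min=30.0, snr_max=150.0,
                          f_min=90.0, f_max=500.0):
    """Keep triggers with snr_min < SNR < snr_max and f_min <= f <= f_max.

    f_max=500 Hz is an assumed stand-in for "a few hundred Hz".
    """
    return [t for t in triggers
            if snr_min < t.snr < snr_max and f_min <= t.frequency <= f_max]


# Toy triggers (not real data), one per outcome of the cuts.
triggers = [
    Trigger(gps=100.0, snr=45.0, frequency=250.0),   # passes both cuts
    Trigger(gps=200.0, snr=12.0, frequency=300.0),   # SNR too low
    Trigger(gps=300.0, snr=80.0, frequency=40.0),    # frequency too low
    Trigger(gps=400.0, snr=400.0, frequency=200.0),  # SNR too high
]
candidates = select_psl_candidates(triggers)
print([t.gps for t in candidates])
```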

Hi Marco,

the triggers in your 'List of triggers common to PSL Type 1 and GDS Type 4' (15 times in two groups) all fall during the known times of telephone audio disturbance on Dec 4 - see https://alog.ligo-wa.caltech.edu/aLOG/index.php?callRep=32503 and https://www.lsc-group.phys.uwm.edu/ligovirgo/cbcnote/PyCBC/O2SearchSchedule/O2Analysis2LoudTriggers/PSLGlitches

I think these don't require looking into any further; the other classes may tell us more.

The GDS glitches that look like blips in the time series seem to be type 2, 7, and 8. You did indeed find that the group of common glitches PSL - GDS type 2 is a blip glitch. However, the PSL glitches in the groups with GDS type 7 and 8 do not look like blips in the omega scan. The subset we identified clearly shows blip glitch morphology in the omega scan for the PSL channel, so it is not surprising that those two groups turned out not to be blips in GDS.

It is surprising, though, that you found only one time with a coincident blip in both channels, when we identified several more times in just one week of data from the omicron triggers. What was the "relatively high threshold" you used?
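For reference, a time-coincidence test between two trigger lists (for example PSL and GDS-CALIB_STRAIN) can be sketched as below. This is a minimal illustration, not the method used in either analysis: the function name and the 0.1 s window are assumptions, and the times are toy values.

```python
from bisect import bisect_left


def coincident_times(psl_times, gds_times, window=0.1):
    """Return (psl, gds) pairs whose times differ by at most `window` seconds.

    window=0.1 s is an assumed value chosen only for illustration.
    """
    gds_sorted = sorted(gds_times)
    pairs = []
    for t in sorted(psl_times):
        # First GDS time that is not earlier than t - window.
        i = bisect_left(gds_sorted, t - window)
        while i < len(gds_sorted) and gds_sorted[i] <= t + window:
            pairs.append((t, gds_sorted[i]))
            i += 1
    return pairs


# Toy times in seconds (not real GPS times).
psl = [10.00, 55.20, 90.70]
gds = [10.03, 40.00, 90.95]
print(coincident_times(psl, gds))
```

The number of coincidences found depends strongly on the window and on the SNR thresholds applied to each list, which is why the threshold matters when comparing the two results.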

Hi,

thanks Marco for looking into this. We already expected that it was a small sub-set of blip glitches, because we only found very few of them and we knew the total number of blip glitches was much higher. However, I believe that not all blip glitches have the same origin and that it is important to identify sub-sets, even if small, to possibly fix whatever could be fixed.

I have extended the wiki page https://www.lsc-group.phys.uwm.edu/ligovirgo/cbcnote/PyCBC/O2SearchSchedule/O2Analysis2LoudTriggers/PSLblips and the list of times https://www.atlas.aei.uni-hannover.de/~miriam.cabero/LSC/blips/O2_PSLblips.txt up to yesterday. It is interesting to see that I did not identify any PSL blips in, e.g., Jan 20 to Jan 30, but that they come back more often after Feb 9. Unfortunately, it is not easy to identify the PSL blips automatically: the criteria I used for the omicron triggers (SNR > 30, central frequency ~few hundred Hz) do not yield only blips but also things like https://ldvw.ligo.caltech.edu/ldvw/view?act=getImg&imgId=156436, which also affects CALIB_STRAIN but not in the form of blip glitches.

None of the times I added up to December appear in your list of coincident glitches, but that could be because their SNR in PSL is not very high and they only leave a very small imprint in CALIB_STRAIN compared with the ones from November. In January and February there are several louder ones with bigger effect on CALIB_STRAIN though.

The most recent iteration of PSL-ISS flag generation showed three relatively loud glitch times:

https://ldas-jobs.ligo-wa.caltech.edu/~detchar/hveto/day/20170210/latest/scans/1170732596.35/

https://ldas-jobs.ligo-wa.caltech.edu/~detchar/hveto/day/20170210/latest/scans/1170745979.41/

https://ldas-jobs.ligo-wa.caltech.edu/~detchar/hveto/day/20170212/latest/scans/1170950466.83/

The first two are both on Feb 10. In fact, a PSL-ISS channel was picked by Hveto on that day (https://ldas-jobs.ligo-wa.caltech.edu/~detchar/hveto/day/20170210/latest/#hveto-round-8), though not with very high significance.

PSL not yet glitch-free?

Indeed PSL is not yet glitch free, as I already pointed out in my comment from last week.

Imene Belahcene, Florent Robinet

At LHO, a simple command line works well at printing PSL blip glitches:

GPS times must be adjusted to your needs.

This command line returns a few GPS times not contained in Miriam's blip list: we must check that they are actual blips.

The PSL has different types of glitches that match those requirements. When I look at the Omicron triggers, I do indeed check that they are blip glitches before adding the times to my list. Therefore it is perfectly consistent that you find GPS times with those characteristics that are not in my list. However, feel free to check again if you want/have time. Of course I am not error-free :)

I believe the command I posted above is an almost-perfect way to retrieve a pure sample of PSL blip glitches. The key is to only print low-Q Omicron triggers.

For example, GPS=1165434378.2129 is a PSL blip glitch and it is not in Miriam's list.

There is nothing special about what you call a blip glitch: any broadband and short-duration (hence low-Q) glitch will produce the rain-drop shape in a time-frequency map. This is due to the intrinsic tiling structure of Omicron/Omega.
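The low-Q idea can be illustrated with a short filter over a trigger table. This is a hedged sketch and not the command line referred to above: the dictionary keys, the function name, and the `q_max=10` threshold are all hypothetical, and the rows are toy values rather than real Omicron output.

```python
def low_q_triggers(triggers, q_max=10.0):
    """Keep only triggers whose Q is at most q_max.

    Low Q corresponds to short-duration, broadband glitches.
    q_max=10 is a hypothetical threshold chosen for illustration.
    """
    return [t for t in triggers if t["q"] <= q_max]


# Toy trigger rows (not real data); "gps", "snr", "q" are assumed keys.
triggers = [
    {"gps": 100.0, "snr": 60.0, "q": 5.0},   # short, broadband: kept
    {"gps": 200.0, "snr": 75.0, "q": 45.0},  # long-lived, narrowband: dropped
    {"gps": 300.0, "snr": 40.0, "q": 8.0},   # kept
]
print([t["gps"] for t in low_q_triggers(triggers)])
```

A Q cut like this can be combined with the SNR and frequency cuts discussed earlier in the thread; whether the result is a pure blip sample still has to be verified against the omega scans.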

Next time I update the list (probably some time this week) I will check the GPS times given by the command line you suggest. It would be nice if it does indeed work perfectly at finding only these glitches; then we'd have an automated PSL blip finder!