Kevin, Sheila

Over the last few days we've run ADF sweeps several times. Here's a record of what happened, along with the file names.

The files are in /ligo/gitcommon/squeezing/sqzutils/data

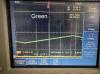

Nov 22 08:31 HF_10kHz_11_2025.h5: IFO had been powered up for 11:00; the sweep started at about 14:50 UTC Nov 22. NLG was 24.3 (the measurement is in 88224). Something seemed to go wrong towards the end of the sweep; we aren't sure what.

Nov 22 12:38 HF_10kHz_11_2025_2.h5: IFO had been powered up for 15:10. Same lock as the previous measurement, same NLG measurement; the sweep started at about 19:00 UTC.

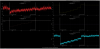

Nov 25 00:36 HF_10kHz_11_2025_b4_CO2_step.h5: IFO had been powered up for 4:20. In this sweep I didn't turn off the ADF-based SQZ angle servo, so the demod phase was moved around during the sweep, which makes this data not useful.

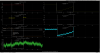

Nov 26 01:56 HF_10kHz_11_2025_b4_CO2_step2.h5: last night's first scan, started only 38 minutes after power up, at about 8:18 UTC Nov 26th. The amplified seed was 7.8e-3. The unamplified seed measurement didn't run correctly with the script, but the waveplate hasn't moved since the previous measurements of that level at 2.9e-4 (88223), giving an NLG of 26.9. (Edit: I redid the unamplified seed measurement and it looks like 3.2e-4 now, making the NLG 24.4 for these last two measurements. That is consistent with the values from the last week, 24.3 or 24.4.)
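For reference, the two NLG values quoted above follow from taking the ratio of the amplified to the unamplified seed level. Here is a minimal sketch of that arithmetic; the function name `nonlinear_gain` is just illustrative and is not part of sqzutils or the measurement script.

```python
def nonlinear_gain(amplified_seed, unamplified_seed):
    """Return the NLG as the ratio of amplified to unamplified seed level.

    Hypothetical helper for illustration only; assumes both levels are
    in the same units, as in the numbers quoted in this entry.
    """
    if unamplified_seed <= 0:
        raise ValueError("unamplified seed level must be positive")
    return amplified_seed / unamplified_seed


amplified = 7.8e-3  # amplified seed level from this entry

# Earlier unamplified seed level (88223) versus the redone measurement.
nlg_old = nonlinear_gain(amplified, 2.9e-4)
nlg_new = nonlinear_gain(amplified, 3.2e-4)

print(f"NLG with earlier unamplified seed (2.9e-4): {nlg_old:.1f}")
print(f"NLG with redone unamplified seed (3.2e-4):  {nlg_new:.1f}")
```

The roughly 10% change in the unamplified seed level accounts for the whole difference between 26.9 and 24.4.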

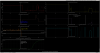

Nov 26 08:15 HF_10kHz_11_2025_after_CO2_1hour.h5: this morning's sweep. IFO had been powered up for 7 hours, and the CO2s had been stepped down from 1.7 W each to 0.9 W each for 1.5 hours when the sweep started at about 14:37 UTC Nov 26th. NLG should be the same as for the scan above, 24.4 (corrected from 26.9).