TITLE: 12/06 Eve Shift: 00:00-08:00 UTC (16:00-00:00 PST), all times posted in UTC

STATE of H1: Observing at 72.9403Mpc

INCOMING OPERATOR: TJ

SHIFT SUMMARY: Other than the lockloss due to PSL trip, not a bad evening for locking.

LOG:

See previous aLogs for the play-by-play tonight.

For DaveB, there was a /ligo server connection interrupt at 1:32 UTC.

For DetChar, Keita wanted to acknowledge/remind that Observing lock stretches for today have an ion pump running at BSC8.

PSL dust monitor is still alarming periodically.

7:57 GRB alert.

But don't worry, we are back to Observing at 10:36 UTC.

I haven't seen anything of note for the lockloss. I checked the usual templates; screenshots of some of them are attached.

This seems like another example of the SR3 problem. (alog 32220 FRS 6852)

If you want to check for this kind of lockloss, zoom the time axis right around the lockloss time to see if the SR3 sensors change fractions of a second before the lockloss.
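The check described above can be roughed out in code. This is only a minimal sketch on synthetic data, not the procedure actually used here: the function name, the 0.5 s window, the 5 s baseline, and the 5-sigma threshold are all assumptions chosen for illustration, and fetching real SR3 OSEM data is not shown.

```python
from statistics import mean, pstdev


def first_excursion_before_lockloss(times, values, lockloss_time,
                                    window=0.5, baseline=5.0, n_sigma=5.0):
    """Return the time of the first sample in the last `window` seconds
    before lockloss that deviates from the preceding quiet baseline by more
    than n_sigma standard deviations, or None if there is no such sample.

    All defaults are illustrative assumptions, not tuned values.
    """
    start = lockloss_time - window
    # Baseline: the `baseline` seconds immediately before the window.
    base = [v for t, v in zip(times, values) if start - baseline <= t < start]
    if len(base) < 2:
        raise ValueError("not enough baseline samples")
    mu = mean(base)
    threshold = n_sigma * pstdev(base)
    # Scan only the fraction of a second right before the lockloss.
    for t, v in zip(times, values):
        if start <= t < lockloss_time and abs(v - mu) > threshold:
            return t
    return None


# Synthetic example: 16 Hz samples, lockloss at t = 10 s.
rate = 16
lockloss_time = 10.0
times = [i / rate for i in range(160)]
quiet = [100 + (0.1 if i % 2 else -0.1) for i in range(160)]
# Same signal, but with a jump 0.25 s before the lockloss.
glitchy = [105.0 if t >= 9.75 else v for t, v in zip(times, quiet)]

print(first_excursion_before_lockloss(times, glitchy, lockloss_time))
print(first_excursion_before_lockloss(times, quiet, lockloss_time))
```

A returned time a fraction of a second before the lockloss would flag the sensor for a closer look by eye; None means nothing crossed the threshold in that window.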

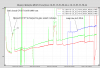

See my note in alog 32220, namely that Sheila and I looked again and we see that the glitch is on the T1 and LF coils, which share a line of electronics. The second lockloss TJ started with in this log (12/06) is somewhat inconclusively linked to SR3 - no "glitches" like the first one on 12/05, but instead all 6 top mass SR3 OSEMs show motion before lockloss.

Sheila, Betsy

Attached is a ~5 day trend of the SR3 top stage OSEMs. T1 and LF do show an overall step in the min/max of their signals, which happened at the time of the lockloss that showed the SR3 glitch (12/05 16:02 UTC)...
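For anyone wanting to quantify a step like this rather than read it off the plot, here is a minimal sketch. It assumes the trend is available as two equal-length lists of per-interval minima and maxima and that the index of the suspected event is known. The function name and the numbers are made up for illustration and are not taken from the attached trend.

```python
from statistics import median


def minmax_step(mins, maxs, split):
    """Return (change in median min, change in median max) across `split`.

    `split` is the index of the first trend sample after the suspected event.
    """
    if len(mins) != len(maxs) or not 0 < split < len(mins):
        raise ValueError("need equal-length trends and an interior split index")
    d_min = median(mins[split:]) - median(mins[:split])
    d_max = median(maxs[split:]) - median(maxs[:split])
    return d_min, d_max


# Synthetic min/max trend (arbitrary units) with a step at index 5.
mins = [10, 11, 10, 11, 10, 14, 15, 14, 15, 14]
maxs = [20, 21, 20, 21, 20, 26, 27, 26, 27, 26]

print(minmax_step(mins, maxs, 5))
```

Medians are used so that a single outlier sample on either side does not dominate the comparison.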