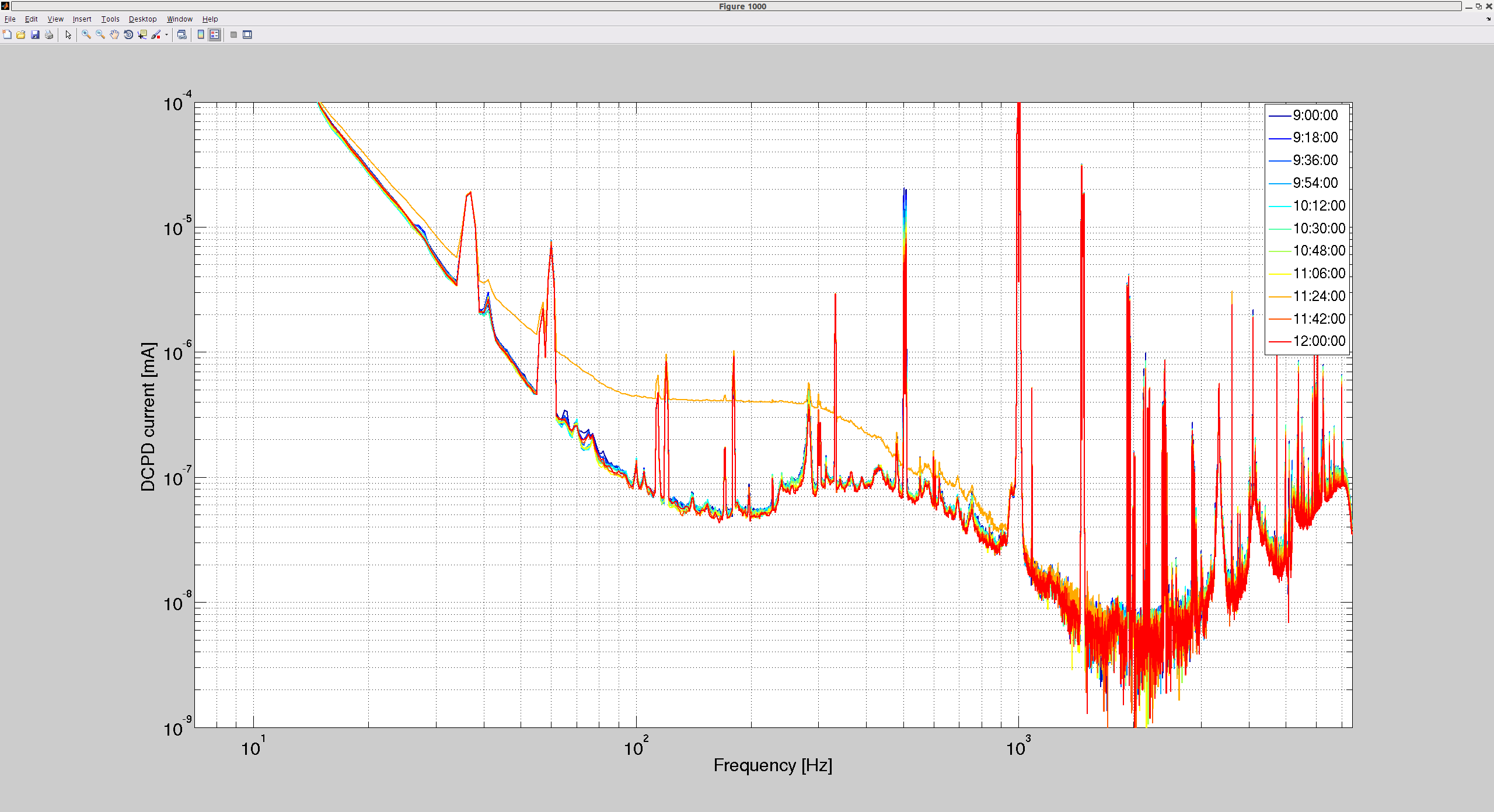

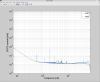

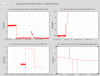

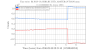

This is a plot of the jitter measured by the IMC WFS DC PIT/YAW sensors during last night's lock. The 280 Hz periscope peak reaches about 1x10^-4/√Hz in relative pointing noise, or about 3x10^-4 rms. The relative pointing noise out of the HPO is about 2x10^-5/√Hz at 300 Hz. After attenuation through the PMC this would correspond to a level below 10^-6/√Hz. The jitter peaks show up in DARM if they are high enough; this is clearly visible in the coherence spectra.
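As a rough check of the numbers above, the following sketch propagates the HPO jitter through the PMC and compares it with the periscope peak. The 1.6% PMC jitter transmission is the figure used for the DBB propagation further down in this entry; applying it here as a flat scale factor is an assumption, and the variable names are made up for illustration.

```python
# Values quoted in the text (relative pointing noise, in 1/sqrt(Hz)).
hpo_jitter_300hz = 2e-5       # out of the HPO at 300 Hz
periscope_peak_280hz = 1e-4   # IMC WFS DC readout, 280 Hz periscope peak

# Assumption: the PMC passes 1.6% of the input jitter, independent of frequency.
pmc_jitter_transmission = 0.016

# HPO jitter level expected after the PMC.
hpo_after_pmc = hpo_jitter_300hz * pmc_jitter_transmission

# How far the measured periscope peak sits above the propagated HPO jitter.
ratio = periscope_peak_280hz / hpo_after_pmc

print(f"HPO jitter after PMC: {hpo_after_pmc:.2e} /sqrt(Hz)")
print(f"Periscope peak is a factor of {ratio:.1f} above it")
```

With these inputs the propagated HPO jitter lands below the 10^-6/√Hz level quoted above, more than two orders of magnitude under the periscope peak as read from the uncorrected WFS calibration.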

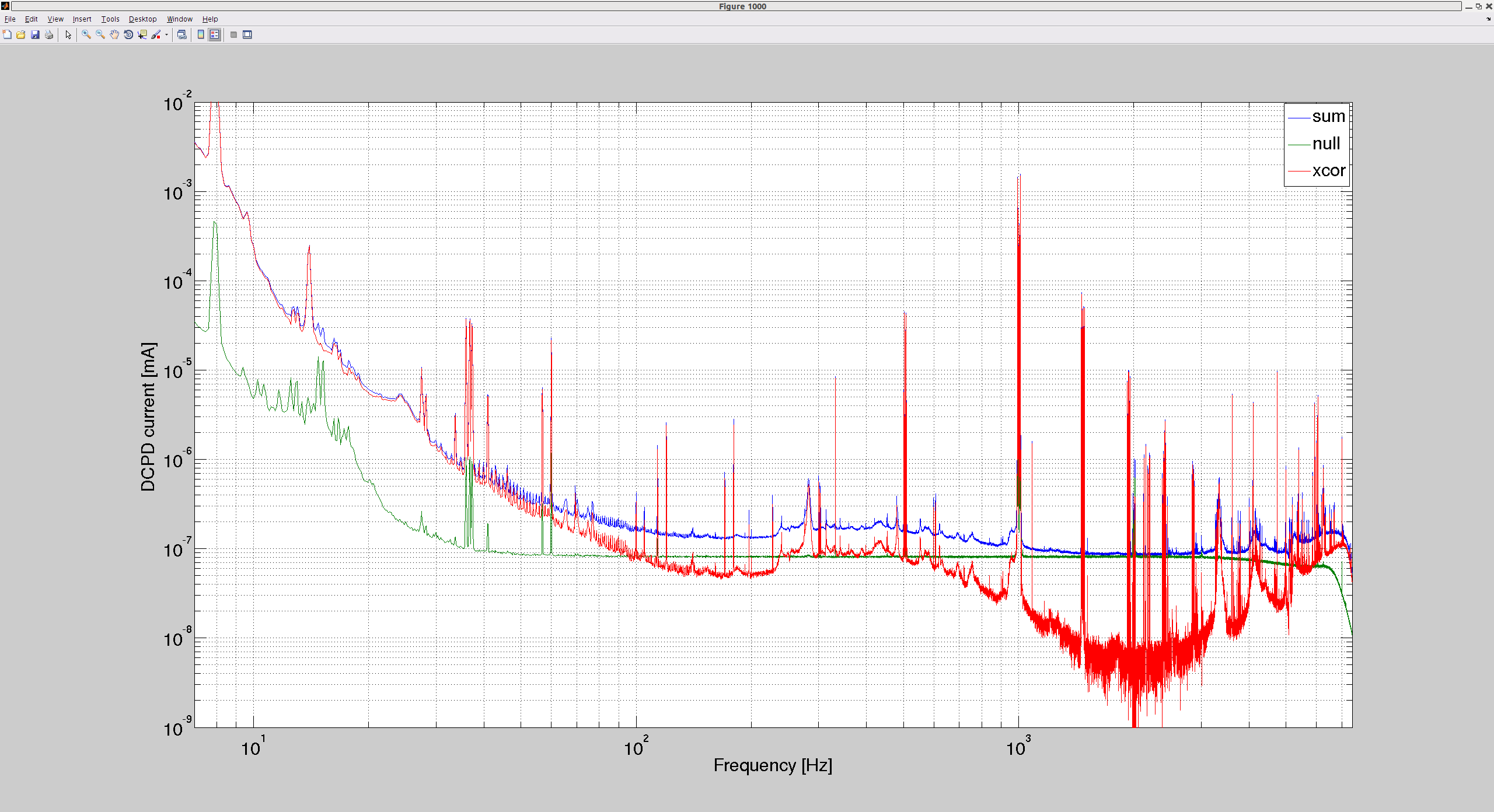

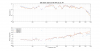

The ISS second loop control signal is an indication of the intensity noise after the mode cleaner with only the first loop on. The flat noise level above 200 Hz is around 3x10^-6/√Hz in RIN, with peaks around 240 Hz, 430 Hz, 580 Hz and 700 Hz. Comparing this to the free-running noise in alog 29778 shows this RIN level at 10^-5/√Hz. We can also compare this with the DBB measurements, such as in alog 29754: the intensity noise after the HPO shows a 1/f behaviour and no peaks. Looking at the numbers, it explains the noise below 300 Hz. It looks like a flat noise at the 10^-5 level, including the above peaks, gets added to the free-running intensity noise after the PMC. The peaks in the control signal of the second loop ISS line up with peaks visible in the pointing noise, but neither the numbers nor the spectral shape match. These peaks have coherence with DARM.

Checking the calibration of the WFS DC readouts, I noticed a calibration error of a factor of 0.065, so all angles measured by the WFSs should be scaled by this number. This still makes the jitter after the PMC dominant, but one might expect to see some of the HPO jitter peaks show through in places where the downstream jitter has a valley. In any case, we should repeat the PSL jitter measurement with the IMC unlocked.
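To see what the correction does to the quoted numbers, here is a short sketch that applies the 0.065 factor to the periscope peak and rms values from the first spectrum and compares the result with the HPO jitter after the PMC. The 1.6% PMC transmission is taken from the DBB propagation elsewhere in this entry, and comparing the 280 Hz peak with the 300 Hz HPO level is only approximate; variable names are illustrative.

```python
# Calibration correction for the WFS DC readouts (from the text).
wfs_cal_factor = 0.065

# Values read off the first posted spectrum, before the correction.
peak_280hz_uncorrected = 1e-4   # 1/sqrt(Hz), relative pointing noise
rms_uncorrected = 3e-4          # rms, relative pointing noise

# Corrected values: all WFS angles scale by the same factor.
peak_280hz_corrected = peak_280hz_uncorrected * wfs_cal_factor
rms_corrected = rms_uncorrected * wfs_cal_factor

# HPO jitter at 300 Hz propagated through the PMC.
# Assumption: flat 1.6% jitter transmission through the PMC.
hpo_after_pmc = 2e-5 * 0.016

# Remaining margin between downstream jitter and propagated HPO jitter.
margin = peak_280hz_corrected / hpo_after_pmc

print(f"Corrected 280 Hz peak: {peak_280hz_corrected:.2e} /sqrt(Hz)")
print(f"Corrected rms:         {rms_corrected:.2e}")
print(f"Margin over HPO jitter after PMC: {margin:.1f}")
```

Under these assumptions the corrected periscope peak is still well above the propagated HPO jitter, but the margin shrinks from a few hundred to a few tens, which is why HPO peaks could become visible in the valleys of the downstream spectrum.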

A report of the measured beam jitter at LLO is available in T1300368.

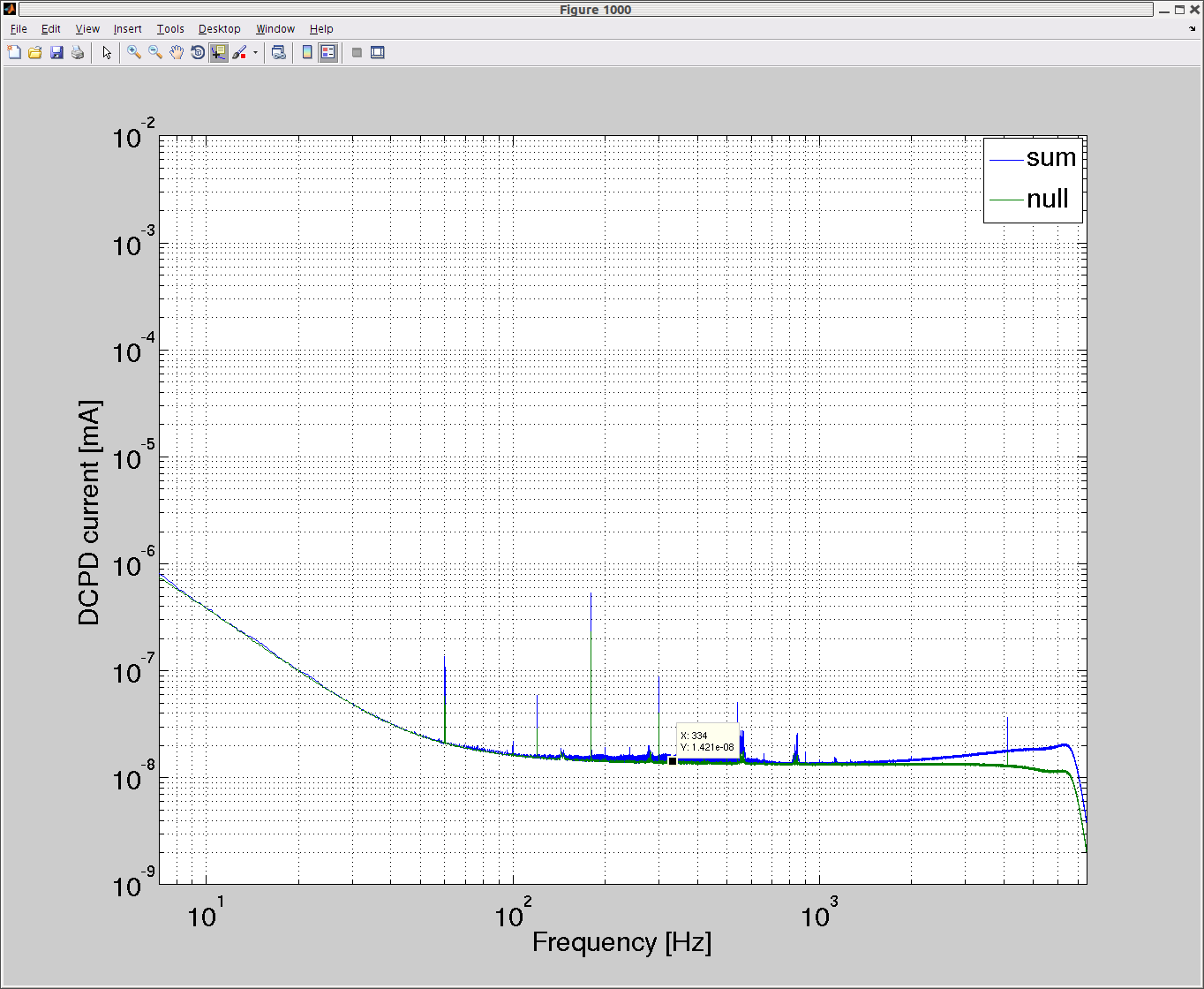

An earlier measurement at LHO is reported in alog 21212. Using an IMC divergence angle of 1.6x10^-4 rad, the periscope peak at 280 Hz is around 10^-4/√Hz. This is closer to the first posted spectrum with the "wrong" calibration. Here I post this spectrum again and add the DBB measurement of the jitter out of the HPO propagated through the PMC (1.6%), but scaled by a fudge factor of 2. The Sep 11, 2015, spectrum shows a more or less flat noise level below 80 Hz, whereas the recent spectrum shows 1/f noise. The HPO spectrum also shows a 1/f dependency and is within a factor of 2 of the first posted spectrum. If jitter into the IMC is the main coupling mechanism into DARM, the HPO jitter peaks above 400 Hz are well below the PSL table jitter after the PMC and they would not show up in the DARM spectrum.
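The conversion and scaling used for this comparison can be sketched as follows. The sketch assumes that relative pointing noise is the beam angle divided by the IMC divergence angle, and that the PMC transmission and fudge factor act as flat multipliers; the function names are hypothetical and the input values are the ones quoted in this entry.

```python
IMC_DIVERGENCE_RAD = 1.6e-4   # IMC divergence angle quoted in the text


def angle_to_relative(angle_rad, divergence_rad=IMC_DIVERGENCE_RAD):
    """Convert an angular jitter (rad/sqrt(Hz)) to relative pointing noise."""
    return angle_rad / divergence_rad


def relative_to_angle(relative_jitter, divergence_rad=IMC_DIVERGENCE_RAD):
    """Convert relative pointing noise (1/sqrt(Hz)) back to an angle."""
    return relative_jitter * divergence_rad


def hpo_trace(hpo_jitter, pmc_transmission=0.016, fudge_factor=2.0):
    """Scale the DBB jitter out of the HPO as done for the overlaid trace."""
    return hpo_jitter * pmc_transmission * fudge_factor


# Periscope peak at 280 Hz expressed as an angle.
periscope_angle = relative_to_angle(1e-4)

# Overlaid HPO trace level at 300 Hz.
hpo_level_300hz = hpo_trace(2e-5)

print(f"Periscope peak: {periscope_angle:.2e} rad/sqrt(Hz)")
print(f"Scaled HPO trace at 300 Hz: {hpo_level_300hz:.2e} /sqrt(Hz)")
```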