TITLE: 11/24 Owl Shift: 08:00-16:00 UTC (00:00-08:00 PST), all times posted in UTC

STATE of H1: Meh

INCOMING OPERATOR: Ed

SHIFT SUMMARY: Anamaria left a couple of suggestions for things I could do to help improve the range. I was going to try them for an hour but caused a lockloss in the process. I have had trouble locking throughout my shift. There are only two ISI configurations I could try without using BRSX (which is still rung up). USEISM_NOBRSXY took me the furthest towards NLN (I was in NLN once with this configuration) but it seems unstable at ~37 mHz.

LOG:

9:08 Lockloss as I tried to engage SRC1 P. Not sure how. I think that's because I forgot to turn on the -20dB filter and turn off the integrator. Sorry. I was going to try moving SR2 and hope that SRM follows.

10:12 Lost lock a couple of times during the PRMI to DRMI transition. It turned out SRM pitch was stuck in a bad alignment. I moved SRM while PRMI was locked, then transitioned. ASC was able to engage without issue.

10:18 Lockloss 1 minute into NLN.

10:48 Lockloss twice at SWITCH_TO_QPDS.

11:13 Lockloss at CARM_ON_TR. I wonder if the useism and wind are becoming a problem.

11:25 Another lockloss at SWITCH_TO_QPDS.

~11:50 Tried to engage BRSX after another lockloss at CARM_ON_TR but it's still rung up. Reverted the configuration to no BRSX.

12:02 Tried switching to USEISM_NOBRSXY. It's not in the recommended list, but with BRSX rung up I've kinda run out of options.

Made it through SWITCH_TO_QPDS with the USEISM_NOBRSXY configuration.

12:30 Arrived at NOISE_TUNINGS. Staying here for half an hour.

While staying here I noticed AS90/POP90 dropped suddenly. I tried several things to improve AS90/POP90. Moving SR2 seems to work. See alog31813

13:08 Ran a2l.

13:14 lockloss.

13:27 Went to LVEA to toggle noise eater. Checked with LLO ops (Jeramy)

13:50 Kyle to BSC8 to continue his work from yesterday.

14:32 Kyle out

14:41 Lost lock a few times at PRMI_LOCKED and DRMI_LOCKED. Tidal seems unstable. Recently lost lock at SWITCH_TO_QPDS. I'm switching the seismic config back to Windy no BRSX

15:12 Switching ISI config back to USEISM_NOBRSXY after 3 locklosses at FIND_IR. Wind reached 20 mph.

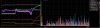

15:39 Lockloss at LOWNOISE_ASC (IMC-F ran away at 37 Hz). PRMI striptool screenshot attached.