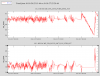

Summary: new ISS 2nd loop gain = 17 dB.

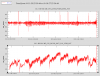

Since we have changed the amount of light on the inner loop PD (PDA, alog30871) and have also decided to lock with an input power of 25 W, the gain of the ISS second loop needed to be re-tuned. The goal of this adjustment is to maintain the intended UGF of the second loop. This morning I measured the open loop transfer function and adjusted the gain. The measurements were done with the IMC locked at 25 W and the second loop locked with DC coupling enabled. The inner loop was closed with a gain of 18 dB and a diffracted power of 4% as usual (alog30871).

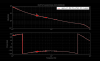

The attached image shows the measured open loop transfer function of the ISS second loop. The UGF was tuned to 19 kHz (it was 18 kHz back in September, alog29915), where the phase margin is almost the same value, 45 deg. The gain margin is 7 dB. Everything seems good. The raw data files are also attached.
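For anyone who wants to re-derive the UGF and margins from the raw data, here is a minimal sketch of how it could be done. It is not the script used for this entry. The function name `loop_margins` is hypothetical, the format of the attached files is not assumed (the example runs on a synthetic integrator-plus-delay loop, not on measured data), and it assumes the sign convention where the phase margin is measured from -180 deg and the frequency points are sorted in ascending order.

```python
import numpy as np


def loop_margins(freq_hz, olg):
    """Return (ugf_hz, phase_margin_deg, gain_margin_db) of a sampled open loop gain.

    Assumes ascending frequencies and instability at -180 deg of phase.
    Any value that cannot be found in the sampled band is returned as None.
    """
    f = np.asarray(freq_hz, dtype=float)
    g = np.asarray(olg, dtype=complex)
    logf = np.log10(f)
    mag_db = 20.0 * np.log10(np.abs(g))
    phase_deg = np.degrees(np.unwrap(np.angle(g)))

    def crossing(y, level):
        # First point where y crosses `level`, linearly interpolated in log10(f).
        d = y - level
        hits = np.nonzero(d[:-1] * d[1:] <= 0.0)[0]
        if hits.size == 0:
            return None
        i = hits[0]
        span = d[i] - d[i + 1]
        frac = 0.0 if span == 0.0 else d[i] / span
        return logf[i] + frac * (logf[i + 1] - logf[i])

    log_ugf = crossing(mag_db, 0.0)          # |G| = 1
    log_f180 = crossing(phase_deg, -180.0)   # phase = -180 deg

    ugf = None if log_ugf is None else 10.0 ** log_ugf
    pm = None if log_ugf is None else 180.0 + np.interp(log_ugf, logf, phase_deg)
    gm = None if log_f180 is None else -np.interp(log_f180, logf, mag_db)
    return ugf, pm, gm


# Synthetic example only: an integrator with a pure time delay, chosen so that
# the UGF is 19 kHz and the phase margin is 45 deg. This is NOT the ISS loop model.
f = np.logspace(3, 5, 2001)
f_u = 19e3
tau = 45.0 / (360.0 * f_u)  # delay that eats 45 deg of phase at f_u
s = 2j * np.pi * f
G = (2.0 * np.pi * f_u / s) * np.exp(-s * tau)

ugf, pm, gm = loop_margins(f, G)
print(f"UGF = {ugf / 1e3:.2f} kHz, phase margin = {pm:.1f} deg, gain margin = {gm:.2f} dB")
```

To use it on the attached data, load the frequency, magnitude and phase columns into a complex array first. If the measurement uses the opposite sign convention (instability at 0 deg), flip the sign of the transfer function before calling the function.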